We learn not to worry about purpose, because such worries never lead to the sort of delight we seek.

Steven Weinberg, To Explain the World

1 Introduction

(Ontological) proliferation is the enemy of (theoretical) elegance. In contrast, combinatorics is our friend: when we try to model some aspect of the world, we want to account for lots of phenomena with just a few building blocks that can be put together to allow for superficial variety and complexity. One reason for this perspective is that it conforms to the intuition that at some level a theory is a form of “data compression” (Chaitin 2006). Another reason, for linguistics—and equally applicable to other cognitive sciences—is that the “less attributed to genetic information (in our case, the topic of UG) for determining the development of an organism, the more feasible the study of its evolution” (Chomsky 2007: 4) and the more plausible its neural implementation. One strategy for achieving such elegance in linguistics is to carve up a complex domain into isolable pieces, so that from the “welter of descriptive complexity” we can abstract “certain general principles governing computation that would allow the rules of a particular language to be given in very simple forms” (Chomsky 2000: 122).

But what if our approach is so narrow and reductive as to propose almost nothing about almost nothing? Such an anxiety perhaps arises naturally from combining a program of abstraction like this with the substance-free approach I adopt (Hale & Reiss 2000; 2008; Reiss 2017b). Substance-free phonology denies a role for markedness and perceptual or articulatory considerations in phonological explanation, and at first glance it does not offer a very promising vista of phonology as a domain for seeking intellectual delight: if we get rid of all the phonetics and minimize the computational system, what’s left to keep us occupied? I address this anxiety by showing, in schematic but explicit fashion, how to model a wide variety of superficially diverse phenomena with just two set-theoretic mechanisms. This pared-down inventory does not entail that our analyses will be uninteresting, since “[a]s concepts and principles become simpler, argument and inference tend to become more complex—a consequence that is naturally very much to be welcomed” (Chomsky 1982: 3). Free of any dependence on phonetic substance or teleological notions of purpose, my approach seeks delight in the pure logic of phonological computation.

I propose that the complex problem of modeling phonological phenomena can be fruitfully decomposed, and in this paper I examine only the segment-internal changes effected by the most basic mappings (‘rules’). I argue that all changes to the internal structure of segments can be reduced to combinations of feature deletion by set subtraction and feature insertion by unification. Once stated, the idea seems trivial—after all, it’s obvious that you can make a d into a t by removing +VOICED and adding –VOICED. However, we’ll see that some subtleties are required in the chain of reasoning that will lead us to a full treatment of the complex phenomenon of Hungarian voicing assimilation at the end of the paper.

In order to appreciate our narrow focus, let’s consider various aspects of a simple alternation, and see how they can be distinguished. The process in Lamba (Doke 1938) that turns an onset /l/ into [n] when the preceding onset contains a +NASAL segment raises issues of what can be a possible environment in a rule and how locality can be defined in terms of adjacency, linear order, syllable structure, and so on. The process also demands that we consider whether the changes in the features LATERAL and NASAL happen in parallel, as a single rule, or as different steps in the derivation. Even the change in a single feature, say from –NASAL to +NASAL, can be analyzed either as a single rule or as a two-step transformation: deletion of the valued feature –NASAL, then insertion of its opposite +NASAL, via separate rules. Other processes raise further issues about structural changes in the mappings from Underlying Representations to Surface Representations: for example, metathesis and the insertion and deletion of segments (as opposed to features) involve manipulation of sequences of segments and their precedence relations (Raimy 2000; Papillon 2020).

In light of all these questions, I focus here on just the narrow topic of feature changes within segments, like /l/ surfacing as [n]. Under certain simplifying assumptions, I propose that a complete account of changes within segments uses only the two primitive mechanisms: deletion of valued features like +NASAL via an operation of set subtraction, and insertion of valued features via an operation of set unification.1 Recent work (Bale et al. 2014; Bale & Reiss 2018; Bale et al. 2020) has proposed these operations as elements of a basic inventory of computations for phonology: the novelty of this paper is the stronger claim that just these two operations are necessary and sufficient to model all intrasegmental changes. This claim is embedded in the larger framework of assumptions in (1) that my co-authors and I adopt and justify (or at least acknowledge as temporary idealizations).

(1) Assumptions based on previous work

a. Segment symbols are abbreviations for sets of valued features.

b. Segments must be consistent (i.e., they don’t contain both +F and –F for any feature F).

c. Segments need not be complete: segments may lack a specification for some features. Assuming underspecification simplifies phonology in several senses that we discuss.

d. Feature insertion rules involve unification (similar to set union) with a singleton set.

e. Feature deletion involves set subtraction and can affect several features at once.

f. Greek letter variables, also known as α-notation, are part of phonology, and not just part of the metalanguage of phonologists: rules may contain variables whose domain is {+,–}.

g. Targets and environments of rules refer to natural classes, defined in a manner that slightly updates the traditional conception.

h. Rules are functions mapping between phonological representations, and the phonology of a language is a complex function composed of particular rules built from a toolkit of representational and computational primitives. For the most part, the rules developed here can be treated as functions mapping strings of segments to strings of segments; however, where, e.g., syllable structure is referenced in a rule, mapping between more complex representations will be necessary.

All of these points will be illustrated and elucidated as we proceed. As mentioned above, rules deleting and inserting full segments, tonal patterns and many other phenomena are not treated in this paper. The environments and conditions for processes such as long-distance vowel harmony are also not treated, but the intention is that the accounts of segment-internal changes developed here will ultimately be part of a full analysis of such processes.

2 Background on Logical Phonology

In this section, I provide more details concerning the list of assumptions given in the previous section. The term Logical Phonology is used here to situate the paper in a body of related work. Logical Phonology is an approach that is consistent with the notion of Substance Free phonology introduced by Hale & Reiss (2000; 2008) and Reiss (2017b), although that term has come to be applied to a variety of often inconsistent perspectives. (Substance Free) Logical Phonology attempts to characterize the formal nature of phonological computation without reference to any notion of markedness or to functionalist concepts like contrast, ease of articulation or the idea that phonological computation ‘repairs’ ill-formed structures. The relationship of Logical Phonology to an approach to phonetics called Cognitive Phonetics is presented in papers such as Volenec & Reiss (2017; 2019; 2020; 2022).

The basic toolkit of concepts used in this paper was developed in Bale et al. (2014), Bale & Reiss (2018) and Bale et al. (2020). It is useful to immediately distinguish three ‘levels’ at which the discussion in Logical Phonology takes place. The lowest level is the pure mathematical or logical level of set theory. At this level, we make reference to sets, subsets, membership, set subtraction and unification, which is related to the more familiar set union operation. Aside from some minor details about our version of unification, everything at this level is adopted wholesale from set theory.

At the next level, we propose two kinds of phonological rules, ones that incorporate set subtraction and ones that incorporate unification. These operations replace the traditional ‘→’ of generative phonology rules. In subtraction rules, strings containing target segments (which are sets) are mapped to strings containing corresponding segments that are the result of subtracting a set of features (the structural change, in traditional terms) from the targets, when the targets appear in the environment specified by the rule. Rules that are built with unification are a bit more complex, since unification, as a mathematical notion, is a ‘partial’ operation, which means that it might not yield an output. When unification, as part of a phonological rule, fails to generate an output, the rule provides a default identity mapping: the rule’s output is identical to its input. In our model every rule applies to every string at the appropriate point in the derivation, although the application may be vacuous in several senses. In contrast to the adoption of standard math at level one, our level two discussion manifests our own version of phonological computation, including, for example, what happens when unification failure occurs as part of rule application.

At the third level, we discuss ‘processes’ in particular languages, such as final devoicing of obstruents or BACK harmony among vowels. While these processes may be analyzed as the result of individual rules in another framework, here, they have no theoretical status and merely refer to combinations of one or more of the rules described at level two. The processes discussed at level three are not part of the model of Logical Phonology: they are just a way to relate our results to the kinds of data and problems that phonologists have traditionally discussed. For example, we treat feature-changing processes as the result of at least two rules.

It is not unusual to get a “So what?” reaction to this kind of ‘sterile’ research. What are the empirical consequences of deciding that feature-changing processes should be modeled as combinations of several basic rules, rather than as single rules? What new data patterns can we ‘get’? The late Sam Epstein, who was famous for working on abstruse topics like whether dominance or c-command should be taken as a primitive notion of syntax, had a good response to such challenges. A senior scholar once told Sam that his musings might provide some entertainment and an endless source of paper topics, but that they could have no empirical consequences whatsoever. Sam pointed out that, in fact, making a choice between the two approaches to syntax has empirical implications for every single sentence ever generated by any human grammar. Fundamental questions matter if we are committed to a realist, internalist view of language. I’d like to flatter myself by claiming that Logical Phonology is Epsteinian phonology.

2.1 Segments are consistent, potentially incomplete feature sets

As a first approximation I treat segments as sets of (valued) features. For example, we can represent the labial oral stops p and b as the sets in (3a) and (3b). Among the simplifications adopted here are the following:

(2) Three simplifications

a. Not all features that are present are necessarily shown. This is just a matter of typographical convenience.

b. An actual feature set in a morpheme’s lexical representation may be associated with a timing slot, and the segment might be thought of as the timing slot combined with the feature set. Since our concern here is the range of segment-internal changes, we can ignore timing slots, tones, syllable structure, etc.

c. We abstract away from contour segments like affricates, which potentially have a more complex structure (see Shen 2016 for discussion).

Point (2a) is important to distinguish from our use of underspecification. The set of features in (3c) corresponds to neither /p/ nor /b/, but to a third possible segment, B, a labial stop lacking any specification for the feature VOICED.2

(3) Three labial oral stops

a. /p/ = {–VOICED, –SONORANT, –CORONAL, +ANTERIOR, +LABIAL, –NASAL, –CONTINUANT}

b. /b/ = {+VOICED, –SONORANT, –CORONAL, +ANTERIOR, +LABIAL, –NASAL, –CONTINUANT}

c. /B/ = {–SONORANT, –CORONAL, +ANTERIOR, +LABIAL, –NASAL, –CONTINUANT} (no value for VOICED)

These segments all fulfill our assumed condition that segments be consistent, that is, that they not contain both +F and –F for any feature F. The possibility of a segment like B shows that we accept the existence of underspecification: segments can be incomplete, lacking any specification for one or more features. Further discussion of the empirical justification for underspecification, building on previous work (e.g. Keating 1988; Choi 1992), can be found in Bale & Reiss (2018) and related work.
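To make these representational assumptions concrete, here is a minimal Python sketch. It assumes an encoding of our own choosing, not one proposed in the text: a valued feature is a (value, feature) pair, with the ASCII hyphen standing in for ‘–’, and a segment is a frozenset of such pairs. The helpers `seg`, `is_consistent` and `is_specified` are hypothetical names introduced for illustration, and only the features listed in (3) are modelled.

```python
# Illustrative encoding (an assumption): a valued feature is a (value, feature)
# pair, "-" stands in for "–", and a segment is a frozenset of such pairs.

def seg(*specs: str) -> frozenset:
    """Hypothetical helper: seg('+VOICED', '-NASAL') -> {('+', 'VOICED'), ('-', 'NASAL')}."""
    return frozenset((spec[0], spec[1:]) for spec in specs)


def is_consistent(segment: frozenset) -> bool:
    """True iff no feature F occurs with both +F and -F."""
    return not any(("+", f) in segment and ("-", f) in segment for _, f in segment)


def is_specified(segment: frozenset, feature: str) -> bool:
    """True iff the segment carries some value for the feature."""
    return any(f == feature for _, f in segment)


# Features shared by the three labial oral stops in (3).
LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
p = seg("-VOICED", *LABIAL_STOP)
b = seg("+VOICED", *LABIAL_STOP)
B = seg(*LABIAL_STOP)  # underspecified: no value for VOICED

for name, s in [("p", p), ("b", b), ("B", B)]:
    print(name, is_consistent(s), is_specified(s, "VOICED"))
# p True True
# b True True
# B True False

print(is_consistent(seg("+VOICED", "-VOICED")))  # False: not a possible segment
```

All three stops are consistent; only B lacks a VOICED value, which is exactly the incompleteness that underspecification allows.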

2.2 Natural classes

Given our treatment of segments as sets of features, natural classes of segments, which are sets of segments, are fundamentally just sets of sets of features. For example, in a language containing the three oral labial stops, p,b,B, this natural class might be characterized as the set of segments whose members are all and only the segments in the language that are supersets of this set of features:3

(4) Set of features that define the natural class containing only p, b, B

a. {–SONORANT, –CORONAL, +ANTERIOR, +LABIAL, –NASAL, –CONTINUANT}

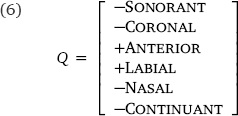

So, the set Q of labial stops in language L is the following:

(5) Q = {q : q ⊇ {–SONORANT, –CORONAL, +ANTERIOR, +LABIAL, –NASAL, –CONTINUANT} and q ∈ L}

Because this notation is unwieldy, we make use of traditional square bracket notation to denote classes of segments in the target and environment of rules. The brackets are used to show that natural classes are of a different set-theoretic type from the set of features in the structural change of a rule: the latter are sets of (valued) features, whereas the former are sets of sets of valued features, that is, sets of segments. To say it differently, (5) denotes the same natural class as (6), and both are different from the representation of the segment in (3c). They all list the same valued features, but the rest of the notation matters.

To be more precise, in (6), I’ve left implicit the second condition given in (5), the one that requires that a segment be in the inventory of the language in question—just keep in mind that the intensional natural class description in (6) may have the extension {p,b,B} in one language, but maybe {p,b} or {p} in another.
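The way a single intensional description picks out different extensions in different inventories can be sketched as a filter over an inventory. This is a minimal sketch under an illustrative encoding (valued features as (value, feature) pairs, ‘–’ written as ‘-’); `natural_class` is a hypothetical helper, and the feature values given to t are chosen only for the example. Note that in this encoding the description and the segment B are the same kind of Python object: the type distinction that the square brackets mark is carried only by how `natural_class` uses its first argument.

```python
# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets of them.

def seg(*specs: str) -> frozenset:
    return frozenset((spec[0], spec[1:]) for spec in specs)


def natural_class(description: frozenset, inventory: dict) -> set:
    """Extension of an intensional description in one inventory:
    the names of all segments that are supersets of the description."""
    return {name for name, segment in inventory.items() if segment >= description}


LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
description = seg(*LABIAL_STOP)  # the feature list that (6) writes in square brackets

p = seg("-VOICED", *LABIAL_STOP)
b = seg("+VOICED", *LABIAL_STOP)
B = seg(*LABIAL_STOP)
# Hypothetical coronal stop, included only to show a segment outside the class.
t = seg("-VOICED", "-SONORANT", "+CORONAL", "+ANTERIOR", "-LABIAL", "-NASAL", "-CONTINUANT")

print(sorted(natural_class(description, {"p": p, "b": b, "B": B, "t": t})))  # ['B', 'b', 'p']
print(sorted(natural_class(description, {"p": p, "b": b, "t": t})))          # ['b', 'p']
print(sorted(natural_class(description, {"p": p, "t": t})))                  # ['p']
```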

The treatment of natural classes sketched here relies on the superset (or subset) relation. This treatment fails for more complex representations, including ones that do not correspond to just single segments and those that involve α-notation (Bale & Reiss In Prep). We’ll ignore these complications in this paper.

2.3 Basic set operations

The basic set operations I attribute to phonological rules are set subtraction (also called set difference) and unification. Set subtraction here has the standard definition: If A and B are sets, then A – B = C, where C = {c : c ∈ A and c ∉ B}. That is, the members of A – B are all the elements of A except for those that are in B. Here are some examples:

(7) Examples of set subtraction

• {x, y} – {x, z} = {y}

• {+VOICED, –SONORANT, +CORONAL} – {+VOICED, +SONORANT} = {–SONORANT, +CORONAL}

In each of these examples, note that the subtrahend, the set that is being subtracted (the one on the right of the ‘–’ symbol) can contain elements that are not present in the minuend, the set on the left of the operator. Those elements are irrelevant to the outcome of the computation.
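Because segments are modelled as sets, set subtraction needs no special machinery. In a Python sketch that assumes valued features are encoded as (value, feature) pairs (with ‘-’ for ‘–’) and uses a hypothetical helper `seg`, it is just the built-in set difference, and both examples in (7) come out as stated, including the irrelevance of subtrahend elements that are absent from the minuend.

```python
# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets of them.

def seg(*specs: str) -> frozenset:
    return frozenset((spec[0], spec[1:]) for spec in specs)


# First example in (7): A - B keeps the members of A that are not in B.
print(sorted({"x", "y"} - {"x", "z"}))  # ['y']

# Second example in (7): +SONORANT is not in the minuend, so it has no effect.
minuend = seg("+VOICED", "-SONORANT", "+CORONAL")
subtrahend = seg("+VOICED", "+SONORANT")
print(minuend - subtrahend == seg("-SONORANT", "+CORONAL"))  # True
```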

The second operation I use is unification, which is related to the familiar operation of set union.4 Set union combines the members of sets into a single set: If A and B are sets, then A ∪ B = C, where C = {c : c ∈ A or c ∈ B}. Union is called a total operation since the union of any two sets always yields a set. Instead of union, we use a version of unification, which is typically used to combine expressions that are more complex than sets. Unification is useful here because the members of the segment sets are actually ordered pairs of values (‘+’ or ‘–’) combined with features. For a feature F, we refer to the valued features +F and –F as ‘opposites’. Unification ⊔ requires the phonology to look into the valued features that are the elements of feature sets. If A and B do not contain opposites, then A ⊔ B = A ∪ B. However, if A and B do contain any conflicting values, that is if A ∪ B is not consistent, then A ⊔ B is undefined.

(8) Unification examples

• {+F, +G} ⊔ {+G, –H} = {+F, +G, –H} (Recall that the elements of a set are unique: {a, b, c} is the same set as {a, a, a, b, c, c}.)

• {+F, +G} ⊔ {–G, –H} is undefined, because {+F, +G} ∪ {–G, –H} = {+F, +G, –G, –H}, which contains the opposites +G and –G.

Because the output of unification may be undefined, it is called a partial operation, unlike normal union which always yields an output set. When the result of unification is undefined we refer to unification failure. When unification failure occurs in the application of a phonological rule, we assume that the rule then maps the input string to an identical output.
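Unification can be sketched in the same illustrative Python encoding (valued features as (value, feature) pairs, ‘-’ for ‘–’). Returning `None` for an undefined result is our assumption about how to represent partiality, not part of the proposal, and `opposite` and `unify` are hypothetical helper names. The two calls reproduce the examples in (8).

```python
from typing import Optional

# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; None stands for an undefined unification result.

def seg(*specs: str) -> frozenset:
    return frozenset((spec[0], spec[1:]) for spec in specs)


def opposite(valued_feature: tuple) -> tuple:
    """Map +F to -F and -F to +F."""
    value, feature = valued_feature
    return ("-" if value == "+" else "+", feature)


def unify(a: frozenset, b: frozenset) -> Optional[frozenset]:
    """A ⊔ B: the union A ∪ B if it is consistent, otherwise None (unification failure)."""
    union = a | b
    if any(opposite(vf) in union for vf in union):
        return None
    return union


print(unify(seg("+F", "+G"), seg("+G", "-H")) == seg("+F", "+G", "-H"))  # True
print(unify(seg("+F", "+G"), seg("-G", "-H")))  # None: +G and -G clash
```

Checking consistency after taking the union mirrors the way the text breaks unification down into set union plus a consistency condition.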

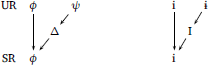

2.4 Logical relations among segments in patterns

Our discussion makes use of three abstract segment symbols ϕ ≠ ψ ≠ Δ corresponding to three different sets of valued features defined as follows. Unless otherwise noted, Δ is unspecified for some (unique) feature F, but is otherwise identical to ϕ and ψ, which are identical to each other, aside from having opposite values for F. For concreteness, an example of such a triplet of segments would be p,b,B, where, as above, B is a labial stop unspecified for voicing.

(9) Three abstract segments

• ∃! F s.t. ϕ and ψ differ w.r.t. F: the two segments differ w.r.t. exactly one feature.

• Δ = ϕ – {–F} and Δ = ψ – {+F}: if you take –F from ϕ you get Δ, and if you take +F from ψ you get Δ (or vice versa).

• ϕ ∩ ψ = Δ: the intersection of the two fully specified segments is the underspecified one. This follows from the definition of Δ.

• Example triplet: p, b, B

The symbols ϕ, ψ, Δ are just part of our metalanguage for discussing phonology. These three segment variables represent a minimal case of representational distinctiveness, with opposite values and presence vs. absence restricted to a single feature. The logic presented here trivially extends to richer representational contrasts involving more than one feature. It is not only methodologically useful to start out with the simplest cases, but it is also impressive to see how much empirical coverage such cases afford—we get a lot of empirical coverage without a lot of machinery.
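The relations in (9) can be checked mechanically for the triplet p, b, B. This sketch assumes an illustrative encoding (valued features as (value, feature) pairs with ‘-’ for ‘–’, only the features from (3)); it uses Python's symmetric difference operator `^` to find the features on which ϕ and ψ disagree.

```python
# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets of them.

def seg(*specs: str) -> frozenset:
    return frozenset((spec[0], spec[1:]) for spec in specs)


LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
phi = seg("-VOICED", *LABIAL_STOP)  # p
psi = seg("+VOICED", *LABIAL_STOP)  # b
delta = seg(*LABIAL_STOP)           # B

# Features on which phi and psi disagree: exactly one (F = VOICED).
print({feature for _, feature in phi ^ psi})  # {'VOICED'}
print(phi - {("-", "VOICED")} == delta)      # True: ϕ – {–F} = Δ
print(psi - {("+", "VOICED")} == delta)      # True: ψ – {+F} = Δ
print(phi & psi == delta)                    # True: ϕ ∩ ψ = Δ
```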

2.5 Greek letter variables—α-notation

The use of symbols like ϕ, ψ, Δ is distinct from the use of Greek letters as the familiar α-notation variables with the domain {+,–}, to which I now turn. Most importantly, whereas the previous discussion involved variables of our metalanguage, I assume that Greek letter variables do occur in phonological expressions (pace McCawley 1971), and they express dependencies among parts of a phonological representation. However, such variables cannot occur alone, unmatched in a linguistic expression to denote existence of a feature value. In other words, I reject the use of, say, αNASAL to mean “some specified value of nasal” (see Bale & Reiss 2018: 454). I only use α-notation to express agreement or mutual dependency, the identity of values on tokens of a given feature. A valid use of α is, for example, to say that all obstruents in a cluster must be αVOICED, meaning that they must all be +VOICED or all be –VOICED. It is formally quite different to use variables to denote the mere existence of a valued feature in a segment set, and I posit that this mechanism does not exist in the phonological faculty, and I similarly reject symbols like ±VOICED. Relatedly, there are no lexical items that contain Greek letter variables, contra, for example, Kiparsky (1985: 92). The “existential” use of variables appears to be unnecessary, and it also would wreak havoc with natural class logic in phonology. Using our example of p,b,B, without existential variables we cannot refer to the fully specified stops as those containing αVOICED and thereby make a natural class of p and b to the exclusion of B; and there is also no way to group B into a class with either one of the other two to the exclusion of the remaining one (Bale et al. 2014), since we do not allow the use of negation, as in “Not +VOICED”. This appears to be a desirable outcome.
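The agreement use of α can be illustrated as a condition that holds only if a single value α ∈ {+, –} is carried by every segment in a sequence. This is a hypothetical helper (`agree_alpha`) over an illustrative (value, feature) encoding, not a claim about how the phonological faculty implements variables, and t's feature values are chosen for the example. In keeping with the rejection of existential variables, the sketch offers no way to ask whether a segment has “some value” for a feature, so the underspecified B agrees with nothing.

```python
# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets of them.

def seg(*specs: str) -> frozenset:
    return frozenset((spec[0], spec[1:]) for spec in specs)


def agree_alpha(segments: list, feature: str) -> bool:
    """Hypothetical reading of 'every segment is αF': some single value α in
    {+, -} is carried by every segment. No helper is offered for the rejected
    'existential' use (some value of F, whichever it is)."""
    return any(all((alpha, feature) in s for s in segments) for alpha in "+-")


LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
p = seg("-VOICED", *LABIAL_STOP)
b = seg("+VOICED", *LABIAL_STOP)
B = seg(*LABIAL_STOP)
# Hypothetical coronal stop, with feature values chosen only for this example.
t = seg("-VOICED", "-SONORANT", "+CORONAL", "+ANTERIOR", "-LABIAL", "-NASAL", "-CONTINUANT")

print(agree_alpha([p, t], "VOICED"))  # True: both are -VOICED
print(agree_alpha([b, p], "VOICED"))  # False: the values differ
print(agree_alpha([B, p], "VOICED"))  # False: B has no VOICED value to share
```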

2.6 Segment mapping diagrams

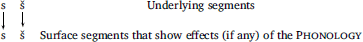

I treat phonological rules as functions mapping (in the simple cases considered here) strings of segments to strings of segments. As explained in Bale & Reiss (2018), we need to reject the informal traditional notion that rules apply to individual segments (or classes of segments) and map them to other segments (in a context), because this approach does not allow the rules of a language to compose into a single function that computes a derivation for every input representation.5 Despite this view of rules, the following discussion makes use of segment mapping diagrams (SMDs) to illustrate relations among segments that appear in URs and SRs. These diagrams are just aids to exposition. In the simplest case, where there are no relevant phonological rules, the SMD showing the mapping between segments in the input and segments in the output is straightforward, as in this case of a language with segments s and š which are not involved in any phonological interaction. Again, the SMDs are not part of human phonological grammar, and they are not part of our theory: they are part of the epistemic structure of phonology-the-discipline, merely visual aids to help phonologists recognize and discuss logical patterns that arise from different combinations of basic rules.

(10) Simple SMD

The top row of the diagram shows segments that appear in URs, and the bottom row shows segments that occur in SRs. The arrows show the relations between the segments, with vertical arrows (most typically) showing identity mappings (or else the ‘evolution’ of a segment that doesn’t undergo split or merger with another segment). The diagram for (10) represents the very boring fact that underlying /s/ maps to surface [s] and underlying /š/ maps to surface [š].

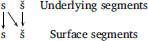

An SMD for a language with a neutralization rule that merges s and š in some context looks like this, with diagonal arrows showing non-identity mappings due to the effects of rules.

(11) Segment mapping diagram (SMD) for neutralization:

The diagonal arrow shows, on the one hand, the split of underlying /s/ into [s] and [š], and on the other hand, the partial merger of underlying /s/ and /š/ into [š]. As we will see, more elaborate SMDs can be used to track the derivational history of segments through multiple rules. In such diagrams, there will be intermediate levels between URs and SRs. Section 4 will show how a wide range of attested processes can be reduced to combinations of rules based on set subtraction and unification and described with such SMDs.

3 The basic operations in rules

Since the focus in this paper is on segment-internal changes, I adopt a simplified picture of rule environments. In all the rules, the target segment is identified in input sequences by reference to at most one preceding and one following segment, sometimes supplemented by reference to a syllable-structure position such as ONSET or CODA. My rule syntax is close to a simple version of SPE rule syntax, as developed in Bale & Reiss (2018).

Phonological processes are sometimes categorized as feature-filling vs. feature-changing. As the names suggest, a feature-filling process might replace sequences containing B by sequences containing b in the corresponding position, whereas a feature-changing process might replace sequences containing p by sequences containing b in the corresponding position. I assume that ‘processes’ like this have no theoretical status, despite the fact that they are sometimes referred to as feature-changing vs. feature-filling rules. Instead, feature-filling processes are analyzed here as rules built from the unification operator, and feature-changing processes are analyzed as sequences of two or more rules—first a deletion rule built from the subtraction operator, followed by at least one feature-filling rule built from the unification operator. In brief, feature changing involves deletion of a valued feature and insertion of its opposite, in separate rules.

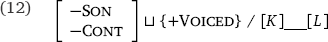

3.1 Unification rules

Feature-filling rules consist of a natural class description of a target set of segments, followed by the unification symbol ‘⊔’ (in place of the normal arrow symbol ‘→’), followed by the set that is the second argument of the unification operator (the set of valued features with which each member of the target set potentially unifies). For the environment segments to the right of the slash ‘/’, I use K and L to denote lists of features; enclosed in square brackets, [K] and [L] denote natural classes of segments. Using these symbols we can express a rule based on unification as in (12).

Developing the model sketched by Bale & Reiss (2018), I interpret this rule as follows:

(13) Interpretation of rule (12)

Rule (12) is the function that maps any finite string of segments x₁x₂ ⋯ xₙ to the string of segments y₁y₂ ⋯ yₙ such that, for each index i with 1 ≤ i ≤ n:

• IF xᵢ ⊇ {–SON, –CONT} and xᵢ₋₁ ⊇ {K} and xᵢ₊₁ ⊇ {L} THEN, IF xᵢ ∪ {+VOICED} is consistent, yᵢ = xᵢ ∪ {+VOICED}.

• OTHERWISE yᵢ = xᵢ

Note that the rule syntax makes use of unification, but the interpretation of the rule breaks unification down into normal set union along with the notion of consistency, as discussed above.

Let’s illustrate the application of such a rule by considering how it will affect input sequences containing p,b and B in the relevant environment. Let k, l each be a segment belonging to the class denoted by [K], [L], respectively.

(14) Applying rule (12)

a. If the input string is kBl then, since B ⊔ {+VOICED} = b, the output of the rule is kbl.

b. If the input string is kbl then, since b ⊔ {+VOICED} = b, the output of the rule is kbl. The rule applies vacuously, since unification applies vacuously here: the rule maps kbl to kbl.

c. If the input string is kpl, then we need to consider the result of p ⊔ {+VOICED}. Here unification is undefined, because p ∪ {+VOICED} is not consistent: the output of set union contains both +VOICED and –VOICED as members. This is a case of unification failure, and the rule semantics given in (13) yields an identity mapping in such cases. The output of the rule is kpl. This is another case of vacuous application, since the rule maps kpl to kpl.

d. The effect of the rule on any other sequence, say, banana, is null: the output string will be identical to the input. There is no case of an oral stop preceded by a member of class [K] and followed by a member of class [L]. This is also a kind of vacuous application.

To reiterate, the application of a phonological rule built from unification can be vacuous for three reasons: either the unification itself is vacuous, as in input kbl; or unification fails, as in input kpl; or rule conditions aren’t met, as in input banana. Contrary to most of the literature, we do not say, in the last case, “the rule did not apply”. In order to compose the rules into a single function, we need each rule to map every input to an output. In all three cases the rule application leads to an identity of input and output. That is all we need here to understand rules built with the unification operator.6
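The interpretation in (13) can be sketched as a Python function from lists of segments to lists of segments that reproduces the cases in (14). Assumptions: valued features are (value, feature) pairs with ‘-’ for ‘–’; ‘SON’ and ‘CONT’ are spelled out as SONORANT and CONTINUANT; a position without both neighbours is treated as not meeting the environment (the text does not specify edge behaviour); and k and l are hypothetical stand-ins for members of [K] and [L], built from placeholder features named K and L. The names `unification_rule` and `rule_12` are illustrative.

```python
from typing import Callable, List, Optional

# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets; strings are lists of segments.
Segment = frozenset
Rule = Callable[[List[Segment]], List[Segment]]


def seg(*specs: str) -> Segment:
    return frozenset((spec[0], spec[1:]) for spec in specs)


def unify(a: Segment, b: Segment) -> Optional[Segment]:
    """Union if consistent; None encodes unification failure."""
    union = a | b
    clash = any(("-" if v == "+" else "+", f) in union for v, f in union)
    return None if clash else union


def unification_rule(target: Segment, change: Segment,
                     left: Segment, right: Segment) -> Rule:
    """target ⊔ change / [left] ___ [right], evaluated at every position i."""
    def apply(xs: List[Segment]) -> List[Segment]:
        ys = []
        for i, x in enumerate(xs):
            # Assumption: a position lacking either neighbour never meets the environment.
            in_context = (0 < i < len(xs) - 1 and x >= target
                          and xs[i - 1] >= left and xs[i + 1] >= right)
            unified = unify(x, change) if in_context else None
            # Unmet conditions and unification failure both give y_i = x_i.
            ys.append(unified if unified is not None else x)
        return ys
    return apply


LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
p, b, B = seg("-VOICED", *LABIAL_STOP), seg("+VOICED", *LABIAL_STOP), seg(*LABIAL_STOP)
k, l = seg("+K"), seg("+L")  # hypothetical members of [K] and [L]

rule_12 = unification_rule(seg("-SONORANT", "-CONTINUANT"), seg("+VOICED"), seg("+K"), seg("+L"))

print(rule_12([k, B, l]) == [k, b, l])  # True: (14a), B ⊔ {+VOICED} = b
print(rule_12([k, b, l]) == [k, b, l])  # True: (14b), vacuous unification
print(rule_12([k, p, l]) == [k, p, l])  # True: (14c), unification failure
print(rule_12([k, l]) == [k, l])        # True: (14d), conditions never met
```

Every input yields an output list, so the function is total even though unification itself is partial, which is what allows such rules to compose.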

3.2 Set subtraction rules

Let’s now look at a rule built with the set subtraction operator. Instead of using the unification symbol in place of the traditional arrow ‘→’, as we did above, this rule uses the set subtraction symbol ‘–’. Part of the motivation for the Logical Phonology approach I adopt is the recognition that phonological rules using ‘→’ actually encode very different basic operations. Our approach does away with such ambiguity and obviates the need for rule diacritics, such as the ‘feature-filling’ vs. ‘feature-changing’ labels invoked by McCarthy (1994), among others.

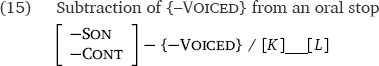

The other aspects of the rule match the example of the unification-based rule above.

We can now consider the interpretation of this rule built with the subtraction operator.

(16) Interpretation of rule (15)

Rule (15) is the function that maps any finite string of segments x₁x₂ ⋯ xₙ to the string of segments y₁y₂ ⋯ yₙ such that, for each index i with 1 ≤ i ≤ n:

• IF xᵢ ⊇ {–SON, –CONT} and xᵢ₋₁ ⊇ {K} and xᵢ₊₁ ⊇ {L} THEN yᵢ = xᵢ – {–VOICED}

• OTHERWISE yᵢ = xᵢ

Let’s illustrate the application of this rule using the same sequences we used for the unification rule above.

(17) Applying rule (15)

a. If the input string is kBl then, since B – {–VOICED} = B, the output of the rule is kBl, so the rule applies vacuously.

b. If the input string is kbl then, since b – {–VOICED} = b, the output of the rule is kbl. The rule applies vacuously here as well, since subtraction is once again vacuous.

c. If the input string is kpl, then we need to consider the result of p – {–VOICED}. The result is B, so the output is kBl.

d. The effect of the rule on any other sequence, say, banana, is null: the output string will be identical to the input.

The rule applies non-vacuously only in case (17c).7 Following Keating (1988) and related work, I accept the evidence that underspecification can persist in the output of the grammar. Once the existence of surface underspecification is accepted, it is clear that a model with subtraction rules raises the possibility of derived surface underspecification (Bale et al. 2014: 247–8). In fact, some cases of “final devoicing” have been analyzed as ‘delinking’ of voicing without replacement by voicelessness (Wiese 2000). In other words, a derived B, as in (17c), could potentially be a surface form.
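The subtraction rule can be sketched the same way, following (16) and reproducing (17): for /kBl/, /kbl/ and /kpl/ it yields kBl, kbl and kBl. The assumptions are illustrative: valued features are (value, feature) pairs with ‘-’ for ‘–’, SON and CONT are spelled out in full, positions at string edges never match, and k and l are placeholder segments standing for members of [K] and [L]. `subtraction_rule`, `rule_15` and `spell` are hypothetical names.

```python
from typing import Callable, List

# Illustrative encoding (an assumption): valued features are (value, feature)
# pairs with "-" for "–"; segments are frozensets; strings are lists of segments.
Segment = frozenset
Rule = Callable[[List[Segment]], List[Segment]]


def seg(*specs: str) -> Segment:
    return frozenset((spec[0], spec[1:]) for spec in specs)


def subtraction_rule(target: Segment, removed: Segment,
                     left: Segment, right: Segment) -> Rule:
    """target – removed / [left] ___ [right], evaluated at every position i."""
    def apply(xs: List[Segment]) -> List[Segment]:
        ys = []
        for i, x in enumerate(xs):
            # Assumption: a position lacking either neighbour never meets the environment.
            in_context = (0 < i < len(xs) - 1 and x >= target
                          and xs[i - 1] >= left and xs[i + 1] >= right)
            ys.append(x - removed if in_context else x)  # otherwise y_i = x_i
        return ys
    return apply


LABIAL_STOP = ("-SONORANT", "-CORONAL", "+ANTERIOR", "+LABIAL", "-NASAL", "-CONTINUANT")
p, b, B = seg("-VOICED", *LABIAL_STOP), seg("+VOICED", *LABIAL_STOP), seg(*LABIAL_STOP)
k, l = seg("+K"), seg("+L")  # hypothetical members of [K] and [L]
NAMES = {k: "k", l: "l", p: "p", b: "b", B: "B"}


def spell(xs: List[Segment]) -> str:
    """Render a list of segments with the symbols used in the text."""
    return "".join(NAMES[x] for x in xs)


rule_15 = subtraction_rule(seg("-SONORANT", "-CONTINUANT"), seg("-VOICED"), seg("+K"), seg("+L"))

for ur in ([k, B, l], [k, b, l], [k, p, l]):
    print(spell(ur), "->", spell(rule_15(ur)))
# kBl -> kBl
# kbl -> kbl
# kpl -> kBl
```

Unlike unification, subtraction is total, so no failure case needs handling; the identity mapping arises only when the subtrahend is absent or the conditions are not met.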

3.3 Illustration of feature-filling and feature-changing

We can now see that a unification rule like (12) is sufficient to model a feature-filling process, for example by filling in +VOICED on a /B/ and leaving underlying /p/ and /b/ unchanged.

(18) A feature-filling process

| UR | kBl | kbl | kpl |
|---|---|---|---|
| Unification Rule (12) | kbl | — | — |
| SR | kbl | kbl | kpl |

The dashes ‘—’ in this table reflect vacuous rule application. For input kbl the mapping is vacuous because unification is vacuous: b is already specified +VOICED. For input kpl the mapping is vacuous because unification fails: p is specified –VOICED, so unification with {+VOICED} by rule (12) is undefined. I discuss this further below.

To get a feature-changing process, we need to first apply a subtraction rule like (15) followed by a unification rule. Such a sequence of rules, (15) followed by (12), applied to kBl, kbl and kpl will yield the following derivations:

| (19) | A feature-changing process |

| UR | kBl | kbl | kpl |
|---|---|---|---|
| Subtraction Rule (15) | — | — | kBl |
| Unification Rule (12) | kbl | — | kbl |
| SR | kbl | kbl | kbl |
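A small sketch of the two-step derivation in (19), under the same hypothetical encoding: each rule is a total function on strings, and the feature-changing process is simply their composition, with the subtraction rule applying first. The target class {+LABIAL} for both rule (15) and rule (12) is assumed for illustration only.

```python
from functools import reduce
from typing import Callable, Dict, FrozenSet, List, Tuple

Feature = Tuple[str, str]          # a valued feature such as ("+", "VOICED")
Segment = FrozenSet[Feature]
Rule = Callable[[List[Segment]], List[Segment]]

def seg(*specs: str) -> Segment:
    """Build a segment from strings such as '+VOICED' or '-LABIAL'."""
    return frozenset((spec[0], spec[1:]) for spec in specs)

def consistent(s: Segment) -> bool:
    """A set of valued features is consistent unless some feature has both values."""
    names = [name for _, name in s]
    return len(names) == len(set(names))

# Hypothetical toy inventory: only LABIAL and VOICED are modelled.
INVENTORY: Dict[str, Segment] = {
    "p": seg("+LABIAL", "-VOICED"),
    "b": seg("+LABIAL", "+VOICED"),
    "B": seg("+LABIAL"),
    "k": seg("-LABIAL", "-VOICED"),
    "l": seg("-LABIAL", "+VOICED"),
}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}

def subtraction_rule(target: Segment, removed: Segment) -> Rule:
    """Feature deletion: x -> x - removed for every x that is a superset of target."""
    return lambda xs: [x - removed if target <= x else x for x in xs]

def unification_rule(target: Segment, added: Segment) -> Rule:
    """Feature filling: x -> x | added when consistent; otherwise x is copied unchanged."""
    return lambda xs: [x | added if target <= x and consistent(x | added) else x for x in xs]

def compose(*rules: Rule) -> Rule:
    """Chain rules into a single function; the first rule listed applies first."""
    return lambda xs: reduce(lambda acc, rule: rule(acc), rules, xs)

def run(rule: Rule, word: str) -> str:
    return "".join(SYMBOL[x] for x in rule([INVENTORY[c] for c in word]))

rule_15 = subtraction_rule(seg("+LABIAL"), seg("-VOICED"))   # hypothetical stand-in for (15)
rule_12 = unification_rule(seg("+LABIAL"), seg("+VOICED"))   # hypothetical stand-in for (12)
feature_changing = compose(rule_15, rule_12)

for word in ["kBl", "kbl", "kpl"]:
    print(word, "->", run(feature_changing, word))
```

Because each rule maps every string to a string, their composition is again such a function; this is one way to picture treating the whole phonology as a composed function.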

We will see that combining these simple mechanisms with judiciously chosen underlying forms and the use of α-notation will allow us to model a wide range of phenomena.

3.4 Exploiting unification failure to streamline grammar

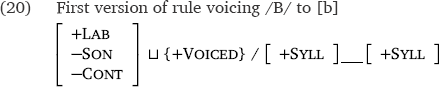

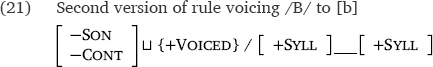

Unification failure often turns out to allow quite streamlined accounts of phonological processes. We’ll start with a toy example and see more realistic cases later. Suppose a language has a three-way contrast of /p,b,B/, and that /B/ is the only segment underspecified for the feature VOICED. Suppose further that there is a process whose effect is that /B/ surfaces as [b] between vowels, but that /p/ and /b/ surface unchanged. We need a feature-filling rule to insert +VOICED into /B/ and yield [b]. Here’s a first version of such a rule:

This works, but note that unification of every other consonant in the language aside from /B/ with the set {+VOICED} will be vacuous, either because that consonant is already specified +VOICED or because it is specified –VOICED and unification with {+VOICED} will fail. So there is no need to specify the place of articulation of the target class as +LABIAL. We can streamline the rule to this second version:

This also works, but since, by assumption, all segments other than /B/, vowels and consonants alike, are specified for either +VOICED or –VOICED, it is possible to make the rule even more general:

| (22) | Third version of rule voicing /B/ to [b] |

| [ ] ⊔ {+VOICED} / [ +SYLL ]___[ +SYLL] |

As pointed out in Bale et al. (2020), this means “unify every segment that is a superset of the empty set with the set {+VOICED} if the segment occurs between vowels.” Since basic set theory tells us that the empty set is a subset of every set, the rule unifies every segment occurring between vowels with {+VOICED}. Under our assumption that all segments but /B/ are specified for voicing, the rule will have no effect on other segments.

We may be able to take this a step further. If it is truly the case that only /B/ is underspecified for voicing at this point in the derivation, then there may be no need for a context for the rule (but there may be a need—see below):

| (23) | Fourth version of rule voicing /B/ to [b] |

| [ ] ⊔ {+VOICED} |

This means “unify every segment that is a superset of the empty set with the set {+VOICED}.” Under our assumption that all segments but /B/ are specified for voicing, the rule will apply vacuously to every other segment, in all contexts.

The interpretation of this rule is the following:

| (24) | Interpretation of rule (23) | |

| Rule (23) is the function that maps any finite string of segments $x_1x_2\cdots x_n$ to the string of segments $y_1y_2\cdots y_n$ such that, for each index $i$ with $1 \le i \le n$: | ||

| • | IF $x_i \cup \{+\text{VOICED}\}$ is consistent, THEN $y_i = x_i \cup \{+\text{VOICED}\}$. | |

| • | OTHERWISE $y_i = x_i$. | |
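The interpretation in (24) can be transcribed almost line by line. This Python sketch assumes a hypothetical toy inventory in which /B/ is the only segment lacking a value for VOICED; a comment marks where the empty target class [ ] makes the superset test trivially true, so every segment is submitted to unification.

```python
from typing import Dict, FrozenSet, List, Tuple

Feature = Tuple[str, str]          # a valued feature such as ("+", "VOICED")
Segment = FrozenSet[Feature]

def seg(*specs: str) -> Segment:
    """Build a segment from strings such as '+VOICED' or '-SYLL'."""
    return frozenset((spec[0], spec[1:]) for spec in specs)

def consistent(s: Segment) -> bool:
    """A set of valued features is consistent unless some feature has both values."""
    names = [name for _, name in s]
    return len(names) == len(set(names))

# Hypothetical inventory in which only /B/ lacks a value for VOICED.
INVENTORY: Dict[str, Segment] = {
    "a": seg("+SYLL", "+VOICED"),
    "p": seg("-SYLL", "+LABIAL", "-VOICED"),
    "b": seg("-SYLL", "+LABIAL", "+VOICED"),
    "B": seg("-SYLL", "+LABIAL"),
    "k": seg("-SYLL", "-LABIAL", "-VOICED"),
}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}
PLUS_VOICED = seg("+VOICED")

def rule_23(xs: List[Segment]) -> List[Segment]:
    """[ ] ⊔ {+VOICED}: y_i = x_i ∪ {+VOICED} if consistent, else y_i = x_i."""
    ys = []
    for x in xs:
        # The target class [ ] is the empty set, a subset of every x_i,
        # so no superset test is needed before attempting unification.
        candidate = x | PLUS_VOICED
        ys.append(candidate if consistent(candidate) else x)
    return ys

def run(word: str) -> str:
    return "".join(SYMBOL[x] for x in rule_23([INVENTORY[c] for c in word]))

for word in ["aBa", "apa", "aba", "Bak"]:
    print(word, "->", run(word))
```

Only tokens of /B/ change; /p/ and /k/ block unification, while /a/ and /b/ unify vacuously, and the output always has the same length as the input.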

Of course, this rule might be ordered at the end of the derivation, after other tokens of underlying /B/ have been assigned a value for VOICED by other rules. Rule (23) will then fill in a default value for the remaining underspecified tokens. In the following discussion this kind of economy will be exploited to varying degrees. I will not provide the interpretations for the rules in the remainder of the paper, since they involve trivial extensions of the examples provided so far.

I have insisted that rules are functions that map any input representation to an output representation. Sometimes an input representation will be mapped to an identical output. This is necessary in order to treat the whole phonology as a composed function. The examples of rule semantics for a unification-based rule and a subtraction-based rule have been formulated in terms of such functions. However, in discussion, it is often convenient to focus on the mapping of target segments from input to output. This expository expedience should not be taken as a rejection of the nature of the rules indicated by the interpretations given above. As shown in Bale & Reiss (2018), rule semantics become more complex once segment insertion and deletion (not the feature insertion and deletion considered here) are considered. This is because it becomes necessary to map between strings of different lengths, and it is not trivial to determine the correspondence between input and output segments in the two strings.

4 Combining the primitives

In this section I provide a schematic SMD using the segment variable symbols ϕ, ψ and Δ along with a description of the corresponding composed rules. In each case a simple toy example or actually attested case is given for concreteness.

4.1 Simple neutralization

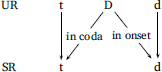

The SMD in (11) showing the partial neutralization of /s/ with /š/ did not take into account the discussion in section 3.3 about the two-step analysis of feature-changing processes. In order to reflect this view, a more complete SMD for simple neutralization would have an extra level as in (25).

| (25) | Explicit SMD for feature-changing neutralization of ϕ and ψ |


As this SMD shows, in some environment, a first subtraction rule deletes the valued feature on ϕ with respect to which ϕ disagrees with ψ. This turns ϕ into Δ (which we are assuming is not present in any URs). In the next step, a unification rule inserts the valued feature which is the opposite of the previously deleted one into the newly created Δ, yielding ψ. This is simple conditioned neutralization of two fully specified underlying segments.

However, I will not generally reiterate in the SMDs all the vacuous mappings or show the inventory at each stage, so a less explicit version of (25), like (26), will sometimes be used.

| (26) | Less explicit SMD for feature-changing neutralization of ϕ and ψ |


I will also represent distinct rules at the same level in an SMD if there is no way to explicitly order them (as in the discussion of Turkish stops below).

4.2 Feature-changing allophony of ϕ and ψ

If a language has a sequence of rules just like that in the previous case, but has no underlying tokens of ψ, then we have a classic case of allophony, with ϕ and ψ in complementary distribution on the surface.

| (27) | Feature-changing allophony |


The only difference from the previous case is that there are no underlying tokens of ψ, and thus there is no identity mapping to surface ψ. The rules would be the same as in the previous case: delete a valued feature from ϕ in certain environments to derive Δ, then insert the opposite value to derive ψ from Δ. This analysis is justified when ψ occurs in a natural class of environments and ϕ does not, so that ϕ is the elsewhere case and must be posited as the underlying form. The SMDs in (26) vs. (27) serve as a visual aid to see that the former differs from the latter just by virtue of having an additional identity mapping, which in turn is a result of having a different set of underlying segments present in the lexicon.

For a concrete example of the relationships and mappings manifested in (27), let’s suppose that Modern Greek [x] contains the specification +BACK. This segment occurs before the back vowels [u,o,a] and before a consonant. Before the front vowels, [i,e], an ‘allophone’, sometimes written [xj] occurs, which we will assume contains the same specifications as [x], except that it is specified –BACK. If we posit an underlying /x/ that surfaces as [xj] before front vowels, our model claims that the mapping from /x/ to [xj] passes through a stage corresponding to a segment we can denote X, underspecified for BACK, but otherwise featurally identical to x and xj.

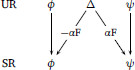

4.3 Feature-filling allophony of ϕ and ψ

In some cases, it is not possible to choose an underlying form based on elsewhere-case reasoning. In other words, it may be clear that ϕ and ψ are in complementary distribution, but it is an arbitrary decision as to which should be posited as underlying. Rather than make an arbitrary choice as a linguist, and rather than attribute an arbitrary choice between extensionally equivalent and equally elegant grammars to the learner, we can posit that a learner encodes an underlying form that is neither ϕ nor ψ, namely Δ. This situation can be called feature-filling allophony, since both surface forms are derived by feature-filling from the UR.

| (28) | Feature-filling allophony of ϕ and ψ |


An example of this situation might be found in the English distribution of laterals. In some English dialects, dark [ɫ] occurs in codas and light [l] occurs in onsets, so both can be derived from a lateral unspecified for one (or more) features. Strictly speaking, the SMD in such a case should have a separate level corresponding to each of the two rules (one for feature-filling in onsets and another in codas), but since it is not possible to determine the mutual ordering of the two rules, we’ll maintain the simple SMD of (28).

4.4 Absolute neutralization

If we flip the SMD for feature-changing allophony (27) upside down, we get a situation that initially looks implausible. There are two underlying segments, but only a single surface correspondent. This SMD models the logical structure of feature-changing absolute neutralization, which potentially corresponds to situations like the harmonically irregular vowels of Hungarian, such as the [iː] in híd ‘bridge’, which takes back vowel suffixes as in dative hídnak, as opposed to the regular víz ‘water’ with dative víznek.

| (29) | Feature-changing absolute neutralization of ϕ and ψ with Hungarian parallel | |


| • | Rules: A subtraction rule for ψ-to-Δ and a unification rule for Δ-to-ϕ | |

The SMD is just like that of feature-changing neutralization in (26), aside from the lack of an identity mapping for ψ. In other words, the SMD represents the old idea that the harmonically irregular vowel is the +BACK version of /i/, namely /ɨ/. The vowel /ɨ/ triggers harmony as expected, then a subtraction rule deletes the feature +BACK from it, and a unification rule inserts –BACK into the derived, underspecified vowel. What we gain by this discussion—what makes it go beyond just restating an old idea—is the demonstration that, despite our pre-theoretical prejudice against abstractness, such a solution is not formally complex, and it uses machinery that is needed for other phenomena. The theory predicts the existence of such cases, and formalization of this sort helps us overcome intuitive biases. Of course, the facts of Hungarian are more complex than I have indicated here, as discussed by Törkenczy et al. (2013). A question for further research is whether the formal innovations presented here can help us generate new analyses of the full range of Hungarian patterns.

4.5 Three-to-two feature-filling mapping

The next pattern is just like feature-filling allophony (28) but with the addition of ϕ and ψ in the lexicon (and thus in URs). As we see in (30), all three segments ϕ, ψ and Δ are present in URs. Feature-filling rules for each context fill in either +F or –F to derive ϕ and ψ, but Δ never surfaces.

| (30) | Three-to-two feature-filling mapping | |


| • | Rules: Two unification rules—one for Δ-to-ϕ and one for Δ-to-ψ | |

This structure corresponds to Inkelas and Orgun’s (1995) analysis of Turkish, where it is necessary to posit a three-way contrast in root final stops, even though there are only two stops on the surface. Some roots like sanat ‘art’ have non-alternating [t] as the final segment, so the lexical form can be posited with a /t/. Others have non-alternating [d], as in etüd ‘étude’, so the lexical form has /d/, for at least some speakers, according to Öner Özçelik (p.c.). For roots with surface forms like kanat-/kanad- we can posit a final /D/, a coronal stop with no specification for VOICED. In such roots, the /D/ surfaces as [d] in onsets, as in kanadım, and as [t] in codas, as in kanatlar.

| (31) | Turkish stops | |

| a. | Non-alternating voiceless: –VOICED /t/ | |

| [sanat] ‘art’, [sanatlar] ‘art-plural’, [sanatɯm] ‘art-1sg.poss’ | ||

| b. | Non-alternating voiced: +VOICED /d/ | |

| [etyd] ‘etude’, [etydler] ‘etude-plural’, [etydym] ‘etude-1sg.poss’ | ||

| c. | Alternating: (no specification for VOICED) /D/ | |

| [kanat] ‘wing’, [kanatlar] ‘wing-plural’, [kanadɯm] ‘wing-1sg.poss’ | ||

For Turkish, we need one feature-filling unification rule to insert +VOICED in onsets, affecting only /D/; and we need another feature-filling unification rule to insert –VOICED in codas, again affecting only /D/. To reiterate an important point, formalization allows us to see how this pattern is just a combination of what we needed for the English /L/ analysis with the addition of the identity mappings we have also made use of.
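A possible sketch of the two Turkish feature-filling rules, assuming forms are syllabified by hand with '.' marking syllable boundaries (no syllabification algorithm is modelled here) and collapsing every feature except VOICED into a placeholder. The letters y and ɯ follow the transcription in (31). Both rules have the empty target class [ ], so any segment already specified for VOICED passes through by vacuous unification or unification failure; the encoding is hypothetical.

```python
from typing import Dict, FrozenSet, List, Tuple

Feature = Tuple[str, str]
Segment = FrozenSet[Feature]

def spec(base: str, voiced: str = "") -> Segment:
    """Toy segment: a placeholder ('=', base) for all non-voicing features,
    plus an optional VOICED value ('+' or '-')."""
    feats = {("=", base)}
    if voiced:
        feats.add((voiced, "VOICED"))
    return frozenset(feats)

def consistent(s: Segment) -> bool:
    names = [name for _, name in s]
    return len(names) == len(set(names))

INVENTORY: Dict[str, Segment] = {
    "t": spec("T", "-"), "d": spec("T", "+"), "D": spec("T"),   # /D/ lacks VOICED
    "k": spec("k", "-"), "s": spec("s", "-"),
    "n": spec("n", "+"), "l": spec("l", "+"), "r": spec("r", "+"), "m": spec("m", "+"),
    "a": spec("a", "+"), "e": spec("e", "+"), "ɯ": spec("ɯ", "+"), "y": spec("y", "+"),
}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}
VOWELS = set("aeɯy")

def positions(form: str) -> List[Tuple[str, str]]:
    """Label each symbol of a hand-syllabified form as onset, nucleus or coda."""
    labelled = []
    for syllable in form.split("."):
        v = next(i for i, c in enumerate(syllable) if c in VOWELS)
        for i, c in enumerate(syllable):
            labelled.append((c, "onset" if i < v else "nucleus" if i == v else "coda"))
    return labelled

def fill_voicing(value: str, where: str):
    """Feature-filling rule [ ] ⊔ {value VOICED} / in syllable position `where`."""
    added = frozenset({(value, "VOICED")})
    def rule(xs):
        out = []
        for x, pos in xs:
            u = x | added
            out.append((u if pos == where and consistent(u) else x, pos))
        return out
    return rule

def derive(form: str) -> str:
    xs = [(INVENTORY[c], pos) for c, pos in positions(form)]
    # The contexts are disjoint, so either order of the two rules gives the same output.
    for rule in (fill_voicing("+", "onset"), fill_voicing("-", "coda")):
        xs = rule(xs)
    return "".join(SYMBOL[x] for x, _ in xs)

for form in ["ka.naD", "ka.naD.lar", "ka.na.Dɯm", "sa.na.tɯm", "e.tyd", "e.ty.dym"]:
    print(form, "->", derive(form))
```

Only /D/ alternates: the fully specified /t/ of sanat and /d/ of etyd survive in both onsets and codas.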

In (32) we repeat the logical structure shown in (30) but with the Turkish segments filled in, along with the environments of the two feature-filling rules responsible for the non-identity mappings.

| (32) | Segment mapping (non-crucial rule ordering ignored here) |


As mentioned above, we assume that all rules are ordered in a grammar, but the two rules here cannot be ordered by the analyst.

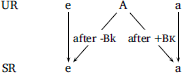

4.6 Three-to-two with α-notation

We now turn to an SMD that has the exact same three-to-two structure of the previous example involving Turkish stops, but where we can make use of a single rule to account for both mappings by introducing α-notation. This SMD is relevant when the environments that determine the mappings Δ-to-ϕ and Δ-to-ψ can be characterized in terms of opposite values of the feature that distinguishes ϕ and ψ.

| (33) | Three segments mapping to two with α-notation |


This SMD models the harmonic alternations of Turkish vowels seen in the plural suffix:

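| (34) | Turkish plurals |

| Trigger vowel | SINGULAR | PLURAL | GLOSS | Suffix form |
|---|---|---|---|---|
| [i] | ip | ipler | ‘rope’ | [ler] |
| [e] | ek | ekler | ‘joint’ | [ler] |
| [y] | gül | güller | ‘rose’ | [ler] |
| [œ] | öç | öçler | ‘revenge’ | [ler] |
| [ɯ] | kıl | kıllar | ‘body hair’ | [lar] |
| [a] | sap | saplar | ‘stalk’ | [lar] |
| [u] | pul | pullar | ‘stamp’ | [lar] |
| [o] | son | sonlar | ‘end’ | [lar] |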

The alternating vowel can be posited to be underlyingly /A/, a non-high, non-round vowel, lacking specification for BACK.

| (35) | Segment mapping with an α rule |


So, there are two diagonal arrows in (35) but they reflect the mapping of a single rule (36) that uses α-notation.

| (36) | [–HIGH, –ROUND] ⊔ {αBACK} / when preceding vowel is [αBACK] |

Application of this rule to a sequence with fully specified /e/ or /a/ in target position will result in vacuous application, either via vacuous unification or unification failure. If /A/ is the target, the rule will fill in a value for BACK according to the context. The SMDs in (32) and (35) show the similar structure of the two cases when we abstract from the details of the particular rules.
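A minimal sketch of rule (36), assuming toy feature values for the eight vowels in (34) plus /A/, and reading "preceding vowel" as the nearest preceding vowel of the input string. α is implemented as a variable bound to the BACK value of that vowel, and the suffix is written /lAr/ as in the text; consonants are opaque placeholders, which is an assumption of the sketch.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Feature = Tuple[str, str]
Segment = FrozenSet[Feature]

def seg(*specs: str) -> Segment:
    return frozenset((spec[0], spec[1:]) for spec in specs)

def consistent(s: Segment) -> bool:
    names = [name for _, name in s]
    return len(names) == len(set(names))

def value(x: Segment, feature: str) -> Optional[str]:
    """The value ('+' or '-') of `feature` in x, or None if x lacks it."""
    return next((v for v, f in x if f == feature), None)

VOWELS: Dict[str, Segment] = {
    "i": seg("+SYLL", "+HIGH", "-ROUND", "-BACK"),
    "e": seg("+SYLL", "-HIGH", "-ROUND", "-BACK"),
    "y": seg("+SYLL", "+HIGH", "+ROUND", "-BACK"),
    "œ": seg("+SYLL", "-HIGH", "+ROUND", "-BACK"),
    "ɯ": seg("+SYLL", "+HIGH", "-ROUND", "+BACK"),
    "a": seg("+SYLL", "-HIGH", "-ROUND", "+BACK"),
    "u": seg("+SYLL", "+HIGH", "+ROUND", "+BACK"),
    "o": seg("+SYLL", "-HIGH", "+ROUND", "+BACK"),
    "A": seg("+SYLL", "-HIGH", "-ROUND"),          # underspecified for BACK
}
# Consonants lack HIGH and ROUND, so they never match the target class.
INVENTORY = {**VOWELS, **{c: frozenset({("=", c), ("-", "SYLL")}) for c in "pkglçsnr"}}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}

def rule_36(xs: List[Segment]) -> List[Segment]:
    """[-HIGH, -ROUND] ⊔ {αBACK} / when preceding vowel is [αBACK]."""
    target = seg("-HIGH", "-ROUND")
    ys: List[Segment] = []
    alpha: Optional[str] = None      # BACK value of the nearest preceding vowel
    for x in xs:
        if target <= x and alpha is not None:
            u = x | {(alpha, "BACK")}
            ys.append(u if consistent(u) else x)   # vacuous or failing on /e/, /a/
        else:
            ys.append(x)
        if ("+", "SYLL") in x:
            alpha = value(x, "BACK")
    return ys

def plural(stem: str) -> str:
    xs = [INVENTORY[c] for c in stem + "lAr"]
    return "".join(SYMBOL[x] for x in rule_36(xs))

for stem in ["ip", "ek", "gyl", "œç", "kɯl", "sap", "pul", "son"]:
    print(stem, "->", plural(stem))
```

A single α rule thus yields both [ler] and [lar], while a fully specified /e/ after a back vowel is left alone by unification failure.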

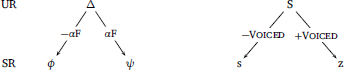

4.7 A predicted allophonic pattern

The examples of the Turkish stops and vowels just given lead to the consideration of similar patterns involving allophony, rather than neutralization. Note that the analysis of the Turkish stop /D/ is parallel to the syllable-structure-based analysis of the English lateral allophones given in section 4.3. It should be possible to have a language like Turkish but without the underlying t and d; in fact, that is the structure of the English lateral pattern. In principle, we should expect that another possible pattern would be an allophonic version of the Turkish vowel pattern, that is, a mapping from an underspecified segment to two allophones via a single rule that uses α-notation. In section 4.3 we saw feature-filling allophony, but we needed two rules, one to generate clear [l] in onset position and another to generate dark [ɫ] in codas, from an underlyingly underspecified /L/.

I haven’t yet found such a case based on α-notation where the value of a feature F in the environment determines the value of F on the two surface allophones. This requires the two surface forms to both occur in a natural class of environments definable by α-notation, with no apparent elsewhere case.8 A hypothetical case would be a language where, say, [s] and [z] occur only as the first member of a cluster, with both derived from underspecified /S/ and the appearance of each dependent on the voicing of the following segment. The SMD would look like this:

| (37) | One segment mapping to two with α-notation |


If we allow cross-featural use of α-notation, then it is possible to find such patterns, for example, the distribution of [l] and [d] in Tswana. According to Odden (2013: 22) in Tswana “there is no contrast between [l] and [d]. Phonetic [l] and [d] are contextually determined variants of a single phoneme: surface [l] appears before nonhigh vowels, and [d] appears before high vowels (neither consonant may come at the end of a word or before another consonant).” Obviously these two segments differ by more than a single feature, but the pattern of interest can be derived by a feature-filling rule that inserts ±LATERAL according to the value for HIGH on the following vowel:

| (38) | [+CORONAL] ⊔ {αLATERAL} / ____ [–αHIGH] |
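Cross-featural α-notation can be sketched the same way: α is bound to the value opposite to the HIGH value of the following segment and then inserted as a value of LATERAL. The strings below are schematic CV sequences rather than Tswana words, and the underspecified /L/ (a coronal lacking LATERAL) and all feature values are illustrative assumptions.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Feature = Tuple[str, str]
Segment = FrozenSet[Feature]

def seg(*specs: str) -> Segment:
    return frozenset((spec[0], spec[1:]) for spec in specs)

def consistent(s: Segment) -> bool:
    names = [name for _, name in s]
    return len(names) == len(set(names))

def value(x: Segment, feature: str) -> Optional[str]:
    return next((v for v, f in x if f == feature), None)

def opposite(v: str) -> str:
    return "-" if v == "+" else "+"

INVENTORY: Dict[str, Segment] = {
    "L": seg("+CORONAL", "+VOICED"),                 # hypothetical /L/: no LATERAL value
    "l": seg("+CORONAL", "+VOICED", "+LATERAL"),
    "d": seg("+CORONAL", "+VOICED", "-LATERAL"),
    "t": seg("+CORONAL", "-VOICED", "-LATERAL"),
    "i": seg("+SYLL", "+HIGH", "-BACK", "-ROUND"),
    "u": seg("+SYLL", "+HIGH", "+BACK", "+ROUND"),
    "e": seg("+SYLL", "-HIGH", "-BACK", "-ROUND"),
    "o": seg("+SYLL", "-HIGH", "+BACK", "+ROUND"),
    "a": seg("+SYLL", "-HIGH", "+BACK", "-ROUND"),
}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}

def rule_38(xs: List[Segment]) -> List[Segment]:
    """[+CORONAL] ⊔ {αLATERAL} / ___ [-αHIGH]."""
    target = seg("+CORONAL")
    ys = []
    for i, x in enumerate(xs):
        high = value(xs[i + 1], "HIGH") if i + 1 < len(xs) else None
        if target <= x and high is not None:
            alpha = opposite(high)      # the context is [-αHIGH], so α is the opposite of HIGH
            u = x | {(alpha, "LATERAL")}
            ys.append(u if consistent(u) else x)
        else:
            ys.append(x)
    return ys

def run(word: str) -> str:
    return "".join(SYMBOL[x] for x in rule_38([INVENTORY[c] for c in word]))

for word in ["La", "Le", "Lo", "Li", "Lu", "ta", "ti"]:
    print(word, "->", run(word))
```

/L/ surfaces as [l] before non-high vowels and as [d] before high vowels, while a fully specified /t/ is unaffected in either context.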

Odden points out that the Tswana surface distribution can be handled by choosing either segment as the underlying form: “There is no evidence to show whether the underlying segment is basically /l/ or /d/ in Tswana, so we would be equally justified in assuming either” a rule that turns /d/ to [l] or a rule that turns /l/ to [d], since “[s]ometimes, a language does not provide enough evidence to allow us to decide which of two (or more) analyses is correct”. Odden is writing here in Chapter 2 of an undergraduate textbook, so he doesn’t mention the third option, suggested here, that neither of the surface forms is identical with the underlying form. It is hard to imagine how allophonic patterns derived from underspecified segments could be excluded in any systematic manner, so the formal approach we have adopted helps us to formulate a topic for empirical exploration—a non-cross-featural α-rule example might turn up any day.9

4.8 Reciprocal neutralization

Another pattern that can be usefully identified using symbols in the logical relationship of ϕ, ψ, Δ is reciprocal neutralization. I apply this term to a situation where there is good evidence to posit an underlying ϕ that surfaces sometimes as ψ, and there is also evidence to posit an underlying ψ that surfaces sometimes as ϕ. In light of our account of feature-changing as a sequence of feature deletion via a rule built from set subtraction followed by insertion via a rule built from unification, the transformation from ϕ to ψ and vice versa is most directly modeled as passing through Δ, as in (39):

| (39) | Schematic reciprocal neutralization |


This generic pattern can be illustrated with separate rules or with a single set of rules using α-notation. In the next two sections, I present a simplified example using α-notation, based on Hungarian voicing assimilation. I then develop this account for a more complete version of voicing assimilation in Hungarian consonant clusters. The SMD for reciprocal neutralization shows that it is just a combination of mappings like allophonic α rules (37) and something like the Turkish vowel harmony rule (36).

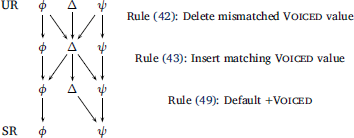

5 Simplified Hungarian Reciprocal Neutralization

Hungarian10 shows patterns of neutralization that can be illustrated with the behavior of /t/ and /d/:

| (40) | Reciprocal neutralization SMD: |


Both /t/ and /d/ surface unchanged in final position, as in kút [kuːt] ‘well’ vs. kád [kaːd] ‘tub’.

| (41) | Reciprocal neutralization in Hungarian |

| Noun | In N | From N | To N | Gloss |
|---|---|---|---|---|
| kuːt | kuːdbɔn | kuːttoːl | kuːtnɔk | ‘well’ |
| kaːd | kaːdbɔn | kaːttoːl | kaːdnɔk | ‘tub’ |
| byːn | byːnben | byːntøːl | byːnnek | ‘crime’ |

However, /t/ undergoes voicing assimilation to a following [b], or other voiced obstruent, and surfaces as [d], as in [kuːdbɔn]. Similarly, /d/ undergoes voicing assimilation to a following [t], or other voiceless obstruent, and surfaces as [t], as in [kaːttoːl]. As illustrated by [byːntøːl] and [kuːtnɔk], sonorants like /n/ neither assimilate nor trigger assimilation.

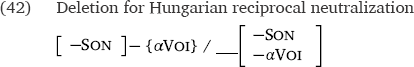

First, I posit a feature deletion rule based on set subtraction, as we have seen above:

This rule deletes the voicing value on an obstruent if the following obstruent has a different value.11 The effect of such a rule is to map tb to Db, for example. The next rule is a feature-filling unification rule:

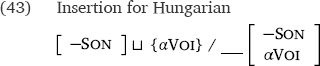

This rule maps Db to db. The same two rules will also map dt to Dt then to tt.
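Rules (42) and (43) are shown as figures that are not reproduced in this text, so the following Python sketch uses a reconstruction from the prose description and should be read as an assumption: (42) as [–SON, αVOICED] – {αVOICED} / ___ [–SON, –αVOICED] and (43) as [–SON] ⊔ {αVOICED} / ___ [–SON, αVOICED]. Features other than SONORANT and VOICED are collapsed into a placeholder, and the forms in (41) are typed without morpheme boundaries.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Feature = Tuple[str, str]
Segment = FrozenSet[Feature]
OBSTRUENT: Feature = ("-", "SON")

def consistent(s: Segment) -> bool:
    names = [name for _, name in s]
    return len(names) == len(set(names))

def voicing(x: Segment) -> Optional[str]:
    return next((v for v, f in x if f == "VOICED"), None)

def opposite(v: str) -> str:
    return "-" if v == "+" else "+"

def obstruent(place: str, voiced: str = "") -> Segment:
    feats = {("=", place), OBSTRUENT}
    if voiced:
        feats.add((voiced, "VOICED"))
    return frozenset(feats)

def sonorant(sym: str) -> Segment:
    return frozenset({("=", sym), ("+", "SON"), ("+", "VOICED")})

INVENTORY: Dict[str, Segment] = {
    "t": obstruent("T", "-"), "d": obstruent("T", "+"), "D": obstruent("T"),
    "k": obstruent("K", "-"), "b": obstruent("P", "+"),
    **{s: sonorant(s) for s in ["n", "l", "ɔ", "e", "uː", "oː", "aː", "yː", "øː"]},
}
SYMBOL = {s: sym for sym, s in INVENTORY.items()}

def tokenize(word: str) -> List[str]:
    tokens: List[str] = []
    for ch in word:
        if ch == "ː" and tokens:
            tokens[-1] += ch          # attach the length mark to the preceding vowel
        else:
            tokens.append(ch)
    return tokens

def rule_42(xs: List[Segment]) -> List[Segment]:
    """Delete αVOICED on an obstruent followed by an obstruent with -αVOICED."""
    ys = list(xs)
    for i in range(len(xs) - 1):
        x, nxt = xs[i], xs[i + 1]
        a = voicing(x)
        if OBSTRUENT in x and OBSTRUENT in nxt and a is not None and voicing(nxt) == opposite(a):
            ys[i] = x - {(a, "VOICED")}
    return ys

def rule_43(xs: List[Segment]) -> List[Segment]:
    """Unify an obstruent with the VOICED value of a following obstruent."""
    ys = list(xs)
    for i in range(len(xs) - 1):
        x, nxt = xs[i], xs[i + 1]
        a = voicing(nxt)
        if OBSTRUENT in x and OBSTRUENT in nxt and a is not None:
            u = x | {(a, "VOICED")}
            if consistent(u):             # otherwise unification fails and x stays
                ys[i] = u
    return ys

def derive(word: str) -> str:
    xs = [INVENTORY[tok] for tok in tokenize(word)]
    return "".join(SYMBOL[x] for x in rule_43(rule_42(xs)))

for word in ["kuːtbɔn", "kuːttoːl", "kuːtnɔk", "kaːdbɔn", "kaːdtoːl", "byːntøːl"]:
    print(word, "->", derive(word))
```

Each rule reads its input string and writes a new one, so the intermediate D of tb ⟿ Db ⟿ db exists only between the two rules.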

We can combine the two rules into a single SMD:12

| (44) | Revised reciprocal neutralization SMD: |


The SMD looks complex, but we have seen all the parts before.

6 Full Hungarian situation

It turns out that there is more to Hungarian voicing assimilation. The consonants written v and h behave sometimes like obstruents such as t and d and sometimes like sonorants such as n, r and l. More specifically, the consonant written v (which we’ll just call v) undergoes voicing assimilation, sometimes surfacing as [f], but it does not trigger voicing assimilation, although it is typically realized as voiced [v]. The consonant written h or ch (which we’ll just call h) triggers voicing assimilation to devoice obstruents, but it does not undergo voicing assimilation: it remains voiceless [x] in codas, even before a voiced obstruent. In onsets, where it cannot undergo voicing assimilation at all, it surfaces as [h].

My goal here is not to delve into the phonetic and diachronic factors that may have led to the synchronic behavior of Hungarian v and h. These are legitimate questions for those interested in the phonetics of sound change, but they are irrelevant to the narrow definition of phonology as mental computation assumed here. My goal is to demonstrate that we already have the means to account for the behavior of these two segments using the kinds of rules developed above, along with two simple assumptions about the two segments in question: (1) v is unspecified for VOICED underlyingly, but is otherwise specified as expected for a segment that alternates on the surface between [f] and [v]; and (2) h is unspecified for CONSONANTAL, but is otherwise specified as expected for a segment that surfaces as a voiceless fricative: it is –VOICED, which allows it to trigger devoicing, but the lack of a value for CONSONANTAL makes it immune to assimilation. I denote these two segments /V/ and /H/, respectively. We’ll work through the treatment of these two ‘exceptional’ segments one at a time.

6.1 Hungarian v

6.1.1 Feature filling from context

Data illustrating the interaction of v with other consonants appears in (45) using the symbol /v/ as in Siptár & Törkenczy (2000).13

| (45) | v is a target of assimilation, but not a trigger | |

| a. | Target: hívsz /vs/ ⟿ [fs] ‘you call’, óvtam /vt/ ⟿ [ft] ‘I protected’; | |

| révbe ‘to port’, bóvli ‘junk’, sav ‘acid’ | ||

| b. | Non-trigger: kvarc /kv/ ⟿ [kv], not *[gv] ‘quartz’; pitvar /tv/ ⟿ [tv], not *[dv] ‘porch’; | |

| medve ‘bear’, olvas ‘read’, kova ‘flint’, vér ‘blood’ | ||

We see in (45a) that Hungarian v surfaces as [f] before a voiceless obstruent, as in óvtam ‘I protected’, where /vt/ ⟿ [ft]. So v apparently acts like other segments such as /z/ that are subject to voicing assimilation. The form sav shows that there is no coda devoicing.

However, if we assume that v is /V/ without an underlying specification for VOICED, then the transformation from /V/ to [f] is a feature-filling process, and thus effected by the unification-based rule (43). The deletion rule (42) does not affect /V/ since there is no VOICED feature to delete. To be clear, all of the input forms in (45) should be rewritten with /V/ instead of /v/ under the assumptions I adopt here. For example, for óvtam, sav, pitvar, instead of /oːvtɔm, sɔv, pitvɔr/ I assume /oːVtɔm, sɔV, pitVɔr/.

The SMD in (46) shows the derivation of /V/ to both [v] and [f] by the same two rules we used in the previous section. Again, the deletion rule (42) applies vacuously, since /V/ has no voicing value. The voicing of the following obstruent is indicated by the environments denoted ‘___p’ vs. ‘___b’. At this point in the derivation, rule (43) fills this value in on the segment corresponding to underlying /V/.

| (46) | v as target (output agrees with following obstruent, e.g., óvtam [ft]) |


The behavior of /V/ is formally identical to other feature-filling processes we have seen, such as the behavior of Turkish /A/ in (35).

Note that turning an underlying /V/ into an [f] involves rule (43). The same rule is responsible for turning D derived from underlying /d/ into a [t] in the right environment. Rule (43) is thus independent of the subtraction rule (42)—the application of (43) does not need to track what happened previously to a form going through a derivation.

Now consider what happens when /V/ is on the right side of a cluster, in the position of a potential trigger for voicing assimilation. A voiceless segment before a v does not get voiced, as we see in (45b). This is because v, lacking a VOICED value, does not trigger deletion of –VOICED by rule (42) in a preceding voiceless obstruent. This rule deletes the voicing value on a segment only if it conflicts with the value on the next segment, and the absence of voicing on v cannot conflict with either +VOICED or –VOICED. The voiceless obstruents that precede v remain specified as voiceless. The subsequent feature-filling rule (43) applies vacuously, both because the preceding obstruent has a value of its own and because there is no value to copy from v. The last two forms in (45b), kova and vér, just show that /V/ surfaces with voicing when it is alone in an onset.

| (47) | SMD for v as a non-trigger (doesn’t trigger voicing agreement with preceding obstruent, as in kvarc) |


The two rules account for the outcome of obstruent clusters which do not contain v; for clusters with v as an affected target in the left side of an obstruent cluster; and for the (unaffected) outcome of other obstruents in clusters with a v on the right side.

6.1.2 A default fill-in rule

The system of two rules developed thus far fails to account for a v that is not to the left of another obstruent. This includes v before a vowel or other sonorant, or at the end of a word. Such a v surfaces with a +VOICED specification, that is, as [v]. A first attempt at a fill-in rule for segments derived from underlying /V/ that have not received a +VOICED specification is given in (48):

| (48) | [ –SON ] ⊔ {+VOICED} (Only remaining V undergoes non-vacuous unification) |

However, this rule can be made even more economical if we assume that at the end of the derivation every segment, consonant or vowel, other than the outputs of underlying prevocalic or word-final /V/’s has a specification for VOICED. If this is so, then the unification rule in (48) can be generalized by removing the stipulation that it apply to obstruents:

| (49) | [ ] ⊔ {+VOICED} (Unify every segment with {+VOICED}) |

See the discussion around the rule in (22) to confirm that the effect of rule (49) is to unify every segment that is a superset of the empty set (that is, every segment) with the set {+VOICED}. The possibility of unification failure enables such economical rule formulation.

6.1.3 It is what it is

Why is v actually /V/, a segment underspecified for voicing? Or rather, why do we posit that it is the lack of the feature VOICED that makes v act ‘strange’? On one hand, that is not a phonological question. In particular, it is not a phonological question about /v/, which is the symbol for a +VOICED labiodental fricative. Under my analysis, Hungarian has no /v/. It has surfacing [v] from underlying /V/. The analysis here parallels my analysis of Russian v also as /V/ (Reiss 2017a), but as mentioned above, the phonetics of why labiodental fricatives seem to engage in ‘exceptional’ behavior cross-linguistically with respect to voicing interactions is not in the purview of a paper on phonological computation. The model of segments as sets of features adopted here allows for underspecification (and this is actually a simplification of phonology, as I have explained elsewhere (Reiss 2012; Matamoros & Reiss 2016; Bale & Reiss 2018)), so there is no ‘cost’ for modeling Hungarian v as /V/. Since it is exactly the feature VOICED with respect to which v acts exceptionally, it is not surprising that it is the absence of this feature which, I suggest, distinguishes v from other obstruents.

Other approaches to the behavior of v, in both Russian and Hungarian, have suggested that the segment in question is not specified as an obstruent. For example Siptár & Törkenczy (2000), in a thorough discussion of the data and an analysis combining aspects of feature geometry and Government Phonology, treat v as underspecified for the feature SONORANT. Given that the two realizations of v are clearly the fricatives [v] and [f], it seems reasonable to attempt to treat the underlying segment from which these obstruents are derived as specified –SONORANT, i.e., as an obstruent.14 So, from this perspective (adopting certain idealizations), “Why /V/?” is a purely phonological question, and the answer is that positing this segment for v gives us an elegant account of Hungarian that can be constructed without extending the model of segmental changes we developed for the many other patterns of phonological processes illustrated above.

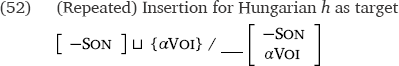

It may be useful to show graphically that in developing an account of Hungarian our model has not gotten any more complicated than what we used to account for the various languages above. In order to present an SMD for Hungarian, we will adjust our interpretation of the symbols ϕ, ψ, Δ. Instead of assuming that the three are identical aside from a single feature specification, let’s abstract away from all features but one. In other words, let ϕ be any voiceless obstruent, [p, t, s], etc.; let ψ be any voiced obstruent, [b, d, z], etc.; and let Δ be any obstruent unspecified for voicing, such as /V/. The following SMD shows how the rule system we developed handles these three types of segment, wherever they occur, as first or second member of an obstruent cluster or elsewhere.

| (50) | SMD for ‘normal’ obstruents and /V/ in Hungarian |


The first α rule deletes the first in a sequence of two incompatible voicing features. The next α rule inserts into the first consonant the value of voicing on the second consonant, if the first lacks a value for that feature. The third rule is the default +VOICED fill-in rule.

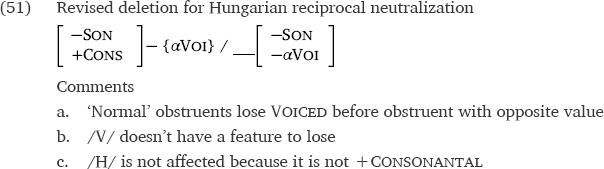

6.2 Hungarian h

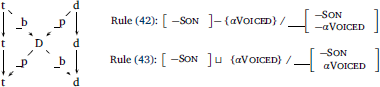

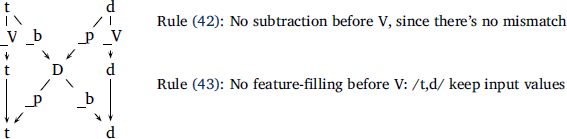

Two simple stipulations are needed to extend the analysis of Hungarian to the underlying consonant surfacing as a voiceless glottal fricative [h] in onsets, written h, and a voiceless velar fricative [x], also written h, or sometimes ch, in codas.15 Recall that we will denote this segment /H/ and assume that it lacks a specification for CONSONANTAL.16 There must be something about the featural make-up of this segment that allows it to cause devoicing of preceding obstruents, as in adhat /ɔd-Hɔt/ ‘he may give’ ⟿ [ɔthɔt], without allowing the h itself to undergo assimilation as a target, as seen in pechből /peH-bø:l/ ‘out of bad luck’ where /Hb/ ⟿ [xb]. We achieve this effect by (a) slightly revising the feature deletion rule (42) to make reference to the valued feature +CONSONANTAL; and (b) by accepting that h is underlying /H/, a segment that has all the features of [h] aside from CONSONANTAL. Here’s the revised version of (42):

Rule (51) will not delete voicing on /V/ because /V/ has no voicing value. Nor will it delete voicing on /H/, because the rule requires the target to be +CONSONANTAL, and /H/, by hypothesis, has no value for CONSONANTAL.

Since /H/ does not lose its –VOICED specification under rule (51), the unification rule (43), repeated as (52), which is responsible for inserting a VOICED value, applies vacuously when the reflex of /H/ is the first member of the cluster: either the following segment is also voiceless, so unification is vacuous, or the following segment is voiced, so unification fails.
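The effect of the +CONSONANTAL condition in (51), and the behavior of (52) when /H/ is the first member of a cluster, can be sketched in a few lines of Python. This is a sketch under assumptions: the rules are coded from their prose descriptions rather than from their formal statements, the feature bundles are simplified, and LAB, COR, DOR and CONT are hypothetical placeholders standing in for all other features.

```python
# Minimal sketch of rules (51) and (52) as described in the prose.
# A valued feature is a (value, feature) pair; a segment is a frozenset.
# LAB/COR/DOR/CONT are hypothetical placeholders for "all other features".
t = frozenset({("-", "VOI"), ("+", "CONS"), ("+", "COR")})
d = frozenset({("+", "VOI"), ("+", "CONS"), ("+", "COR")})
p = frozenset({("-", "VOI"), ("+", "CONS"), ("+", "LAB")})
b = frozenset({("+", "VOI"), ("+", "CONS"), ("+", "LAB")})
V = frozenset({("+", "CONS"), ("+", "LAB"), ("+", "CONT")})  # no VOI value
H = frozenset({("-", "VOI"), ("+", "DOR"), ("+", "CONT")})   # no CONS value


def opposite(vf):
    value, feature = vf
    return ("-" if value == "+" else "+", feature)


def unify(segment, features):
    """Union if consistent; on a value conflict, return the input unchanged."""
    if any(opposite(vf) in segment for vf in features):
        return segment
    return segment | features


def voicing(segment):
    return frozenset(vf for vf in segment if vf[1] == "VOI")


def rule_51(c1, c2):
    """Delete C1's voicing value if C1 is +CONS and C2 has the opposite value."""
    if ("+", "CONS") in c1 and any(opposite(vf) in c2 for vf in voicing(c1)):
        return c1 - voicing(c1), c2
    return c1, c2


def rule_52(c1, c2):
    """Unify C1 with C2's voicing value (a no-op if C2 has none)."""
    return unify(c1, voicing(c2)), c2


for label, (c1, c2) in {"Hp": (H, p), "Hb": (H, b), "Vb": (V, b), "dH": (d, H)}.items():
    out1, _ = rule_52(*rule_51(c1, c2))
    print(label, "first member unchanged" if out1 == c1 else "first member changed")
```

In /Hp/ the call to `rule_52` is a vacuous unification and in /Hb/ it is a unification failure, so /H/ survives both with its –VOICED value, whereas /d/ before /H/ loses +VOICED and then acquires –VOICED.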

The slightly revised deletion rule (51) is not quite the only change needed to account for clusters containing v and h. Underlying /H/ also needs some fill-in rules, assuming that its reflexes are fully specified at the surface. If we assume that /H/ is the only source of segments without a value for CONSONANTAL near the end of the derivation, then we can assign +CONSONANTAL in coda position where the surface form is [x] with rule (53):

| (53) | [ ] ⊔ {+CONS}/ in CODA (Only ‘H’ undergoes non-vacuous unification) |

In onset position, we can follow the SPE (Chomsky & Halle 1968) tradition of treating [h] as –CONSONANTAL:

| (54) | [ ] ⊔ {–CONS}/ in ONSET (Only ‘H’ undergoes non-vacuous unification) |

Let’s (arbitrarily) assume that feature filling for CONSONANTAL in onsets is ordered after filling for codas. We can then remove the rule context and formulate a more general rule that extensionally only applies to the reflex of /H/ in onsets:

| (55) | [ ] ⊔ {–CONS} (Only ‘H’ undergoes non-vacuous unification) |

Alternatively, we could leave h in onset unspecified for CONSONANTAL at the surface, as in the cases of surface underspecification presented by Keating (1988).17
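A minimal Python sketch of the two CONSONANTAL fill-in rules, (53) for codas and the context-free (55), shows why their ordering matters once the context of (54) is dropped: if (55) applied first, coda /H/ would become –CONSONANTAL and (53) would then fail. The representation (frozensets of (value, feature) pairs), the helper names and the placeholder features are assumptions made for illustration.

```python
# Sketch of the CONSONANTAL fill-in rules (53) and (55).
# /H/ lacks CONS; DOR, COR and CONT are hypothetical placeholders.
H = frozenset({("-", "VOI"), ("+", "DOR"), ("+", "CONT")})
t = frozenset({("-", "VOI"), ("+", "CONS"), ("+", "COR")})
PLUS_CONS = frozenset({("+", "CONS")})
MINUS_CONS = frozenset({("-", "CONS")})


def opposite(vf):
    value, feature = vf
    return ("-" if value == "+" else "+", feature)


def unify(segment, features):
    """Union if consistent; on a value conflict, return the input unchanged."""
    if any(opposite(vf) in segment for vf in features):
        return segment
    return segment | features


def rule_53(segs, positions):
    """Unify {+CONS} with every segment in a coda."""
    return [unify(s, PLUS_CONS) if pos == "coda" else s
            for s, pos in zip(segs, positions)]


def rule_55(segs, positions):
    """Context-free: unify {-CONS} with every segment."""
    return [unify(s, MINUS_CONS) for s in segs]


def fill_cons(segs, positions, rules=(rule_53, rule_55)):
    for rule in rules:
        segs = rule(segs, positions)
    return segs


print(("+", "CONS") in fill_cons([H], ["coda"])[0])   # coda /H/ becomes +CONS, i.e. [x]
print(("-", "CONS") in fill_cons([H], ["onset"])[0])  # onset /H/ becomes -CONS, i.e. [h]
```

Passing `rules=(rule_55, rule_53)` reverses the order; coda /H/ then ends up –CONSONANTAL, which is the wrong result for [x].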

6.3 Hungarian cluster derivations

Recall that the focus of this paper is on changes within segments. In order to illustrate the logic of our set-theoretic approach, we have made several simplifications. For example, we have only considered clusters of two consonants, but Hungarian has clusters of three consonants, with the rightmost member determining the outcome of the whole cluster, so that voicing assimilation appears to work iteratively from right to left, e.g., liszt-ből [stb] ⟿ [sdb] ⟿ [zdb] ‘from flour’. There are alternative analyses, but choosing among them raises questions about how rules apply and how rule environments are stated, and I leave these questions aside for now. Here, I give derivations for schematic cases of sequences of one or two consonants, where each member of the sequence is either one of the ‘regular’ obstruents or one of the two ‘exceptional’ obstruents corresponding to v and h. In other words, all of the segments in these sequences are specified –SONORANT.

Let’s first consider the clusters of fully specified segments, corresponding to the discussion of simplified Hungarian in section 5. The derivations are given in (57), using abbreviations that I now explain and that I also use in the subsequent tables.

In the tables below (57)–(60), a dash ‘–’ indicates, as is traditional, an identity mapping from the next cell up in the table, when the structural description of a rule is not met. For example, underlying /tp/ is unchanged by the first rule (51), since the segments do not disagree in voicing. The rule conditions are not met and this is denoted with the en-dash ‘–’.

In the same column, the row corresponding to the rule (52) that potentially inserts a voicing value is marked with ‘vac.’, indicating the vacuous unification of /t/ with the set {–VOICED} coming from /p/.

The next cell down is marked ‘un.fail’ for unification failure. This is the row corresponding to the default rule (49) that fills in +VOICED. Unification of /t/ (and /p/) with {+VOICED} fails because of the conflict of –VOICED with +VOICED. Since unification fails, we again map the input to the output. Thus we have three different situations that result in vacuous rule application, but I have marked them differently:

| (56) | Three kinds of vacuous rule application: |

| a. | ‘–’: Input string does not match the rule’s structural description, so the target segment is unaffected |

| b. | ‘vac.’: Unification is vacuous, so rule application does not affect the target segment |

| c. | ‘un.fail’: Unification fails because of a feature value conflict, so (by definition) the input representation is mapped to an identical output representation |

For reasons discussed in Bale & Reiss (2018), I call all three of these situations “vacuous rule application” rather than, for example, saying that the rule does not apply in situation (56a). In brief, if we want the phonology to be a function composed from the individual rules, each rule must ‘apply’ to each sequence, so that there is an output that can be handed to the subsequent rules, or ultimately to the surface representation. In cells with two notations for vacuous rule application, like vac./–, each notation describes the result for a different segment in the cluster.
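The three kinds of vacuous application in (56) can be told apart mechanically. In the following Python sketch, a hypothetical helper `apply_unification` returns the output together with the notation used in the tables. Every branch returns an output, which is what allows the rules to be composed as functions. The minimal feature bundles for /t/ and derived /D/ are simplifications assumed for illustration.

```python
DASH = "\u2013"  # the en dash used in the derivation tables


def opposite(vf):
    value, feature = vf
    return ("-" if value == "+" else "+", feature)


def apply_unification(segment, features, matches_sd=True):
    """Return (output, notation); notation is one of the three labels of (56),
    or None when the rule actually changes the segment."""
    if not matches_sd:
        return segment, DASH              # (56a) structural description not met
    if any(opposite(vf) in segment for vf in features):
        return segment, "un.fail"         # (56c) value conflict: identity mapping
    if features <= segment:
        return segment, "vac."            # (56b) nothing new to add
    return segment | features, None       # non-vacuous application


t = frozenset({("-", "VOI"), ("+", "CONS")})  # hypothetical minimal /t/
D = frozenset({("+", "CONS")})                # derived /D/, no VOI value
PLUS_VOI = frozenset({("+", "VOI")})
MINUS_VOI = frozenset({("-", "VOI")})

print(apply_unification(t, MINUS_VOI)[1])                   # like (52) on /tp/
print(apply_unification(t, PLUS_VOI)[1])                    # like (49) on /tp/
print(apply_unification(t, PLUS_VOI, matches_sd=False)[1])  # description not met
print(apply_unification(D, PLUS_VOI)[1])                    # /D/ actually changes
```

In the first three calls the returned segment is identical to the input, and only the label records why.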

Here are the derivations for clusters of fully specified segments:

| (57) | Derivations for Hungarian fully specified sequences |

| UR | tp | tb | dp | db |
|---|---|---|---|---|
| (51) Delete –αVOI | – | Db | Dp | – |
| (52) Insert αVOI | vac. | db | tp | vac. |
| (49) Fill +VOI | un.fail | vac. | un.fail | vac. |
| (53) Fill +CONS CODA | vac./– | vac./– | vac./– | vac./– |
| (55) Fill –CONS | un.fail | un.fail | un.fail | un.fail |
| SR | tp | db | tp | db |

Since the examples in (57) involve only fully specified segments after the application of rule (52), the last three rules, which are all fill-in rules, can have no effect on their inputs. The situation changes as we consider inputs with underspecified segments. Let’s first consider derivations whose inputs contain /V/ as the first segment of an obstruent cluster (/Vp/ and /Vb/), as the second segment of an obstruent cluster (/tV/ and /dV/), or elsewhere (/V/).

| (58) | Derivations for Hungarian sequences with /V/ |

| UR | Vp | Vb | tV | dV | V |
|---|---|---|---|---|---|
| (51) Delete –αVOI | – | – | – | – | – |
| (52) Insert αVOI | fp | vb | – | – | – |
| (49) Fill +VOI | un.fail | vac. | tv | dv | v |
| (53) Fill +CONS CODA | vac./– | vac./– | vac./– | vac./– | vac./– |
| (55) Fill –CONS | un.fail | un.fail | un.fail | un.fail | un.fail |
| SR | fp | vb | tv | dv | v |

None of the clusters or the single consonant /V/ meet the conditions for the first rule (51), which looks for a mismatch in voicing values on adjacent obstruents, so we have ‘–’ across the row. However, the feature filling rule (52) in the second row provides underlying /V/ with a voicing value that agrees with the following obstruent, so we get [f] before [p] and [v] before [b].

The next row corresponds to rule (49) which fills in +VOI. In the first column of inputs, /V/ has turned into [f] so unification fails; in the second column, /V/ has turned into [v], so unification is vacuous. In the last three forms, /V/ is unchanged from the UR, so the fill-in rule provides +VOICED and we get [v] in all three cases.

Consider the next row, corresponding to rule (53), which fills in +CONSONANTAL in codas. All of the consonants in the five columns are specified +CONSONANTAL (already in the UR), so unification is vacuous when one of them falls in a coda. When a consonant is in an onset, the rule condition is not met. This is why all five columns are marked with both ‘vac.’ and ‘–’.

Finally, in the last row, rule (55) ‘tries’ to fill in –CONSONANTAL on reflexes of the input consonants, but they have remained +CONSONANTAL since the underlying representation. Unification fails in each case, as indicated, and the surface representations all come out as desired.

Now we turn to the derivation of forms with /H/ in the input. We consider /H/ as the first or second member of a cluster between vowels, so in coda or onset position, with the other segment either voiceless or voiced. We also need to consider /H/ on its own, not adjacent to another obstruent, in both onset and coda position.

| (59) | Derivations for Hungarian sequences with /H/ |

| UR | Hp | Hb | tH | dH | H-ons | H-cod |
|---|---|---|---|---|---|---|
| (51) Delete –αVOI | – | – | – | DH | – | – |
| (52) Insert αVOI | vac. | un.fail | vac. | tH | – | – |
| (49) Fill +VOI | un.fail | un.fail | un.fail | un.fail | un.fail | un.fail |
| (53) Fill +CONS CODA | xp | xb | vac./– | vac./– | – | x |
| (55) Fill –CONS | un.fail | un.fail | th | th | h | un.fail |
| SR | xp | xb | th | th | h | x |

Because rule (51), the first rule, requires that the left-hand member of an input sequence be specified +CONSONANTAL, this condition is not met when /H/ precedes another obstruent, so we get ‘–’ in the first two columns. We get ‘–’ in the third column because /t/ and /H/ do not disagree in voicing, since both are –VOICED. In the next column, the voicing mismatch of /d/ with following /H/ causes the deletion of +VOICED on the former, yielding derived underspecified [D]. The last two columns do not contain sequences of obstruents, so the rule condition is not met.

The only non-vacuous application in the next row, for the rule (52) that copies a voicing value from the right-hand member of a sequence to the left, is the case where derived [D] becomes [t] before the voiceless /H/.

At this point in the derivation, all segments in this table are specified as either +VOICED or –VOICED, so the default rule that fills in +VOICED will always apply vacuously. I have marked this as ‘un.fail’ in each column, since there is unification failure with at least one segment in each case; in the second column, however, there is not only unification failure with the reflex of /H/ but also vacuous unification with the reflex of /b/, which is still voiced.

In the next row, rule (53) fills in +CONSONANTAL on each /H/ in coda position, yielding [x] (since we are ignoring the glottal vs. velar contrast). For the forms in the third and fourth columns, derived from /tH/ and /dH/, respectively, the reflex of /H/ is not in a coda, so we mark ‘–’; but the rule as formulated also targets the underlying and derived [t]’s in coda position. These are already +CONSONANTAL, so unification with them is vacuous, which is marked ‘vac.’

The next row shows that any remaining /H/ becomes –CONSONANTAL at this point in the derivation, but for all other consonants, which are +CONSONANTAL, there is unification failure.

Finally, let’s show derivations for clusters /HV/ and /VH/:

| (60) | Derivations for Hungarian sequences /HV/ and /VH/ |

| UR | HV | VH |
|---|---|---|
| (51) Delete –αVOI | – | – |
| (52) Insert αVOI | – | fH |
| (49) Fill +VOI | Hv | un.fail |
| (53) Fill +CONS CODA | xv | vac./– |
| (55) Fill –CONS | un.fail | fh |
| SR | xv | fh |

For the second column, forms like szívhez ‘to heart’, with /VH/ ⟿ [fh], confirm that our rules work for this cluster. Finding the output for /HV/ is complicated by the fact that Hungarian has two kinds of suffixes that begin with v. In some v-initial suffixes, the v assimilates fully to a preceding consonant; in the others, it does not. The non-assimilating v is the one we are interested in. This distinction could be due to a featural difference between the two v’s or, as Siptár & Törkenczy (2000) assume, to a structural difference in the words containing the two kinds of suffixes. Unfortunately, it happens that the suffixes with non-assimilating v do not attach to any stems ending in /H/. For example, the va/ve suffix attaches to verb roots, but there are no verbs ending in /H/. Thus the predicted output [xv] is never observed in suffixed forms. Péter Siptár (p.c.), a native-speaker phonologist, suggests that the expected outcome of the relevant sequence in a wug-test situation would indeed be [xv].18 Fortunately, Hungarian voicing assimilation is postlexical (see fn. 22, p. 198 of Siptár & Törkenczy 2000), so it applies not only between a root and a suffix but also across compound and word boundaries, where relevant cases can be found. Just as the inflected form szívhez parallels the compound tév-hit ‘misbelief’, where both show /VH/ ⟿ [fh], the accidental gap identified above is predicted to parallel compounds like potroh-végi ‘caudal’, with /HV/ surfacing as [xv]. Since the process is postlexical, there is in fact no real data gap. So the system I have constructed appears to work for this cluster as well.
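The SR rows of (57)–(60) can also be checked mechanically. Below is a minimal Python sketch that chains the five rules in the order used in the tables. It is an illustration under stated assumptions, not a reproduction of the rule notation used here: the rules are coded from their prose descriptions; LAB, COR, DOR and CONT are hypothetical placeholder features that only keep the symbols apart; and each two-consonant cluster is assumed to sit between vowels, so that its first member is in a coda and its second in an onset.

```python
# Hypothetical feature bundles: VOI and CONS follow the text; LAB, COR, DOR
# and CONT are placeholders that only keep the symbols distinct.
P, M = "+", "-"


def seg(*valued):
    return frozenset(valued)


INVENTORY = {
    "p": seg((M, "VOI"), (P, "CONS"), (P, "LAB"), (M, "CONT")),
    "b": seg((P, "VOI"), (P, "CONS"), (P, "LAB"), (M, "CONT")),
    "t": seg((M, "VOI"), (P, "CONS"), (P, "COR"), (M, "CONT")),
    "d": seg((P, "VOI"), (P, "CONS"), (P, "COR"), (M, "CONT")),
    "D": seg((P, "CONS"), (P, "COR"), (M, "CONT")),             # no VOI
    "f": seg((M, "VOI"), (P, "CONS"), (P, "LAB"), (P, "CONT")),
    "v": seg((P, "VOI"), (P, "CONS"), (P, "LAB"), (P, "CONT")),
    "V": seg((P, "CONS"), (P, "LAB"), (P, "CONT")),             # no VOI
    "x": seg((M, "VOI"), (P, "CONS"), (P, "DOR"), (P, "CONT")),
    "h": seg((M, "VOI"), (M, "CONS"), (P, "DOR"), (P, "CONT")),
    "H": seg((M, "VOI"), (P, "DOR"), (P, "CONT")),              # no CONS
}
SYMBOL = {feats: sym for sym, feats in INVENTORY.items()}


def opposite(vf):
    return (M if vf[0] == P else P, vf[1])


def unify(s, feats):
    # Failure (any value conflict) maps the input to itself.
    return s if any(opposite(vf) in s for vf in feats) else s | feats


def voicing(s):
    return frozenset(vf for vf in s if vf[1] == "VOI")


def rule_51(segs, pos):
    # Delete C1's voicing if C1 is +CONS and C2 has the opposite value.
    # (All segments here are obstruents, so -SON is not checked.)
    if len(segs) == 2:
        c1, c2 = segs
        if (P, "CONS") in c1 and any(opposite(vf) in c2 for vf in voicing(c1)):
            return [c1 - voicing(c1), c2]
    return segs


def rule_52(segs, pos):
    # Unify C1 with C2's voicing value (a no-op if C2 has none).
    if len(segs) == 2:
        return [unify(segs[0], voicing(segs[1])), segs[1]]
    return segs


def rule_49(segs, pos):
    return [unify(s, seg((P, "VOI"))) for s in segs]


def rule_53(segs, pos):
    return [unify(s, seg((P, "CONS"))) if where == "coda" else s
            for s, where in zip(segs, pos)]


def rule_55(segs, pos):
    return [unify(s, seg((M, "CONS"))) for s in segs]


RULES = (rule_51, rule_52, rule_49, rule_53, rule_55)  # order of (57)-(60)


def derive(ur, pos=("coda", "onset")):
    """Map a UR string to its SR string; a two-consonant cluster between
    vowels is syllabified coda + onset by default."""
    segs = [INVENTORY[c] for c in ur]
    for rule in RULES:
        segs = rule(segs, pos)
    return "".join(SYMBOL[s] for s in segs)


for ur in ["tp", "tb", "dp", "db", "Vp", "Vb", "tV", "dV", "Hp", "Hb", "tH", "dH", "HV", "VH"]:
    print(ur, "->", derive(ur))
print("H (onset) ->", derive("H", ("onset",)), "; H (coda) ->", derive("H", ("coda",)))
```

A single consonant needs its position passed explicitly, e.g. `derive("V", ("onset",))`. Nothing in the sketch goes beyond subtraction and unification of sets of valued features.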

7 Conclusions

The epigraph to this paper, “We learn not to worry about purpose, because such worries never lead to the sort of delight we seek”, signals that this work is the antithesis of the program urged by Prince & Smolensky (1993: 216) in a founding document of Optimality Theory: “We urge a reassessment of this essentially formalist position. If phonology is separated from the principles of well-formedness (the ‘laws’) that drive it, the resulting loss of constraint and theoretical depth will mark a major defeat for the enterprise.” The framework presented here is purely formal and has no teleology or notion of well-formedness. Phonology is not trying to optimize anything at all, to repair or block marked structures, to find “cures” for “conditions” (Yip 1988), or to manage “trade-offs” between ease of articulation and maintenance of meaning contrasts (contra, for example, the papers in Hayes et al. 2004).

Instead, the Logical Phonology approach I assume here (developing work such as Bale et al. 2014; Bale & Reiss 2018; Bale et al. 2020; Volenec & Reiss 2020) posits a set of representational primitives, such as binary features and α variables, as well as a set of computational primitives that are organized into rules that do things like subtract or unify sets of valued features. Phonology, in this conception, involves abstracting away from observed phonological patterns the contingencies of long chains of historical events in the course of transmission across generations (as discussed by Blevins 2003; Hale 2007; Hale & Reiss 2008, among others). Rather than seeing this as an original idea, I take it to be the normal method of science, “the natural approach: to abstract from the welter of descriptive complexity certain general principles governing computation that would allow the rules of a particular language to be given in very simple forms, with restricted variety” (Chomsky 2000: 122). This is exactly what I have tried to do in this paper by deriving superficial variety from a few basic notions.