1 Introduction

One aspect of linguistic knowledge is arbitrary associations between words and the patterns of word formation that they follow. For example, Hungarian speakers know that the words [paːr] ‘pair’ and [kaːr] ‘damage’ arbitrarily take distinct possessive suffixes, which I call -jV ([paːr-jɒ]) and -V ([kaːr-ɒ]). Speakers can also extend patterns productively: given a novel noun, like the English borrowing [baːr] ‘bar’, speakers use one of the existing suffixes to form its possessive (in this case, [baːr-jɒ]). This productivity is the subject of this paper: What patterns have speakers learned about their language? How do they generalize the arbitrary patterns of known lexical items to unknown ones?

Previous work on morphological productivity has focused on phonological factors: for example, Hungarian nouns ending in alveolar plosives, like [gaːt] ‘dam’, are more likely to take -jV ([ɡaːt-jɒ]) than those ending in velar plosives, like [fok] ‘degree’, whose possessive is [fok-ɒ] (Rácz & Rebrus 2012). In this paper, I focus on another type of generalization: correlations between arbitrary associations of lexical item and pattern (which I call morphological dependencies). While [paːr] and [kaːr], like most words, take the plural suffix -ok, a small number of words like [ɟaːr] ‘factory’ instead have plural -ɒk; nouns with this plural greatly prefer -V (e.g. [ɟaːr-ɒ]). These correlations drive the organization of complex morphological systems (see e.g. Wurzel 2001; Finkel & Stump 2007; Halle & Marantz 2008; Ackerman et al. 2009; Ackerman & Malouf 2013), but speakers’ knowledge of them has not been systematically tested. In a nonce word study, I find that speakers learn and productively apply the morphological dependency between plural and possessive alongside phonological generalizations about the distribution of possessives. I show that theoretical tools used to capture speakers’ knowledge of phonological generalizations can neatly be applied to morphological dependencies as well, and that these tools complement a syntactic, piece-based approach to morphological derivations, which has previously been criticized for ignoring morphological dependencies.

1.1 How to infer unknown forms

Linguists typically test speakers’ productive use of lexical patterns with nonce word studies, in which speakers are asked to inflect a made-up word; since they cannot have stored associations between these words and the patterns they take, they must fall back on broader generalizations. When tested on lexically variable patterns through nonce word studies (e.g. Albright & Hayes 2003; Ernestus & Baayen 2003; Gouskova et al. 2015) and artificial language studies (e.g. Hudson Kam & Newport 2005), adults usually follow what Hayes et al. (2009) call the Law of Frequency Matching:

- (1)

- Law of Frequency Matching (Hayes et al. 2009: 826)

- Speakers of languages with variable lexical patterns respond stochastically when tested on such patterns. Their responses aggregately match the lexical frequencies.
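A small simulation may make the prediction in (1) concrete. The sketch below assumes a hypothetical lexical rate of 0.7 for -jV among real words resembling some nonce word (an illustrative number, not a corpus figure) and lets each simulated response independently choose -jV with that probability; the function name `simulate_responses` is likewise illustrative.

```python
import random


def simulate_responses(lexical_rate_j, n_trials, seed=0):
    """Simulate stochastic suffix choices under frequency matching.

    Each trial independently picks '-jV' with probability lexical_rate_j
    (an assumed lexical proportion) and '-V' otherwise.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    return ["-jV" if rng.random() < lexical_rate_j else "-V" for _ in range(n_trials)]


responses = simulate_responses(0.7, 10_000)
rate = responses.count("-jV") / len(responses)
print(f"proportion of -jV responses: {rate:.3f}")  # close to the assumed rate of 0.7
```

Individual responses vary from trial to trial, but the aggregate proportion approaches the lexical rate, which is the pattern that frequency matching describes.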

For example, Hayes & Londe (2006) found that Hungarian speakers obeyed this “law” when assigning suffixes with either front or back harmony (see Section 2.1) to nonce words with ambiguous harmony, like [haːdeːl], which contains one back vowel and one front vowel. Their participants showed probabilistic behavior: they were more or less likely to assign a harmony class to a noun stem depending on its vowels, and the rate at which speakers placed nouns with given vowels into each harmony class corresponded to the distribution of real noun stems with those vowels. While there are exceptions to this “law” (e.g. Pertsova 2004; Becker et al. 2011), it describes experimental results across a wide range of languages and phenomena. To date, these experiments have generally studied how speakers stochastically generate novel forms of nonce words according to their phonological characteristics.

Formal theories require a means of grammatically encoding these phonological generalizations, as they constitute part of speakers’ knowledge about the morphophonology of their language. One such method builds on a common approach to lexically arbitrary allomorphy in which words following a certain pattern are marked with diacritic features: for example, nouns like [ɡaːt] ‘dam’ that take possessive -jV would be marked with a feature [+j]. Likewise, [fok] ‘degree’ and other words that take -V would be marked with [–j]. Speakers can then extract and store generalizations over nouns that share a feature, as proposed by the sublexicon model of phonological learning (Allen & Becker 2015; Gouskova et al. 2015; Becker & Gouskova 2016) described in Section 6. Thus, the preference of words like [ɡaːt] for -jV can be expressed as a cooccurrence relation between phonological features and [+j]:

- (2)

- A phonological generalization over the distribution of Hungarian possessives

- Nouns ending with [coronal, –continuant, –nasal] (that is, alveolar plosives) tend to have [+j] (e.g. [ɡaːt-jɒ] ‘her dam’)

The phonological properties of a Hungarian noun are not the only source of information about its possessive, as mentioned above. Wurzel (2001) notes that a word’s form in one cell of its morphological paradigm can be predictive of its behavior in other cells. This is clearest in the case of typical inflection classes: knowing the nominative singular suffix of the Russian noun /sobák-a/ ‘dog’ allows speakers to infer that this noun has the suffix -i in the genitive singular, -e in the dative singular, and so on; all of these suffixes are characteristic of what is commonly called inflectional class II (cf. Corbett 1982: 204–5). While Ackerman et al. (2009), Ackerman & Malouf (2013), Parker & Sims (2020), and others have studied the information contained within morphological paradigms, they have not tested whether and how speakers actually use this information. Thus, Bonami & Beniamine (2016: 178) write: “It should be stressed that this paper only established that speakers are exposed to relevant information and that this information is helpful; the next step, of course, is to establish experimentally that speakers do indeed rely on [certain correlations between paradigm cells] when predicting the form of unknown words.” This paper shows that Hungarian speakers do, in fact, learn a correlation between paradigm cells (the plural and possessive) from their lexicon.

As I show in Section 6, the sublexicon model’s cooccurrence relations between features can also account for morphological dependencies. For example, if nouns like [ɟaːr] with plural -ɒk are marked with the diacritic feature [+lower], then the dependency between plural and possessive can be expressed as follows (Halle & Marantz (2008) make a similar proposal for Polish):

- (3)

- A morphological generalization over the distribution of Hungarian possessives

- Nouns ending with [+lower] (that have plural -ɒk) tend to have [–j] (e.g. [ɟaːr-ɒk] ‘factories’, [ɟaːr-ɒ] ‘her factory’)
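Generalizations like (2) and (3) can be read as conditional proportions over a lexicon marked with diacritic features. As a sketch, assume a toy lexicon of seven nouns cited in the introduction and in Section 2, with [±lower] read off their plurals (-ok vs. -ɒk) and [±j] off their possessives. The lexicon and the helper `rate_of_plus_j` are illustrative only, and the resulting proportions are not corpus estimates.

```python
from collections import Counter

# Toy lexicon of nouns mentioned in the text (not a corpus sample):
# lower=True means plural -ɒk, j=True means possessive -jV.
LEXICON = {
    "paːr":  {"j": True,  "lower": False},
    "kaːr":  {"j": False, "lower": False},
    "ɟaːr":  {"j": False, "lower": True},
    "dɒl":   {"j": False, "lower": False},
    "tʃont": {"j": True,  "lower": False},
    "vaːlː": {"j": False, "lower": True},
    "hold":  {"j": True,  "lower": True},
}


def rate_of_plus_j(lexicon, lower):
    """Proportion of [+j] nouns among nouns with the given [±lower] value."""
    counts = Counter(feats["j"] for feats in lexicon.values() if feats["lower"] == lower)
    return counts[True] / (counts[True] + counts[False])


print(rate_of_plus_j(LEXICON, lower=True))   # 1 of the 3 toy [+lower] nouns takes -jV
print(rate_of_plus_j(LEXICON, lower=False))  # 2 of the 4 toy [-lower] nouns take -jV
```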

Thus, I propose that speakers learn phonological and morphological dependencies in the same way and apply them together when productively generating unknown forms of new words. This approach captures morphological dependencies with independently proposed theoretical mechanisms and correctly accounts for the fact that speakers weigh generalizations of different kinds against one another when choosing novel forms of words.

1.2 Road map

In Section 2, I provide a detailed background on the Hungarian plural and possessive, and Section 3 contains a formal analysis. In Section 4, I present corpus data showing the phonological and morphological factors predicting the distribution of possessive -V and -jV in the Hungarian lexicon. In Section 5, I present a nonce word study showing that Hungarian speakers productively extend these phonological and morphological generalizations to novel forms. Section 6 describes a theory of phonological and morphological generalization based on Gouskova et al. (2015). Section 7 concludes with considerations of the theoretical import of my proposal.

2 Background

In the introduction, I described lexical variation in two Hungarian suffixes: the possessive and the plural. In this section, I provide more background about this variation and about a related source of suffix alternations, vowel harmony.

As mentioned above, the possessive has two basic allomorphs, -V and -jV, both of which are very frequent. In the plural, most nouns have -ok, while a small class called “lowering stems” instead takes -ɒk. Table 1 shows that all four combinations of plural and possessive are possible. Nouns are not evenly distributed between the four options, as described in (3): most lowering stems, like [vaːlː] ‘shoulder’, take -V, and only a few, like [hold] ‘moon’, take -jV.

Table 1: Possible combinations of Hungarian plural and possessive suffixes.

| | dɒl ‘song’ | tʃont ‘bone’ | vaːlː ‘shoulder’ | hold ‘moon’ |
|---|---|---|---|---|
| plural | dɒl-ok | tʃont-ok | vaːlː-ɒk | hold-ɒk |
| possessive | dɒl-ɒ | tʃont-jɒ | vaːlː-ɒ | hold-jɒ |

2.1 Vowel harmony

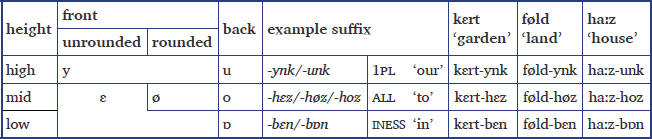

As mentioned briefly in the introduction, Hungarian words have either back or front harmony, and suffix vowels alternate accordingly. Suffixes with mid vowels also show rounding harmony: front-harmonizing suffixes with mid vowels have rounded and unrounded variants to match the last vowel of the stem. These alternations, for short vowels, are shown in Table 2; see Siptár & Törkenczy (2000: 63–73) for more details. One striking asymmetry is that low and mid vowels are not differentiated for all harmony classes: the same vowel, [ɛ], is the low counterpart of mid [ø] for words with front rounded harmony, and serves as both the low and the mid vowel for words with front unrounded harmony. There is no distinct front low vowel like [æ].1

Examples in this paper have back harmony; Table 2 can be used to find the front-harmonizing version of each suffix. Thus, the front-harmonizing equivalents of possessive -ɒ and -jɒ are -ɛ and -jɛ. Regular-stem plural -ok (from the mid vowel set) has two front-harmonizing variants, depending on rounding: -øk (e.g. [ʃyl-øk] ‘porcupines’) and -ɛk. The lowering stem plural -ɒk (from the low vowel set), by contrast, has only one front-harmonizing variant, -ɛk (e.g. [fyl-ɛk] ‘ears’). Words with front unrounded harmony can thus only have plural -ɛk and cannot be distinguished on the surface as lowering stems. Siptár & Törkenczy (2000: 225) mark some nouns with front unrounded harmony as lowering stems on the basis of other properties that correlate (more or less reliably) with lowering stem status. Since this difference is not marked in Papp (1969), which I use as a corpus (see Section 4.1), and cannot be reliably inferred, I assume that all words with front unrounded harmony are undetermined for stem class. In the nonce word experiment (Section 5), I treat stimuli with front unrounded harmony as fillers.

Table 2: Hungarian suffix vowel harmony alternations (from Siptár & Törkenczy 2000: 65).

A stem’s harmony class is usually but not always predictable from its vowels (Siptár & Törkenczy 2000; Hayes & Londe 2006; Hayes et al. 2009; Rebrus et al. 2012; 2020)—thus, at least some nouns must be explicitly marked for harmony class. However, I assume that vowel harmony is handled in the phonology proper, unlike the distinction between possessive -V and -jV: -ɒ and -ɛ are surface variants of one underlying form, while -jɒ and -jɛ are surface variants of a different underlying form.

2.2 The possessive

Table 3 shows the full paradigm of possessives for the four words in Table 1 (see Rounds 2008: 135–137). Hungarian distinguishes between the person and number of possessors, as well as the number of the possessed noun, so [dɒl-om] means ‘my song’, while [dɒl-ɒ-i-m] means ‘my songs’, and so on. There are two main points of variation among these paradigms, which I discuss in this and the next section. The first is the variable presence of [j] (bolded in Table 3) in singular nouns with 3sg and 3pl possessors and plural nouns with all possessors.2 This is the possessive morpheme, with allomorphs -V and -jV. Its vowel deletes before 3pl -uk.

Table 3: Hungarian possessive paradigms for some back-harmonizing words.

| possessor | dɒl ‘song’ | tʃont ‘bone’ | vaːlː ‘shoulder’ | hold ‘moon’ |
|---|---|---|---|---|
| *singular noun* | | | | |
| 1sg | dɒlom | tʃontom | vaːlːɒm | holdɒm |
| 2sg | dɒlod | tʃontod | vaːlːɒd | holdɒd |
| 3sg | dɒlɒ | tʃont**j**ɒ | vaːlːɒ | hold**j**ɒ |
| 1pl | dɒlunk | tʃontunk | vaːlːunk | holdunk |
| 2pl | dɒlotok | tʃontotok | vaːlːɒtok | holdɒtok |
| 3pl | dɒluk | tʃont**j**uk | vaːlːuk | hold**j**uk |
| *plural noun* | | | | |
| 1sg | dɒlɒim | tʃont**j**ɒim | vaːlːɒim | hold**j**ɒim |
| 2sg | dɒlɒid | tʃont**j**ɒid | vaːlːɒid | hold**j**ɒid |
| 3sg | dɒlɒi | tʃont**j**ɒi | vaːlːɒi | hold**j**ɒi |
| 1pl | dɒlɒink | tʃont**j**ɒink | vaːlːɒink | hold**j**ɒink |
| 2pl | dɒlɒitok | tʃont**j**ɒitok | vaːlːɒitok | hold**j**ɒitok |
| 3pl | dɒlɒik | tʃont**j**ɒik | vaːlːɒik | hold**j**ɒik |

Under the standard syntactic analysis (cf. Bartos 1999; É. Kiss 2002; Dékány 2018), -V and -jV are realizations of a Poss head, which has a zero allomorph when adjacent to a first- or second-person possessor marker. (The marker for third-person singular possessors is null.) Thus, I gloss -V and -jV as poss (not 3sg), while -(V)m, -(V)d, etc. mark 1sg, 2sg, and so on.

2.3 Linking vowels

The second point of variation in the possessive paradigms in Table 3 is the alternation between [o] and [ɒ] (underlined in Table 3) in singular nouns with 1sg, 2sg, and 2pl possessors. This is the same lowering stem alternation described for the plural above. Table 4 shows these forms again for the regular stem [dɒl] ‘song’ and the lowering stem [vaːlː] ‘shoulder’, as well as forms of [kɒpu] ‘gate’. The non-possessed plural and the three possessive markers show the same pattern: the suffix is a bare consonant (or -tok) after a vowel, both after vowel-final stems like [kɒpu] and after the plural -i that marks possessed plural nouns (see Table 3). Otherwise, this consonant is preceded by a “linking vowel” (cf. Siptár & Törkenczy 2000: 219), which is mid after [dɒl] and low after [vaːlː]. This suggests an analysis of lowering stems in which the different linking vowel suffixes are handled in a unified way. I present this analysis, alongside an account of possessive allomorphy, in Section 3.

Table 4: Lowering stem alternations in plural and possessive markers.

| possessor | number | dɒl ‘song’ | vaːlː ‘shoulder’ | kɒpu ‘gate’ |
|---|---|---|---|---|
| none | plural | dɒlok | vaːlːɒk | kɒpuk |
| 1sg | singular | dɒlom | vaːlːɒm | kɒpum |
| 2sg | singular | dɒlod | vaːlːɒd | kɒpud |
| 2pl | singular | dɒlotok | vaːlːɒtok | kɒputok |

3 Formal analysis

In this section, I present a formal analysis of Hungarian possessive allomorphy and lowering stems. This analysis is somewhat simplified, but is sufficient for the account of phonological and morphological dependencies in Section 6.

3.1 Possessive allomorphy

In the introduction, I suggested that possessive allomorphy is indexed by a diacritic feature: nouns that take -jV are marked with [+j] and, analogously, nouns that take -V with [–j]. (4) shows how these features govern allomorph selection: they condition the rules of realization that spell out the possessive (ignoring vowel harmony).

- (4)

- Rules of realization for the Hungarian possessive

- a.

- poss ⟷ jɒ / [+j] ___

- b.

- poss ⟷ ɒ / [–j] ___

Under the assumptions of Distributed Morphology (Halle & Marantz 1993; Embick & Marantz 2008), with root-outward vocabulary insertion (Bobaljik 2000), the noun root is inserted first. Its underlying form bears a lexical feature indexing its possessive: for example, the underlying form of [dɒl] ‘song’ is /dɒl[–j]/.3 This [–j] feature is then visible during spellout of the possessive, matching the context in (4b). Thus, the possessive is spelled out as -ɒ, yielding [dɒl-ɒ].

Following Gouskova et al. (2015), whose framework I adopt in Section 6, I assume that both of the rules in (4) are conditioned by a diacritic feature; that is, there is no default rule, and every noun root must have a [±j] feature in order to get a possessive form. Rácz & Rebrus (2012), in contrast, argue that -jV is a productive default for most words (meaning that (4a) should be unmarked). I adopt the symmetrical, default-free analysis for theoretical reasons, but it is also borne out empirically by my experimental results: participants do not show evidence of default behavior, but rather use both -V and -jV for the same nonce words.
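The rules in (4), applied after root insertion, can be sketched as a small function. This is a hypothetical illustration that assumes a root's diacritic features are available as a dictionary; in keeping with the default-free analysis, a root with no [±j] value receives no possessive at all rather than falling back on either allomorph.

```python
def spell_out_poss(root_features):
    """Realize the possessive head following the rules in (4).

    root_features: the noun root's diacritic features, e.g. {"j": False}.
    There is deliberately no default rule: an unmarked root raises an error.
    Vowel harmony is ignored, as in (4), so only back-harmony forms result.
    """
    if "j" not in root_features:
        raise ValueError("root is unmarked for [±j]; no possessive rule applies")
    return "jɒ" if root_features["j"] else "ɒ"  # (4a) vs. (4b)


def possessive(root, root_features):
    # Root-outward insertion: the root is already present, and its [±j]
    # feature conditions the exponent chosen for the possessive head.
    return f"{root}-{spell_out_poss(root_features)}"


print(possessive("dɒl", {"j": False}))   # dɒl-ɒ
print(possessive("tʃont", {"j": True}))  # tʃont-jɒ
```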

3.2 Lowering stems and the plural

In Section 2.3, I showed that the plural and some possessive suffixes show a three-way distinction between -C (after vowel-final stems), -oC (after regular stems), and -ɒC (after lowering stems). In (5), I assume that these suffixes lack the linking vowel underlyingly, but are marked with a feature, [LV],4 indicating that they undergo linking vowel alternations:

- (5)

- Rules of realization for linking vowel suffixes

- a.

- pl ⟷ k[LV]

- b.

- 1sg ⟷ m[LV]

- …

Readjustment rules can then insert the appropriate linking vowel after consonants. In particular, lowering stems like [vaːlː] ‘shoulder’, marked with [+lower] in addition to their possessive feature (/vaːlː[+lower,–j]/), trigger insertion of the low linking vowel [ɒ], while most consonant-final nouns, marked with a complementary [–lower] feature as in /dɒl[–lower,–j]/ ‘song’, trigger insertion of the linking vowel [o].

- (6)

- Readjustment rules for linking vowels

- a.

- Ø → ɒ / [+lower] ___ [LV]

- b.

- Ø → o / [–lower] ___ [LV]

This analysis correctly predicts that a noun that has a low linking vowel in one suffix (e.g. the plural) will have a low linking vowel in all suffixes. It also enables speakers to learn the morphological dependency between lowering stems and the possessive as a correlation between features [+lower] and [–j], as shown in (3) above (see Section 6.3).
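The interaction of (5) and (6) can be sketched as follows, assuming a simplified string representation of stems in IPA and considering back-harmony forms only. The helper names and the vowel inventory in `VOWELS` are assumptions for illustration. Because the linking vowel depends only on the stem's [±lower] value, a given stem receives the same linking vowel before every [LV] suffix.

```python
VOWELS = set("aɒeɛioøuy")  # assumed short-vowel symbols; length is marked with ː


def ends_in_vowel(stem):
    # Drop a final length mark so that long vowels (e.g. aː) count as vowels,
    # while geminates (e.g. lː) still count as consonants.
    return stem.rstrip("ː")[-1] in VOWELS


def attach_lv_suffix(stem, lower, suffix):
    """Attach a suffix marked [LV] (pl -k, 1sg -m, 2sg -d, 2pl -tok).

    lower: the stem's [±lower] value (True or False); pass None for
    vowel-final stems, where it plays no role. Back-harmony forms only.
    """
    if ends_in_vowel(stem):
        linking = ""    # no readjustment rule applies after a vowel
    elif lower:
        linking = "ɒ"   # (6a): Ø → ɒ / [+lower] ___ [LV]
    else:
        linking = "o"   # (6b): Ø → o / [–lower] ___ [LV]
    return stem + linking + suffix


for stem, lower in [("dɒl", False), ("vaːlː", True), ("kɒpu", None)]:
    print([attach_lv_suffix(stem, lower, s) for s in ("k", "m", "d", "tok")])
```

Run on [dɒl], [vaːlː], and [kɒpu], the loop reproduces the forms in Table 4.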

3.3 The representation of lowering stems

The analysis in the previous section assumes that the lowering alternation is encoded morphologically: lowering stems are marked with [+lower], and suffixes with linking vowels have an [LV] feature. Siptár & Törkenczy (2000: 231–244) instead propose an abstract phonological analysis: lowering stems have a floating low feature [+open1], and linking vowel suffixes have an underlying vowel unspecified for height. This vowel surfaces as low in the presence of [+open1]; otherwise, it surfaces as mid after consonants and deletes after vowels.

These analyses represent two approaches to morphophonologically exceptional morphemes. In my analysis in (4), (5), and (6), exceptional lexical items are marked with a diacritic indexing a morpheme-specific rule or constraint (e.g. Pater 2010; Gouskova 2012; Rysling 2016). Siptár & Törkenczy (2000) instead use defective segments and subsegmental units that cannot surface faithfully and behave differently from full segments (e.g. Lightner 1965; Rubach 2013; Trommer 2021).

The two approaches are not mutually exclusive—for example, Chomsky & Halle (1968) use both—and the choice between them is often one of elegance and coverage. Moreover, both have been criticized on similar grounds: Pater (2006) and Gouskova (2012) argue that accounts with abstract underlying forms can overgenerate and be hard to learn, while Bermúdez-Otero (2012; 2013), Haugen (2016), and Caha (2021) argue that arbitrary lexical marking and readjustment rules are unrestrained and weaken our theory of grammar. In this case, the two analyses are largely equivalent: for Siptár & Törkenczy (2000), the floating feature has no phonological effect beyond producing a low linking vowel, making it akin to what Kiparsky (1982) calls “purely diacritic use of phonological features”.5

This paper argues that Hungarian speakers learn generalizations over [+lower], a feature marking lowering stems. For Siptár & Törkenczy (2000), the floating [+open1] feature is similarly unique to lowering stems. Both analyses are thus compatible with my main hypothesis that speakers learn generalizations over features that index unpredictable morphophonological behavior.

With this empirical and formal background, we can turn to a quantitative study of the distribution of possessive allomorphs and lowering stems and begin to test the correlation between the two.

4 Possessive allomorphy in the Hungarian lexicon

The primary goal of this paper is to show that Hungarian speakers extend gradient patterns in their lexicon to nonce words, in particular the morphological generalization that lowering stems prefer possessive -V. In order to show this, I must first determine what the lexical patterns are. This section presents a corpus study of the Hungarian lexicon that serves as the foundation for the nonce word study in Section 5.

4.1 Representing the Hungarian lexicon

In this section I discuss my representation of the Hungarian lexicon.6 My source of data is Papp (1969), a printed morphological dictionary of Hungarian which I digitized manually. I use Papp (1969) for its comprehensive tagging of derivational morphology, but it has potential disadvantages: it is over 50 years old and reflects lexicographic work rather than pure corpus data. In Section 4.2.3, I compare my corpus with that of Rácz & Rebrus (2012), who use a web corpus. The distribution of possessives in the two sources is quite similar, so I conclude that Papp (1969) is a relatively accurate representation of contemporary Hungarian.

Under standard assumptions in Distributed Morphology, lexical information like allomorph selection is stored for roots and affixes, not complex stems (Embick & Marantz 2008). Thus, if speakers are generalizing over the frequency of types in the lexicon (cf. Bybee 1995; 2001; Pierrehumbert 2001; Albright & Hayes 2003; Hayes & Wilson 2008; Hayes et al. 2009), derived words and compounds with the same head (rightmost affix or root) should not count as separate types. Root-based storage predicts that words ending in the same suffix should take the same possessive, which is largely true in Hungarian (Rácz & Rebrus 2012). I adopt the assumption of root-based storage by limiting my corpus to monomorphemic nouns. In Section 5.7.3, I suggest that this corpus more accurately reflects the behavior of participants in the nonce word study than a corpus including complex nouns.

Although adjectives can also take possessive suffixes, I limit my corpus to nouns. Unlike nouns, most adjectives are lowering stems (Siptár & Törkenczy 2000: 229–230), so including adjectives would complicate the relationship between lowering stems and possessive allomorphy (see also Rebrus & Szigetvári 2018). Of 35,130 nominals in my corpus, 5,055 are monomorphemic, and 4,443 of those are listed exclusively as nouns. I excluded 1,768 vowel-final words, since these categorically take -jV and would be undefined for many of the factors in my regression. I also removed the 27 words ending in orthographic h, which is phonologically complicated (Siptár & Törkenczy 2000: 274–276). Finally, I excluded 216 nouns listed in Papp (1969) with variable or unknown possessive to allow for binary coding of the possessive variable (-V vs. -jV). This leaves 2,432 noun types.
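The exclusion steps above can be checked arithmetically. The sketch below simply replays the reported counts, starting from the 4,443 monomorphemic nouns.

```python
# Counts reported in the text for building the corpus from Papp (1969).
nouns_only = 4443  # monomorphemic and listed exclusively as nouns
exclusions = {
    "vowel-final (categorically -jV)": 1768,
    "final orthographic h": 27,
    "variable or unknown possessive": 216,
}

remaining = nouns_only
for reason, n in exclusions.items():
    remaining -= n
    print(f"after excluding {reason}: {remaining}")
```

The successive totals are 2,675, 2,648, and finally 2,432 noun types.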

4.2 Corpus study: the distribution of -V and -jV in the lexicon

In this section, I present the results of a corpus study showing phonological and morphological factors predicting a noun’s possessive allomorph in the Hungarian lexicon. Like other cases of lexically specific variation (e.g. Hayes et al. 2009; Becker et al. 2011; Gouskova et al. 2015), the distribution of -V and -jV shows gradient tendencies; these are conditioned upon both phonological properties and the morphological dependency of stem class. In Section 5, I test whether speakers productively apply this morphological effect to new forms.

4.2.1 Analysis

In this study, I look at various phonological properties of the stem and one morphological property, stem class. The full model of the lexicon is shown in Section 4.2.2. This is a logistic regression with possessive suffix as the dependent variable (-V is coded as 0, -jV as 1; higher coefficients represent a higher likelihood of -jV). The goal of this model is to give an accurate representation of the lexicon, so I include a large number of phonological properties representing the right edge of a stem (local to the suffix): the place (alveolar, labial, palatal, velar) and manner (plosive, non-sibilant fricative, sibilant fricative/affricate, nasal, approximant) of its final consonant; the height (mid, high, low), length (short, long), and roundedness (unrounded, rounded) of its final syllable’s vowel; its vowel harmony class (back, front, variable); the complexity of its final coda (singleton, geminate, cluster); and its length in syllables (polysyllabic, monosyllabic).7 All variables were dummy coded with the first listed level (the most frequent) as the reference level. Alternating stems were coded in their suffixed form: for example, [mɒjom] ‘monkey’, which displays a vowel–zero alternation (e.g. [mɒjm-ɒ] ‘her monkey’), is coded as ending in a cluster with [ɒ] as its last vowel. For stem class, nouns were classified as lowering, non-lowering, variable, or undetermined (nouns with front unrounded harmony; see Section 2.1). This factor was dummy coded with non-lowering as the reference level.

The model was assembled by forward stepwise comparison using the buildmer function in R from the package of the same name (R Core Team 2022; Voeten 2022).8 This function adds factors one at a time such that each additional factor improves (lowers) the model’s Akaike Information Criterion (AIC), which measures how well the model fits the data while penalizing model complexity (the number of estimated parameters). All included factors lowered the AIC by at least 16.5 and significantly improved the model fit according to a chi-squared test (conducted using the drop1 function in R’s basic stats package). The roundedness and backness of the final vowel were also considered but did not improve the model. The model equation is: possessive ∼ C manner + C place + stem class + V height + harmony + coda + V length + syllables.
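The selection procedure can be sketched as a greedy loop. This is a generic illustration of forward stepwise selection by AIC, not buildmer's own implementation: model fitting is abstracted into a caller-supplied function, and the AIC values in `TOY_AIC` are invented for illustration.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion, 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def forward_stepwise(candidates, aic_of, min_improvement=0.0):
    """Greedy forward selection: repeatedly add the factor that lowers AIC most.

    aic_of maps a frozenset of factors to the AIC of the model containing them.
    Stops when no remaining factor lowers AIC by more than min_improvement.
    Returns (factor, ΔAIC) pairs in the order the factors were added.
    """
    selected = frozenset()
    current = aic_of(selected)
    remaining = set(candidates)
    steps = []
    while remaining:
        best = min(remaining, key=lambda f: aic_of(selected | {f}))
        new = aic_of(selected | {best})
        if current - new <= min_improvement:
            break  # no candidate improves the model enough
        steps.append((best, new - current))
        selected = selected | {best}
        current = new
        remaining.discard(best)
    return steps


# Invented AIC values for three candidate factors (not from Tables 5 or 6).
TOY_AIC = {
    frozenset(): 1000.0,
    frozenset({"manner"}): 800.0,
    frozenset({"place"}): 900.0,
    frozenset({"stem class"}): 950.0,
    frozenset({"manner", "place"}): 700.0,
    frozenset({"manner", "stem class"}): 780.0,
    frozenset({"manner", "place", "stem class"}): 705.0,
}
print(forward_stepwise(["manner", "place", "stem class"], TOY_AIC.__getitem__))
print(aic(-345.0, 5))  # 700.0: a model with log-likelihood -345 and 5 parameters
```

With these toy numbers, stem class is never added, because the three-factor model's AIC (705) is higher than that of the two-factor model (700).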

I also fitted two intermediate regressions: one with only phonological factors, and the other with only the morphological factor of stem class. I present the phonological model in Section 4.2.2 alongside the full model. I include phonology and stem class as separate factors in my model of the nonce word experiment in Section 5.6 (see Section 5.7.1 for discussion), and the phonological model of the lexicon represents speakers’ knowledge of phonological generalizations over possessive allomorphy. The phonological factors are the same in the two models, though added in a slightly different order. The final equation of this model is: possessive ∼ C manner + C place + harmony + V height + V length + coda + syllables.

I do not present the model predicting possessive from the morphological factor of stem class alone (possessive ∼ stem class) because I do not use it in the nonce word study: as I discuss in Section 5.5, for my analysis of the experimental results I encode stem class categorically (lowering vs. not).

4.2.2 Results

Table 5 contains the full model with factors listed in the order in which they were added to the model (roughly corresponding to importance). Most of the factors are significant; the most influential are the place and manner of the final consonant, followed by stem class. These two phonological effects are likely driven by the near-categorical use of -V among sibilants and palatals. Other phonological factors have significant effects as well: for example, front-harmonizing words take -jV less often than back-harmonizing words, and nouns ending in geminates prefer -jV relative to nouns ending in singleton consonants. The effect of lowering stems is strongly negative: independent of their phonology, lowering stems are more likely to take -V than non-lowering stems. The difference between non-lowering stems and nouns with undetermined stem class is smaller and not significant; as discussed in Section 2.1, this class comprises nouns with front unrounded harmony, so its effect should be masked by the factor of harmony. I confirmed that stem class is not collinear with the phonological factors by computing its variance inflation factor (VIF) using the check_collinearity function from version 0.10.8 of R’s performance package (Lüdecke et al. 2021). The VIF measures how much a coefficient’s variance is inflated by correlation with the other predictors, that is, whether different factors are describing the same effect. Stem class had a VIF of 2.96, below the rule-of-thumb thresholds of 5 or 10 for problematic collinearity (see James et al. 2013), meaning that its effect cannot be reduced to some combination of phonological factors.
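The VIF of a predictor j is $1/(1 - R_j^2)$, where $R_j^2$ comes from regressing that predictor on all the others. The pure-Python sketch below computes it for numeric predictors only; it is not how the performance package implements check_collinearity, whose handling of multi-level factors such as stem class may differ. The toy columns and helper names are assumptions for illustration.

```python
def _solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def r_squared(y, predictors):
    """R^2 of an OLS regression of y on the predictors plus an intercept."""
    n = len(y)
    cols = [[1.0] * n] + predictors
    XtX = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
    Xty = [sum(a * b for a, b in zip(ci, y)) for ci in cols]
    beta = _solve(XtX, Xty)  # normal equations
    fitted = [sum(bk * col[i] for bk, col in zip(beta, cols)) for i in range(n)]
    mean_y = sum(y) / n
    ss_tot = sum((v - mean_y) ** 2 for v in y)
    ss_res = sum((v - f) ** 2 for v, f in zip(y, fitted))
    return 1 - ss_res / ss_tot


def vif(columns, j):
    """VIF of predictor j: 1 / (1 - R_j^2), regressing it on all the others."""
    others = [c for k, c in enumerate(columns) if k != j]
    return 1 / (1 - r_squared(columns[j], others))


def r2_from_vif(v):
    """Share of a predictor's variance explained by the others, given its VIF."""
    return 1 - 1 / v


x1 = [1, -1, 1, -1]
x2 = [1, 1, -1, -1]    # uncorrelated with x1
x3 = [1, -1, 1, -0.9]  # nearly collinear with x1
print(vif([x1, x2], 0))  # 1.0: no shared variance
print(vif([x1, x3], 0))  # large: x3 nearly duplicates x1
print(round(r2_from_vif(2.96), 3))
```

Read in reverse, a VIF of 2.96 means that roughly two thirds of a predictor's variance is shared with the other predictors, which is below the usual thresholds for concern.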

Table 5: Regression model with phonological predictors of possessive -jV and stem class, with significant effects italicized.

| Factor / level | ΔAIC | β coef | SE | Wald z | p |
|---|---|---|---|---|---|
| Intercept | | 4.32 | .31 | 13.99 | <.0001 |
| C Manner (default: plosive) | –951.1 | | | | |
| fricative | | –1.03 | .44 | –2.37 | .0179 |
| sibilant | | –11.07 | .80 | –13.87 | <.0001 |
| nasal | | –2.07 | .28 | –7.39 | <.0001 |
| approximant | | –4.06 | .31 | –13.10 | <.0001 |
| C Place (default: alveolar) | –719.8 | | | | |
| labial | | –2.22 | .27 | –8.35 | <.0001 |
| palatal | | –9.25 | 1.13 | –8.22 | <.0001 |
| velar | | –3.54 | .31 | –11.55 | <.0001 |
| Stem class (default: non-lowering) | –241.3 | | | | |
| lowering | | –3.71 | .44 | –8.44 | <.0001 |
| undetermined | | –0.25 | .25 | –0.98 | .3278 |
| variable | | –2.76 | .69 | –4.00 | <.0001 |
| V Height (default: mid) | –114.8 | | | | |
| high | | 1.85 | .23 | 8.09 | <.0001 |
| low | | 0.77 | .21 | 3.66 | .0003 |
| Harmony (default: back) | –81.6 | | | | |
| front | | –1.98 | .27 | –7.41 | <.0001 |
| variable | | 2.25 | 1.04 | 2.17 | .0297 |
| Coda (default: singleton) | –39.2 | | | | |
| geminate | | 2.43 | .41 | 5.97 | <.0001 |
| cluster | | –0.08 | .22 | –0.37 | .7147 |
| V Length (default: short) | –38.7 | | | | |
| long | | 1.30 | .19 | 6.97 | <.0001 |
| Syllables (default: polysyllabic) | –16.8 | | | | |
| monosyllabic | | –0.79 | .18 | –4.31 | <.0001 |

Table 6 shows the intermediate model with phonological factors only. This model fits significantly worse than the full model (χ² = 112.9, p < .001), although both fit the data quite well (Tjur’s R² of .68 for the phonological model and .71 for the full model).9 The phonological effects in the two models are very similar, further indicating that stem class has an effect independent of phonology.
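Tjur's R² (the coefficient of discrimination) is the difference between the mean predicted probability of -jV among nouns that actually take -jV and the mean among nouns that take -V. A minimal sketch, using toy predictions rather than output from the models in Tables 5 and 6:

```python
def tjur_r2(outcomes, predicted):
    """Tjur's coefficient of discrimination for a binary outcome.

    outcomes: observed values (1 = -jV, 0 = -V).
    predicted: model-predicted probabilities of -jV for the same items.
    """
    ones = [p for y, p in zip(outcomes, predicted) if y == 1]
    zeros = [p for y, p in zip(outcomes, predicted) if y == 0]
    return sum(ones) / len(ones) - sum(zeros) / len(zeros)


# Toy predictions for four hypothetical nouns (not model output).
print(tjur_r2([1, 1, 0, 0], [0.9, 0.7, 0.2, 0.4]))  # approximately 0.5
```

A value near 1 means the model assigns high probabilities of -jV to -jV nouns and low ones to -V nouns; a value near 0 means it barely separates them.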

Table 6: Regression model with phonological predictors of possessive -jV, with significant effects italicized.

| Factor / level | ΔAIC | β coef | SE | Wald z | p |
|---|---|---|---|---|---|
| Intercept | | 4.16 | .30 | 13.85 | <.0001 |
| C Manner (default: plosive) | –951.1 | | | | |
| fricative | | –1.44 | .39 | –3.73 | .0002 |
| sibilant | | –10.69 | .80 | –13.38 | <.0001 |
| nasal | | –1.95 | .27 | –7.16 | <.0001 |
| approximant | | –4.08 | .30 | –13.47 | <.0001 |
| C Place (default: alveolar) | –719.8 | | | | |
| labial | | –2.02 | .26 | –7.94 | <.0001 |
| palatal | | –8.88 | 1.10 | –8.06 | <.0001 |
| velar | | –3.26 | .29 | –11.19 | <.0001 |
| Harmony (default: back) | –224.0 | | | | |
| front | | –2.03 | .18 | –10.96 | <.0001 |
| variable | | 2.27 | .97 | 2.33 | .0197 |
| V Height (default: mid) | –68.5 | | | | |
| high | | 1.73 | .22 | 7.89 | <.0001 |
| low | | 0.28 | .19 | 1.50 | .1342 |
| V Length (default: short) | –44.4 | | | | |
| long | | 1.40 | .17 | 7.98 | <.0001 |
| Coda (default: singleton) | –44.2 | | | | |
| geminate | | 2.47 | .40 | 6.25 | <.0001 |
| cluster | | 0.04 | .21 | 0.17 | .8617 |
| Syllables (default: polysyllabic) | –44.4 | | | | |
| monosyllabic | | –1.15 | .17 | –6.67 | <.0001 |

I use the model in Table 6 to represent the phonological properties of nonce words in my analysis of the nonce word experiment in Section 5.6. For a given word (real or nonce), this regression assigns a value x (which I call phon_odds): the predicted log-odds that the word takes -jV, corresponding to the predicted probability $P = e^{x}/(1+e^{x})$. This value is the sum of the β coefficient of the intercept and the β coefficients of every factor level on which the word differs from the default. For example, the nonce word [lufɒn] has $x = \beta_{\text{Intercept}} + \beta_{\text{C manner: nasal}} + \beta_{\text{V height: low}} = 4.16 - 1.95 + 0.28 = 2.49$, corresponding to a probability of $e^{2.49}/(1+e^{2.49}) \approx .923$, or 92.3%: if this were a real word, its possessive would likely be [lufɒn-jɒ]; as a nonce word, speakers should likewise be likely to choose [lufɒn-jɒ] for its possessive.
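The phon_odds computation for [lufɒn] can be sketched in a few lines. Only the Table 6 coefficients needed for this example are included; the dictionary layout and function names are illustrative assumptions, and a full version would list every factor level in the table.

```python
import math

# β coefficients from Table 6 needed for [lufɒn]; other levels would be added alike.
TABLE6 = {
    "Intercept": 4.16,
    ("C manner", "nasal"): -1.95,
    ("V height", "low"): 0.28,
}


def phon_odds(levels):
    """Log-odds of -jV: the intercept plus β for each non-default factor level."""
    return TABLE6["Intercept"] + sum(TABLE6[level] for level in levels)


def prob_j(x):
    """Inverse logit: P(-jV) = e^x / (1 + e^x)."""
    return math.exp(x) / (1 + math.exp(x))


# [lufɒn]: alveolar (default place) nasal, low final vowel; all else default.
x = phon_odds([("C manner", "nasal"), ("V height", "low")])
print(round(x, 2), round(prob_j(x), 3))  # 2.49 0.923
```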

4.2.3 Discussion

The purpose of this corpus study is to establish that a Hungarian noun’s possessive depends on specific phonological properties and on its stem class; in Section 5, I test the hypothesis that speakers extend these same dependencies to the possessives of nonce words as well, according to the Law of Frequency Matching described in (1). For my analysis of the nonce word study to work as intended, my data source, Papp (1969), must be an accurate representation of the contemporary Hungarian lexicon. However, we are faced with a potentially troubling discrepancy: some of the results in Section 4.2.2 differ from those of Rácz & Rebrus (2012), who use a web corpus. In this section, I show that these differences are due to choices made in constructing our corpora, not to properties of the data source: their corpus and Papp (1969) are largely similar, at least in the distribution of possessive allomorphs.

4.2.3.1 Corpus comparison

Rácz & Rebrus (2012) note three tendencies in Hungarian possessive distribution: stems ending in labial and alveolar plosives take -jV more than stems ending in velar plosives, stems ending in complex codas (geminates and clusters) take -jV more than stems ending in singletons, and stems with back harmony take -jV more than stems with front harmony. Section 4.2.2 shows a similar effect of harmony and coda complexity (though in my case it is limited to geminates, not clusters), but finds a different effect of place: alveolars prefer -jV relative to both labials and velars. In addition, my corpus contains much higher rates of -jV across the board than that of Rácz & Rebrus (2012).

Rácz & Rebrus (2012) compare type and token counts rather than using statistical modelling. Thus, they limit their presentation of the effect of place of articulation to plosives, to keep sibilants from skewing the data. Their type and token counts for plosives by place are shown in Table 7.¹⁰

Table 7: Phonological distribution of possessive allomorphs from Rácz & Rebrus (2012: 57–58) for nominals ending in non-palatal plosives, by place of articulation.

| Place | -V tokens (thousands) | -jV tokens (thousands) | % -jV (tokens) | -V types (thousands) | -jV types (thousands) | % -jV (types) |
|---|---|---|---|---|---|---|
| labial | 126 | 186 | 60% | 0.3 | 0.3 | 50% |
| alveolar | 1039 | 350 | 25% | 1.7 | 1.4 | 45% |
| velar | 1706 | 150 | 8% | 3.2 | 0.5 | 14% |
| total | 2871 | 686 | 19% | 5.2 | 2.2 | 30% |

The counts in Table 7 are tabulated from an unlemmatized web corpus that includes all words appearing with possessive suffixes: monomorphemic nouns; derived nouns; and occasional adjectives, numerals, and similar items. In Table 8, I show the possessive distribution of plosive-final stems from Papp (1969) for my corpus of monomorphemic nouns only (see Section 4.1) and for the full set of nominals whose possessives are listed, including complex nouns, adjectives, and others.

Table 8: Phonological distribution of possessive allomorphs from Papp (1969) for nominals ending in non-palatal plosives, by place of articulation.

| Place | -V (monomorphemic nouns) | -jV (monomorphemic nouns) | % -jV (monomorphemic nouns) | -V (all nominals) | -jV (all nominals) | % -jV (all nominals) |
|---|---|---|---|---|---|---|
| labial | 23 | 76 | 76.8% | 291 | 406 | 58.2% |
| alveolar | 13 | 359 | 96.5% | 1633 | 1502 | 47.9% |
| velar | 126 | 224 | 64.0% | 2851 | 743 | 20.7% |
| total | 162 | 659 | 80.3% | 4775 | 2651 | 35.7% |

The distribution of -jV across plosive-final nominal stems reported by Rácz & Rebrus (2012) is very similar to (though slightly lower than) that across all nominals in Papp (1969). Monomorphemic nouns have a very different distribution: -jV is much more common overall, and alveolar plosives prefer -jV relative to labial plosives. Thus, the differences between their study and mine lie almost entirely in the difference between monomorphemic nouns and morphologically complex stems. A comparison of the other two effects reported by Rácz & Rebrus (2012), stem-final coda complexity and harmony class (shown in the supplemental materials), yields a similar result. The distribution of possessives in Papp (1969) is therefore similar enough to contemporary sources to serve as a representation of the Hungarian lexicon in my analysis of the nonce word study in Section 5.
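The percentages in Table 8, and the reversal of the labial/alveolar ordering between the two counts, follow directly from the raw numbers. A minimal Python check (the dictionary layout and function names are illustrative):

```python
# (-V, -jV) counts for plosive-final stems from Table 8 (Papp 1969).
TABLE8 = {
    "monomorphemic nouns": {"labial": (23, 76), "alveolar": (13, 359), "velar": (126, 224)},
    "all nominals": {"labial": (291, 406), "alveolar": (1633, 1502), "velar": (2851, 743)},
}


def pct_jv(n_v, n_jv):
    """Percentage of stems taking -jV."""
    return 100 * n_jv / (n_v + n_jv)


def summarize(rows):
    """Per-place percentages, the pooled total, and places ranked by % -jV."""
    by_place = {place: round(pct_jv(*counts), 1) for place, counts in rows.items()}
    total = round(pct_jv(sum(v for v, _ in rows.values()),
                         sum(jv for _, jv in rows.values())), 1)
    ranking = sorted(by_place, key=by_place.get, reverse=True)
    return by_place, total, ranking


for corpus, rows in TABLE8.items():
    print(corpus, *summarize(rows))
```

The rankings come out as alveolar > labial > velar for monomorphemic nouns but labial > alveolar > velar for all nominals, which is the place difference discussed in the text.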

The choice to study monomorphemic nouns only is theoretically driven: my account of phonological and morphological frequency matching effects is grounded in a theory of morphology that stores roots rather than stems. It is also an empirical question: which corpus more closely reflects Hungarian speakers’ behavior? In Section 5.7.3, I suggest that the monomorphemic corpus is a better match for my experimental results. However, the primary results of this paper (Hungarian speakers apply learned generalizations about the possessive according to both the phonology and the stem class of nouns) are robust no matter the corpus.

4.2.3.2 Reinterpretation of Rácz & Rebrus (2012)

I conclude this section with a brief discussion of the plosive place effect discussed above. Rácz & Rebrus (2012) attribute the relatively high rates of -jV among nouns ending in labial and alveolar stops to two derivational suffixes that nearly categorically take -jV: the past participle -tː (e.g. [ɒlkɒlmɒz-otː-jɒ] ‘her employee’) and the comparative -bː.¹¹ However, inspection of my corpus suggests that this is not a major factor: in particular, there are almost no comparatives with listed possessives in Papp (1969). Instead, the crucial fact, also noted by Rácz & Rebrus (2012), is that almost all other derivational suffixes categorically take -V. This explains why the rate of -jV is so much higher among monomorphemic nouns than among all nominals.¹² In addition, two very common nominalizers, -ʃaːɡ (1,348 nouns, for example [sɒbɒd-ʃaːɡ-ɒ] ‘her freedom’) and -ɒt (1,364 nouns, for example [viʒɡaːl-ɒt-ɒ] ‘her examination’), are likely responsible for the fact that stems ending in velar and alveolar plosives, respectively, show much lower rates of -jV when counting all nominals than when counting only monomorphemic nouns. By contrast, there are no derived forms in Papp (1969) ending in labial stops, so the rate of -jV among these nominal stems stays relatively high. This section thus shows one advantage of a morphologically rich corpus like Papp (1969): it provides a detailed view of the distribution of possessives that can be difficult to obtain from a naturalistic corpus.

5 A nonce word study of the Hungarian morphological dependency

In this section, I present a nonce word study testing whether Hungarian speakers productively apply gradient phonological and morphological effects on possessive allomorphy from the lexicon. While previous nonce word studies have focused on phonological generalizations (e.g. Hayes et al. 2009; Becker et al. 2011; Gouskova et al. 2015), I show that speakers apply a morphological dependency as well: nonce words are assigned -V more often when presented as lowering stems (with plural -ɒk). I use a novel extension of the nonce word paradigm. In most nonce word studies, speakers are presented with a single form and make inferences based on its phonological properties. In this study, participants see both the base form and a second, inflected form, which provides information about the nonce word’s inflectional patterns. This novel experimental condition enables me to test the psychological reality of correlations between morphological patterns.

5.1 Predictions

I hypothesize that speakers form the possessives of novel words by matching the distribution of -V and -jV in the lexicon (described in Section 4) according to both phonological factors and stem class, following the Law of Frequency Matching of Hayes et al. (2009) (see Section 1.1). Since my primary concern is the morphological dependency, I focus on phonological frequency matching in the aggregate rather than individual phonological effects.

Rácz & Rebrus (2012) argue that -jV is the productive default for most words (see Section 2.2). If this is true, speakers should instead categorically assign -jV to most words.

5.2 Participants

Participants were recruited through Prolific (https://app.prolific.co/) and were born in Hungary and raised as monolingual Hungarian speakers. I recruited 30 participants for the stimulus norming study and 91 for the stimulus testing study. One participant was rejected for poor quality (see Section 5.4.2), and an additional 48 participants were recruited for earlier versions of the stimulus testing study; because the experimental task was substantially different (fill-in-the-blank rather than forced-choice, or missing the attention check for the plural), their data are not presented here.

5.3 Stimuli

I trained the UCLA Phonotactic Learner (Hayes & Wilson 2008) on the corpus of Hungarian nouns used in Section 4.1. The program produces a “sample salad” of 1,968 nonce words randomly generated from the probability distribution defined by the phonotactic grammar it learned. Many of the generated words included long strings of consonants, like [ʒuːɡkfkb]. These implausible words reflect the limitations of the learned grammar: in particular, its constraints were limited to bigram sequences of segments or natural classes (that is, two segments or classes long) plus a word boundary, so the learner could not learn restrictions against long strings of consonants. Because the learned grammar was much less restrictive than actual Hungarian, words like [ʒuːɡkfkb] were not used as stimuli. However, the grammar was good enough that many of its generated words looked like reasonable Hungarian words (like [tuːs]), and some were actually existing Hungarian words, like [iːɲ] ‘gum’.

I selected the 498 generated nonce words with the shape (C)VC(C) or (CV)CVC(C) and removed 162 disyllabic disharmonic words (which had one front vowel and one back vowel). Finally, I removed 19 generated stimuli that were headwords of any part of speech in Papp (1969).¹³ This left a final set of 317 nonce word stimuli, some of which were still, impressionistically, phonologically questionable (e.g. [ɲɒsm]). Each word was presented in the singular and plural, in the latter case with either a regular or lowering plural suffix.
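The shape filter in the first step can be sketched as a regular expression over a consonant/vowel skeleton. This is an illustrative Python reconstruction, not the script actually used: it assumes one IPA symbol per segment (with ː attached to the preceding symbol, so long vowels and geminates count as single segments), and it implements neither the disharmony filter nor the dictionary lookup.

```python
import re

# Hungarian vowel symbols (short and long) in the transcription used in the text.
VOWELS = {"ɒ", "aː", "e", "eː", "i", "iː", "o", "oː", "ø", "øː", "u", "uː", "y", "yː"}

# (C)VC(C) or (CV)CVC(C), written over a C/V skeleton.
SHAPE = re.compile(r"C?VCC?|(?:CV)?CVCC?")


def segments(word):
    """Split an IPA string into segments, attaching the length mark ː to the
    preceding symbol. Assumption: every other character is its own segment,
    so affricates written with two symbols are not handled."""
    segs = []
    for ch in word:
        if ch == "ː" and segs:
            segs[-1] += ch
        else:
            segs.append(ch)
    return segs


def skeleton(word):
    """Map each segment to V (vowel) or C (anything else)."""
    return "".join("V" if s in VOWELS else "C" for s in segments(word))


def has_target_shape(word):
    return SHAPE.fullmatch(skeleton(word)) is not None


for w in ["lufɒn", "tuːs", "ɲɒsm", "huːʃɒkː", "ʒuːɡkfkb"]:
    print(w, skeleton(w), has_target_shape(w))
```

Only [ʒuːɡkfkb] (skeleton CVCCCCC) fails the filter among these examples.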

5.4 Procedure

This experiment was split into two studies. First, participants rated all stimuli for plausibility as Hungarian words. The ratings obtained in this study were used to select a smaller set of stimuli for the main experiment, in which participants selected possessive forms.

5.4.1 Stimulus norming and selection

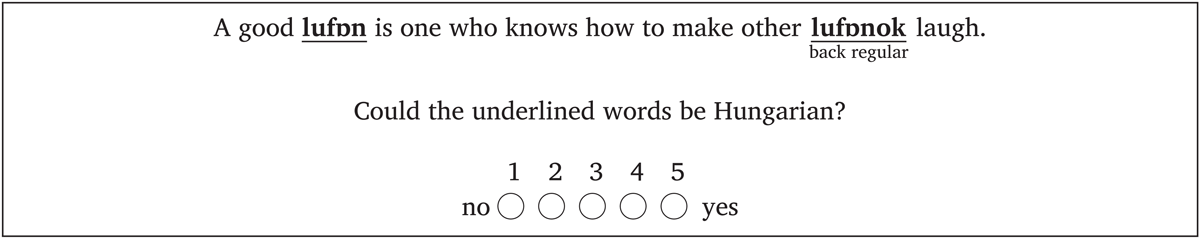

The goal of this study was to confirm the intuitions of the author (not a native Hungarian speaker) that the stimuli were broadly plausible as Hungarian words, including when presented as lowering stems. Participants completed 50 trials, each with a different stimulus. Stimuli were chosen randomly: five words never appeared at all, and the remaining 312 words were used in 1–10 trials each (mean 4.8 trials, standard deviation 2.1). An example trial is shown in Figure 1. Each trial had a frame sentence containing the target stimulus twice, presented in written form with regular Hungarian orthography (lufan, lufanok). In its first occurrence, the stimulus appeared in bare nominative form; the second time, the stimulus had a plural suffix (and sometimes subsequent suffixes). Most stimuli were shown with regular plurals (e.g. -ok), but 8 randomly chosen trials instead showed stimuli as lowering stems (e.g. with plural -ɒk).¹⁴ Participants rated each stimulus on a five-point scale in response to the question “Could the underlined words be Hungarian?”, with the ends of the scale labelled no (1) and yes (5). Frame sentences, stimuli, and the sample trial in the original Hungarian are included in the supplemental materials.

Three participants were discarded because their ratings deviated substantially from the average ratings for a given word (an average deviation of 1.5 from the mean for stimuli that were rated by at least 3 participants). The ratings of the remaining 27 participants were used as inputs to a Python script (available in the supplemental materials) that generated potential subsets of stimuli to be used in the stimulus testing study. These sets were evaluated on three criteria: distance from a desired phonological distribution similar to that of the base corpus, a high average overall rating, and a consistent average rating for stimuli falling into each phonological category. The distribution was chosen to allow for a sizable number of trials for stimuli with each phonological property while still ensuring that the aggregate distribution of all trials can be compared to the aggregate lexicon. The goal of maximizing the average rating was to make the experiment as naturalistic as possible: while I would not expect any individual low-rated stimuli to be treated differently from high-rated stimuli, participants are supposed to pretend that the stimuli are real words of Hungarian, and too great a presence of phonotactically deviant words might make the experiment more obviously artificial. I examined high-ranked sets manually and selected a set with 81 stimuli to use for the main testing phase.
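The exclusion criterion in the first sentence can be made concrete as follows. This Python sketch is one interpretation rather than the script in the supplemental materials: it assumes that the per-stimulus mean includes the rater's own score and that a participant is discarded once their mean absolute deviation reaches 1.5.

```python
from collections import defaultdict
from statistics import mean


def flag_raters(ratings, threshold=1.5, min_raters=3):
    """Return participants whose ratings deviate too far from the consensus.

    ratings: iterable of (participant, stimulus, score) with scores on the 1-5 scale.
    Only stimuli rated by at least `min_raters` participants are used. Assumptions:
    the stimulus mean includes the participant's own score, and a mean absolute
    deviation equal to `threshold` already counts as too far.
    """
    ratings = list(ratings)
    scores_by_stim = defaultdict(list)
    for _, stim, score in ratings:
        scores_by_stim[stim].append(score)
    stim_mean = {s: mean(v) for s, v in scores_by_stim.items() if len(v) >= min_raters}

    deviations = defaultdict(list)
    for participant, stim, score in ratings:
        if stim in stim_mean:
            deviations[participant].append(abs(score - stim_mean[stim]))
    return {p for p, devs in deviations.items() if mean(devs) >= threshold}


# Toy data: three raters broadly agree, rater D does not.
toy = [
    ("A", "x", 5), ("B", "x", 5), ("C", "x", 4), ("D", "x", 1),
    ("A", "y", 4), ("B", "y", 5), ("C", "y", 4), ("D", "y", 1),
]
print(flag_raters(toy))  # {'D'}
```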

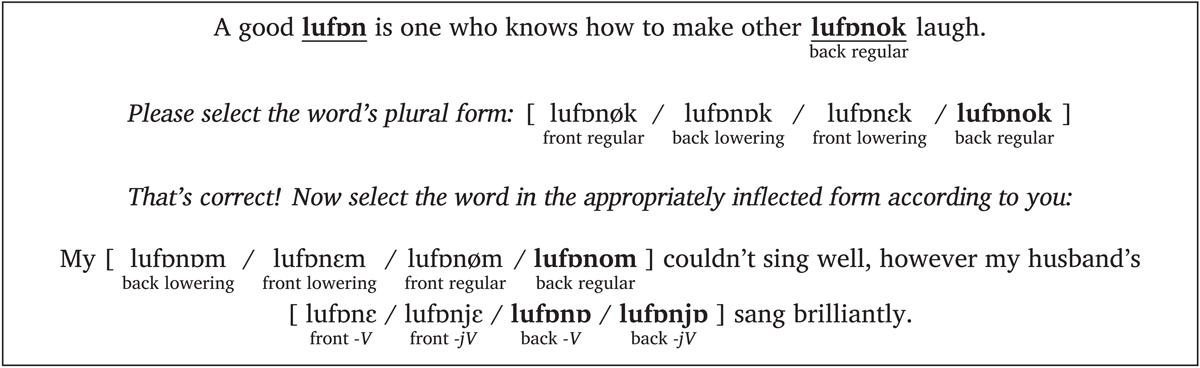

5.4.2 Morphological dependency testing

Participants each completed 35–50 trials,¹⁵ which had the format shown in Figure 2. First, the stimuli were presented in written form (lufan, lufanok, etc.) in the same frame sentences as in the stimulus norming experiment. As before, stimuli were chosen randomly: each stimulus appeared in 44–62 trials (mean 53.1, standard deviation 4.2). In Figure 2, the nonce word [lufɒn] has a regular plural -ok, but in 8–12 trials, the stimuli were presented as lowering stems, e.g. plural [lufɒn-ɒk]. As an attention check, participants had to correctly select the plural form appearing in the first sentence. Next, a second frame sentence appeared, in which participants had to select 1sg (or, for two sentences, 2sg) and possessive forms. The 1sg and 2sg suffixes have the same regular and lowering stem variants as the plural (see Section 2.3), so the linking vowel should match that of the plural: in this case, [lufɒn-om].¹⁶ The choices included both back and front variants; the possessive should have the same vowel harmony as the plural (in this case, [lufɒn-ɒ] or [lufɒn-jɒ]).

Trials in which participants chose a discordant 1sg or antiharmonic possessive were discarded. Of 4,305 total trials, 141 had a harmony mismatch in one or both selected forms and another 565 had a stem class mismatch. Setting aside the trials with a harmony mismatch, most stem class mismatches came in lowering stem trials: in 1,003 trials in which the stimulus was presented as a lowering stem, participants selected a lowering stem suffix for the 1sg form in only 525 (52.3%). By contrast, participants matched non-lowering stems 96.2% of the time (1,890 of 1,964 trials). These results indicate that participants often resisted treating nonce words as lowering stems. Individuals varied in their matching of stem class: 10 successfully matched all lowering stem trials, while two matched none and another 11 matched 20% or less (the standard deviation for individual match rate was 28.7%; I compare rate rather than number of matched trials because participants saw different numbers of trials). The results presented here exclude one participant who performed particularly poorly (matching 44% of trials, and matching the harmony in only 62% of trials, suggesting overall lack of attention); an analysis excluding more poor performers (14 additional participants who matched in 60% or less of all trials or 20% or less of lowering stem trials) yields similar results.
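The discard rules and per-condition match rates described above can be sketched as follows. The `Trial` fields are hypothetical names for the information each trial records; this is an illustration of the filtering logic, not the analysis code.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    participant: str
    presented_class: str   # "regular" or "lowering": the plural shown in the first sentence
    chosen_1sg_class: str  # stem class implied by the 1sg suffix the participant picked
    harmony_ok: bool       # True if both selected forms agree in harmony with the stem


def is_concordant(t):
    """Kept for analysis: harmony matches and the 1sg suffix matches the presented stem class."""
    return t.harmony_ok and t.chosen_1sg_class == t.presented_class


def match_rates(trials):
    """Among trials without a harmony mismatch, the share per condition whose
    1sg suffix matches the presented stem class."""
    rates = {}
    for cls in ("regular", "lowering"):
        pool = [t for t in trials if t.harmony_ok and t.presented_class == cls]
        if pool:
            rates[cls] = sum(t.chosen_1sg_class == cls for t in pool) / len(pool)
    return rates


def analysis_set(trials, excluded_participants=frozenset()):
    """Concordant trials from participants who were not excluded."""
    return [t for t in trials if is_concordant(t) and t.participant not in excluded_participants]


toy = [
    Trial("p1", "lowering", "lowering", True),
    Trial("p1", "lowering", "regular", True),   # stem class mismatch
    Trial("p1", "regular", "regular", True),
    Trial("p2", "regular", "regular", False),   # harmony mismatch
]
print(match_rates(toy), len(analysis_set(toy)))
```

Applied to the counts reported above, the same calculation gives 525/1,003 ≈ 52.3% for lowering stems and 1,890/1,964 ≈ 96.2% for non-lowering stems.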

5.5 Analysis

After removing discordant trials and trials of the one excluded speaker, 3,577 trials remained. Of these, 1,179 had filler stimuli with front unrounded harmony, which do not exhibit lowering stem alternations (see Section 2.3); removing these leaves 2,398 trials with 57 stimuli.

This experiment tests the hypothesis that speakers apply patterns from their lexicon in producing novel possessive forms, in particular the morphological correlation between stem class and possessive. The clearest confirmation of the effect of stem class would be to show that the odds assigned to nonce words by the model of the lexicon in Table 5, which includes stem class as well as phonological factors (which I call phon_morph_odds), predict the experimental results better than the odds assigned by the lexicon model in Table 6, which includes phonological factors but not stem class (phon_odds). However, the model with phon_morph_odds performs worse than a model with phon_odds alone (χ² = 18.77). In Section 5.7.1, I show that this mismatch is, in fact, expected, and that it is methodologically justified to separate stem class from the phonological factors, as done in Section 5.6. The model presented below shows that taking the morphological factor of stem class into account leads to a better fit: participants’ choice of possessive for a nonce word is influenced by whether it is presented as a lowering stem. I still represent the effect of a word’s phonology as a single aggregate factor (phon_odds), reflecting the assumption that speakers apply all of the phonological effects from the lexicon together. While this may paper over some differences between the effects of individual phonological factors, it is sufficient for this study, whose main purpose is to test the effect of stem class. (A model using individual phonological factors yields the same effect size for stem class.)

I present a mixed logistic regression model of the experimental results whose dependent variable is the possessive suffix selected in a trial (-V, coded as 0, vs. -jV, coded as 1), with random intercepts for participant and item. The model includes a fixed factor of phon_odds (the log odds that a nonce word takes -jV according to the phonological model of the lexicon in Table 6; see Section 4.2.2) and a by-participant random slope for phon_odds. It also includes a factor of stem class. I represent this as a dummy-coded categorical variable, rather than as a morph_odds factor derived from a lexicon model trained on stem class alone, for ease of presentation: given that this is a factor with only two levels (regular and lowering), all observations would have one of two values for morph_odds anyway, meaning that the two representations behave similarly. (The equivalent model using morph_odds yields identical results.) The model does not include a by-participant random slope for stem class, as adding this term does not significantly improve the model (χ² = 3.05, p = .383) and makes the model worse according to the Akaike Information Criterion (AIC), which penalizes model complexity. The final model is possessive ∼ phon_odds + stem class + (phon_odds | participant) + (1 | item). The model was fitted with the glmer function in version 1.1-34 of R’s lme4 package (Bates et al. 2015) using the bobyqa optimizer, which was better at finding converging models than the default Nelder_Mead optimizer.
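The claim that a two-valued morph_odds predictor and a dummy-coded stem class behave alike can be checked directly: with only two levels, any morph_odds value is an affine function of the dummy, so the two linear predictors coincide after reparameterization and yield the same fit. In this Python sketch the two morph_odds values are made-up placeholders, not estimates from the lexicon.

```python
import itertools

# Placeholder morph_odds for the two stem classes (hypothetical values).
M_REGULAR, M_LOWERING = 2.0, -1.5


def eta_morph_odds(b0, b_morph, lowering):
    """Linear predictor with morph_odds as a numeric covariate."""
    return b0 + b_morph * (M_LOWERING if lowering else M_REGULAR)


def to_dummy(b0, b_morph):
    """Equivalent intercept and lowering coefficient under dummy coding."""
    return b0 + b_morph * M_REGULAR, b_morph * (M_LOWERING - M_REGULAR)


def eta_dummy(c0, c_lowering, lowering):
    """Linear predictor with stem class dummy-coded (non-lowering = 0)."""
    return c0 + c_lowering * int(lowering)


for b0, b_morph, lowering in itertools.product((0.5, -1.0), (0.12, 0.9), (False, True)):
    c0, c_low = to_dummy(b0, b_morph)
    assert abs(eta_morph_odds(b0, b_morph, lowering) - eta_dummy(c0, c_low, lowering)) < 1e-12
print("identical linear predictors")
```

Only the coefficients change (the intercept shifts by b_morph × M_REGULAR and the slope is rescaled by M_LOWERING – M_REGULAR); the likelihood, and hence the fit, is the same.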

5.6 Results

In Table 9, we see the effects of the mixed logistic regression predicting participant responses by stem class and phonology, using the phon_odds calculated from the phonological model of the lexicon in Table 6 as described in Section 4.2.2.

Table 9: Effects of mixed logistic model with predictions of the phonological model of the lexicon (Table 6) and stem class for experimental use of possessive -jV, with significant effects italicized.

| Random effects | Variance | SD |
|---|---|---|
| Participant | | |
| Intercept | 0.61 | .78 |
| Phon_odds | 0.01 | .12 |
| Item | 0.51 | .72 |

| Fixed effects | β coef | SE | Wald z | p |
|---|---|---|---|---|
| Intercept | 0.77 | .15 | 5.27 | <.0001 |
| Phon_odds | 0.38 | .03 | 12.43 | <.0001 |
| Stem class (default: non-lowering) | | | | |
| lowering | –0.43 | .13 | –3.21 | .0013 |

This model shows an overall bias towards -jV (since the intercept is positive): there were 1,031 responses of -V and 1,367 of -jV. The results also show evidence of frequency matching—that is, a correspondence between actual rates and rates predicted from a word’s phonology: the β coefficient for phon_odds is positive. However, this coefficient is smaller than 1. This means that the overall range of likelihood predicted by the experimental model is narrower than that predicted by the model of the lexicon: a change of 1 in a word’s predicted log odds of taking -jV according to its phonology corresponds to a change of only .38 in its predicted log odds in the experiment.

Even with this narrowed range, phonology (together with stem class) does not fully predict the experimental results: the marginal R² of the model in Table 9, which is the proportion of the variance described by the fixed effects, is .367. The random intercept for item likewise shows that different words were given fairly divergent rates of -jV even after accounting for the fixed effects, and, indeed, the conditional R², which is the proportion of the variance described by both fixed and random effects, is much higher, .556. One example of the imprecision in the model’s fit is [jøs], which has the lowest phon_odds of all the stimuli (–9.70) and is thus predicted by the phonological model of the lexicon in Table 6 to be the least likely to take -jV (0.006%). By a process similar to that described in Section 4.2.2, we can use the phon_odds to calculate the experimental likelihood of -jV predicted by the model in Table 9: 5.2% for non-lowering stem trials. In fact, participants assigned -jV to this word in 6 out of 36 non-lowering stem trials, or 16.7%, a much higher rate. The random intercept for [jøs] makes up some of this difference, adjusting the predicted likelihood of -jV in non-lowering stem trials to 10.1%.
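The [jøs] calculation can be approximated from the rounded fixed effects in Table 9 with a short Python sketch. With these rounded values the population-level prediction for non-lowering trials comes out at about 5.1% rather than 5.2%, presumably because the text uses unrounded estimates. The item's random intercept is left as a parameter, since its estimate is not reported here; participant effects are set to zero.

```python
import math


def inv_logit(x):
    """Inverse logit, equivalent to e^x / (1 + e^x)."""
    return 1 / (1 + math.exp(-x))


# Rounded fixed effects from Table 9.
B_INTERCEPT = 0.77
B_PHON = 0.38
B_LOWERING = -0.43


def predicted_jv(phon_odds, lowering=False, item_intercept=0.0):
    """Experimental P(-jV) from the Table 9 fixed effects.

    item_intercept is the word's random intercept; 0.0 gives the
    population-level prediction. Participant effects are set to 0.
    """
    eta = B_INTERCEPT + B_PHON * phon_odds + item_intercept
    if lowering:
        eta += B_LOWERING
    return inv_logit(eta)


jos = -9.70  # phon_odds of [jøs] from the lexicon model (Table 6)
print(f"lexicon model: {inv_logit(jos):.4%}")     # about 0.0061%
print(f"Table 9 model: {predicted_jv(jos):.1%}")  # about 5.1% with rounded coefficients
print(f"observed:      {6 / 36:.1%}")             # 6 of 36 non-lowering trials
```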

The random intercept for participant similarly shows that different participants had different baseline rates of -jV, but the by-participant random slope for phon_odds has a low variance, suggesting that participants treated a nonce word’s phonology in similar ways. Including this random slope significantly improves the model (χ² = 15.99, p < .001).

Stimuli (e.g. [huːʃɒkː]) presented as regular stems (with plural [huːʃɒkː-ok]) were assigned -jV ([huːʃɒkː-jɒ]) 58.1% of the time (1,090 out of 1,876 trials), while participants assigned -jV to stimuli slightly less often when they were presented as lowering stems (with plural [huːʃɒkː-ɒk]), 53.1% of the time (277 of 522 trials). This difference may seem small, but it is significant in the model in Table 9: an equivalent model without stem class performs significantly worse (χ² = 9.96, p = .002). The effect sizes of the two models are otherwise very similar, suggesting that the effect of stem class is orthogonal to that of phonology, that is, that speakers take both phonological properties and stem class into consideration separately when providing the possessive of a nonce word, as predicted.

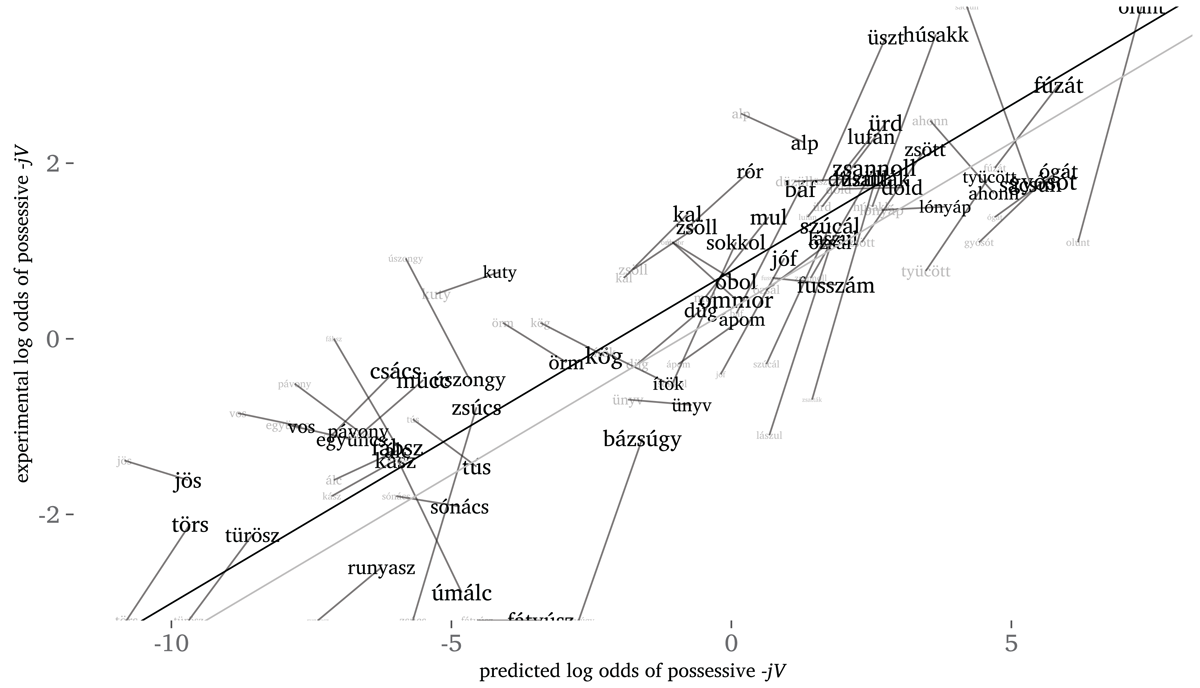

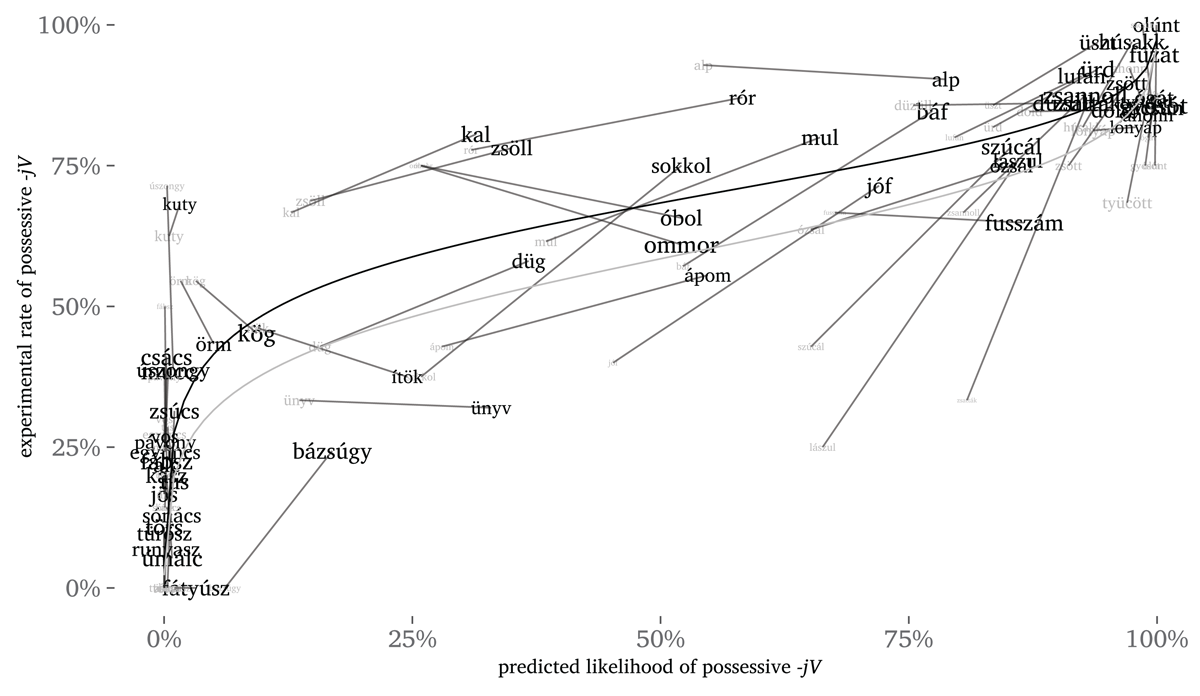

Figure 3 shows the relationship between the predicted likelihood (according to the phon_odds and stem class) of each nonce word taking -jV and its experimental rate of -jV. Both axes are shown in terms of log odds (that is, coefficients), making the relationship linear. Rates of 0 and 1 correspond to log odds of negative infinity and infinity, respectively—so, for example, the nonce word fátyúsz [faːcuːs], which speakers assigned -V in every trial, should be at negative infinity. It is included at the bottom edge of the graph in Figure 3. Likewise, words always assigned -jV in one condition are at the top edge of this graph. The graph shows each nonce word twice, representing trials when it was presented as a regular stem (in black), and a lowering stem (in gray). The size of each word represents the number of trials in which it appears. The lowering stem words are always smaller than the regular words because each word appeared in fewer lowering stem trials than regular stem trials. A line connects the two conditions for each word, going leftward from the regular word to the lowering stem word because the model predicts a lower likelihood of -jV for lowering stems. Figure 4 shows the same data plotted on scales of raw probability. The graphs show lines corresponding to the fit of the model in Table 9 for regular (black) and lowering stem (gray) conditions.

Figure 3: The relationship between predicted and experimental log odds of possessive -jV for individual nonce words presented with regular (black) and lowering (gray) plurals, sized according to number of trials, with a line showing the fits of the experimental model by stem class in Table 9.

Figure 4: The relationship between predicted likelihood and experimental rate of possessive -jV for individual nonce words presented with regular (black) and lowering (gray) plurals, sized according to number of trials, with a line showing the fits of the experimental model by stem class in Table 9.
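Placing each nonce word in Figure 3 requires turning its observed rate of -jV into log odds, which are infinite for rates of 0 or 1. A minimal Python sketch of one way to do this, pinning those cases to a fixed edge value; the edge value of ±6 is an arbitrary assumption, not the value used for the figures.

```python
import math


def empirical_log_odds(n_jv, n_trials, edge=6.0):
    """Log odds of the observed -jV rate for one word in one condition.

    Rates of 0 and 1 have log odds of -inf and +inf, so they are pinned to
    -edge and +edge, i.e. drawn at the bottom or top edge of the plot.
    """
    if n_trials <= 0:
        raise ValueError("need at least one trial")
    if n_jv == 0:
        return -edge
    if n_jv == n_trials:
        return edge
    p = n_jv / n_trials
    return math.log(p / (1 - p))


# Hypothetical word assigned -V in all of 40 trials, and 6 of 36 (the [jøs] non-lowering count).
print(empirical_log_odds(0, 40), round(empirical_log_odds(6, 36), 2))
```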

The main findings of Table 9 can be seen in Figure 3. First, the log odds of -jV predicted from the lexicon map well onto the experimental log odds: the relationship is a tight linear fit. Second, the graph shows the effect of stem class: most of the lines in these figures slope downward and to the left, indicating that most nonce words had a lower experimental rate of -jV when presented as a lowering stem. Figure 4 shows that the experimental results are less extreme than the predicted likelihood, especially on the low end: nouns ending in palatals and sibilants, which nearly categorically take -V in the lexicon and thus had a near-zero predicted likelihood of -jV, were assigned -jV in the experiment up to nearly 50% of the time, or even higher in the case of kuty [kuc].¹⁷ These stimuli also show a less reliable effect of stem class than those with higher predicted rates of -jV.

5.7 Discussion

I interpret the results presented in Section 5.6 as supporting the main hypothesis of this study: participants show frequency matching effects both for phonological properties of the stimuli—as in previous nonce word studies—and for stem class. In this section, I discuss some of my analytical choices and other outstanding issues, and argue that this interpretation is empirically and methodologically justified and robust across different representations of the Hungarian lexicon.

5.7.1 Model construction

As described in Section 5.5, if speakers are applying the distribution of the lexicon to nonce words as I hypothesize, then the complex model of the lexicon (with both phonological factors and stem class as predictors of whether a noun takes -jV) should be the best predictor of the experimental results (whether participants assign -jV to nonce words). However, the full lexicon model in Table 5 (assigning phon_morph_odds to nonce words) does worse at predicting experimental results than the lexicon model in Table 6, which only includes phonological factors and not stem class (assigning phon_odds).

Table 9 shows that stem class does have an effect. The model with phon_morph_odds fails, however, because, relative to its strength in the lexicon, the effect of stem class is weakened more in the experiment than the phonological effects are. We can see this from the fixed effects in Table 9. The effect size of phon_odds in this model is .38, meaning that the effect of the phonological factors was 38% as strong experimentally as in the lexicon (see Section 5.6). The effect size of stem class in the model of the experimental results is –.43, while in the model of the lexicon (Table 5), it is –3.71. Thus, the effect of stem class is –.43/–3.71 = .116, or 11.6%, as strong experimentally as in the lexicon. Accordingly, a model with phon_morph_odds predicting experimental results must split the difference between accurately capturing the relative strength of the phonological effects (thus overweighting stem class) and accurately capturing the relative strength of stem class (thus underweighting the phonological factors). Including phon_odds and stem class as separate factors gives the model a degree of freedom enabling it to capture the difference in strength between the two types of factors. In the next section, I propose interpretations for these strength differences that methodologically justify the degree of freedom.
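The split-the-difference argument can be made concrete with the rounded estimates above. This Python sketch treats phon_morph_odds, as a simplification, as phon_odds plus the lexicon's stem class coefficient, and asks what a single experimental slope b on that combined predictor implies for each type of factor.

```python
# Rounded estimates quoted in Sections 5.6 and 5.7.1.
PHON_SLOPE = 0.38        # experimental coefficient of phon_odds (Table 9)
STEM_EXPERIMENT = -0.43  # experimental coefficient of lowering (Table 9)
STEM_LEXICON = -3.71     # lexicon coefficient of lowering (Table 5)

# Relative strength of stem class in the experiment vs. the lexicon.
stem_ratio = STEM_EXPERIMENT / STEM_LEXICON
print(f"stem class: {stem_ratio:.1%} as strong as in the lexicon")


def implied_effects(b):
    """One slope b on phon_morph_odds (simplified here as phon_odds + STEM_LEXICON * lowering)
    scales phonology by b and implies a stem class effect of b * STEM_LEXICON."""
    return b, b * STEM_LEXICON


for b in (PHON_SLOPE, stem_ratio):
    phon_scale, stem_effect = implied_effects(b)
    print(f"b = {b:.3f}: phonology x{phon_scale:.3f}, "
          f"stem class {stem_effect:+.2f} (observed {STEM_EXPERIMENT:+.2f})")
```

With b = .38 the implied stem class effect is about –1.41, more than three times the observed –.43; with b ≈ .116 the stem class effect matches, but phonology is scaled by .116 rather than .38.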

5.7.2 How did speakers match the frequency of the lexicon?

In both phonology and stem class, participants applied patterns from the lexicon to the possessive of nonce words, but in less extreme form. The difference is particularly stark for nouns ending in sibilants and palatals, which nearly categorically take -V in the lexicon but were assigned -jV in the experiment in a substantial minority of trials. One likely source of this discrepancy is a task effect: the forced choice task makes alternatives more salient, leading participants to select “unlikely” possessives more often than they would in normal production.¹⁸ There is also likely some noise. The rate of discarded trials where participants chose suffixes with the wrong vowel harmony for the stem (141 out of 4,305; see Section 5.4.2), while low, suggests occasional inattention or random guessing, and a similar number of trials presumably include a randomly chosen answer that was not filtered out.

One relatively minor factor is individual differences between participants. Although participants differed in their propensity to assign -jV (the random intercept for participant has substantial variance), they differed little in how strongly a nonce word’s phonology affected their choices (the by-participant random slope for phon_odds has a low variance).

The low rate of harmony mismatches can be contrasted with the much higher rate of stem class mismatches. Despite my study design, which forced participants to pay attention to the presented plural suffix, participants were reluctant to accept nonce lowering stems in particular: nearly half (478 of 1,003) of all trials in which the stimulus was presented with a lowering stem plural (e.g. -ɒk) were discarded because the participant assigned it a non-lowering 1sg suffix (e.g. -om), although individuals did so at different rates (see Section 5.4.2). This means that lack of consideration for (or rejection of) the experimental manipulation plays a larger role specifically with the factor of stem class, which would make its effect on the experimental results weaker. Thus, looking at quantitative evidence from discordant trials, we would expect the effect of stem class to be weaker than that of the phonological factors. This is what we find.

There is another reason to treat stem class separately from the phonological factors and to expect its effect to be attenuated. Participants had to choose between suffixed forms, meaning that they were directly confronted with the phonological form of each stimulus when selecting a possessive. In contrast, information about stem class is only available from other forms, so speakers are not explicitly reminded of it when selecting the possessive. These are two different types of information, and the former should have a stronger effect, because it is more immediate.

The treatment of stem class as separate from phonological factors pursued in this study is thus the most accurate way to represent this factor. Accordingly, the experimental results are valid support for my hypotheses: participants applied gradient patterns from the lexicon, counter to the claim by Rácz & Rebrus (2012), discussed in Section 2.2, that novel words categorically take one possessive suffix (-V for nouns ending in palatals and sibilants, -jV otherwise). In particular, participants applied the generalization, present in their lexicon, that lowering stems (which take plural suffixes like -ɒk) are more likely to take -V in the possessive. The relatively weak experimental correlation is expected, since there is good reason to believe that the experiment was only able to detect this correlation quite weakly—that is, the difference in strength between the effects of phonology and stem class is a result of the experiment rather than a fact about Hungarian speakers’ grammars.

5.7.3 Are speakers generalizing over roots or stems?

My decision to separate phonology and stem class in my analysis was practical. However, my results may also be shaped by my theoretically grounded choice to calculate the phonological effects using a corpus of monomorphemic words, which captures the assumption that lexical items are stored as roots. In Section 4.2.3, I compared this corpus with a stem-based corpus that counts derived forms and compounds separately.19 I conclude my discussion of the nonce word study by briefly discussing which corpus is a better fit for the experimental results. I suggest that participants are roughly matching the frequency of the lexicon counting roots, not stems.

As discussed in Section 4.2.3, the corpora are quite similar in the relative distribution of -V and -jV, and indeed, phon_odds produced by models trained on the two are quite closely correlated (R² = .84), meaning that stimuli predicted to be more likely to take -jV in the model of monomorphemic nouns are also predicted to be more likely to take -jV in the model of all nominals. The corpora differed on two main points. First, the stem-based corpus had a much higher overall rate of -V. Second, the root-based corpus had a higher rate of -jV among alveolar plosives than labial plosives, while the stem-based corpus showed the opposite pattern. In Table 10, I compare the proportions of -jV in the corpora of monomorphemic nouns and all nominals from Table 8 with the experimental results. The effect of place is inconclusive: the experimental rate of -jV is roughly equal for labial and alveolar plosives, matching neither of the corpora. The baseline rate of -jV in the experiment, however, is much more in line with the predictions of the root-based corpus: participants assigned -jV 79.8% of the time to stimuli ending in non-palatal plosives, much closer to the rate of 80.3% in the corpus of monomorphemic nouns than the 35.7% rate across all nominals.20 The baseline rate of -jV suggests that participants, on the whole, were mirroring the frequencies of the root-based corpus.

More sophisticated statistical analysis is less conclusive. On the one hand, the phon_odds produced by the stem-based corpus are better predictors of the experimental results than the root-based phon_odds: the equivalent of Table 9 using the stem-based corpus yields a better model (χ² = 7.89) of the experimental results than that in Table 9. However, there are two reasons to question this as support for the stem-based model. First, these models have a free intercept parameter that sets the baseline, so they do not penalize the stem-based phon_odds for the large difference in baseline rate of -jV shown in Table 10. Second, the better performance of the stem-based phon_odds seems to be an artifact of the surprising behavior of nominals ending in palatals and sibilants: when these are removed, the root-based phon_odds yield a better-fitting model (χ² = 10.87). The issue requires further study, but the comparisons in this section tentatively suggest that Hungarian speakers are counting over roots and affixes, not complex stems. This root-based storage, used in Distributed Morphology (e.g. Halle & Marantz 1993; Embick & Marantz 2008), fits with the theory of morphological dependencies presented in Section 6.

Table 10: Experimental frequency of -jV responses compared with type frequency of -jV in Papp (1969) for nouns ending in non-palatal plosives, by place of articulation (see Table 8).

| place of articulation | experiment: -V (responses) | experiment: -jV (responses) | experiment: % -jV | lexicon, monomorphemic nouns: % -jV (types) | lexicon, all nominals: % -jV (types) |
|---|---|---|---|---|---|
| labial plosive | 11 | 72 | 86.7% | 76.8% | 58.2% |
| alveolar plosive | 47 | 339 | 87.8% | 96.5% | 47.9% |
| velar plosive | 81 | 137 | 62.8% | 64.0% | 20.7% |
| total | 139 | 548 | 79.8% | 80.3% | 35.7% |
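As a check on the arithmetic in Table 10, the short Python sketch below recomputes the experimental % -jV column from the response counts. It uses only the counts shown in the table. Note that the total row pools responses across places of articulation rather than averaging the three percentages, so places with more responses (here, alveolar plosives) weigh more heavily in the baseline rate.

```python
# Experimental response counts from Table 10: (number of -V, number of -jV).
COUNTS = {
    "labial plosive": (11, 72),
    "alveolar plosive": (47, 339),
    "velar plosive": (81, 137),
}


def percent_jv(n_v, n_jv):
    """Share of -jV responses, as a percentage of all -V and -jV responses."""
    return 100 * n_jv / (n_v + n_jv)


def table_rows(counts):
    """Per-place percentages plus a total row pooled over all responses."""
    rows = {place: round(percent_jv(v, jv), 1) for place, (v, jv) in counts.items()}
    total_v = sum(v for v, _ in counts.values())
    total_jv = sum(jv for _, jv in counts.values())
    rows["total"] = round(percent_jv(total_v, total_jv), 1)
    return rows


for place, pct in table_rows(COUNTS).items():
    print(f"{place}: {pct}% -jV")
```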

6 Sublexicon models and morphological dependencies

To account for the frequency matching results in Section 5.6, we need a theory that can apply patterns of allomorphy from the lexicon productively to new words. In particular, this theory must be able to learn morphological patterns like the correlation between lowering stems and possessive -V. In Section 1.1, I argued that diacritic features on lexical items provide a convenient symbolic representation for lexically specific morphological behavior that can be invoked in generalizations. In this section, I sketch out such a theory, based on the sublexicon model of phonological analogy (Gouskova et al. 2015). I extend this basic model with a novel set of morphological constraints that allow for generalizations over cases of lexically specific allomorphy. As before, I assume Distributed Morphology (Halle & Marantz 1993), which uses root-based storage and diacritic features.

6.1 The basic sublexicon model

The sublexicon model (Allen & Becker 2015; Gouskova et al. 2015; Becker & Gouskova 2016) captures phonological generalizations over lexically specific variation. This allows learners to pick up on the partial phonological predictability of a given lexical item’s choice of allomorph. As such, it follows in the path of previous models, like the Minimal Generalization Learner (Albright & Hayes 2003), that use phonological analogy to determine the set of lexical items to which a given morphophonological rule applies (see Guzmán Naranjo 2019 for an overview).

In the sublexicon model, the learner divides the lexicon into sublexicons whose members pattern together. These sublexicons correspond to the morphological features described in Section 3: [+lower] for lowering stems, [–lower] for other consonant-final nouns, [–j] for nouns that take possessive -V, and [+j] for nouns that take -jV. These groupings are shown in (7):

- (7) Lexical entries for Hungarian nouns
  - a. [+lower]: /vaːlː[+lower,–j]/ ‘shoulder’, /hold[+lower,+j]/ ‘moon’, …
  - b. [–lower]: /dɒl[–lower,–j]/ ‘song’, /tʃont[–lower,+j]/ ‘bone’, …
  - c. [+j]: /tʃont[–lower,+j]/ ‘bone’, /hold[+lower,+j]/ ‘moon’, …
  - d. [–j]: /dɒl[–lower,–j]/ ‘song’, /vaːlː[+lower,–j]/ ‘shoulder’, …
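The cross-classification in (7) can be illustrated with a minimal Python sketch, assuming each root is stored with a dictionary of its diacritic features (a representation chosen here purely for illustration; the function name `sublexicon` is hypothetical). Because sublexicons are defined by feature values, each root falls into exactly two of them, one per feature.

```python
# Toy lexicon: the four roots from (7) with their diacritic features.
LEXICON = {
    "vaːlː": {"lower": "+", "j": "-"},  # 'shoulder'
    "hold": {"lower": "+", "j": "+"},   # 'moon'
    "dɒl": {"lower": "-", "j": "-"},    # 'song'
    "tʃont": {"lower": "-", "j": "+"},  # 'bone'
}


def sublexicon(lexicon, feature, value):
    """Return the roots whose diacritic `feature` has `value` (e.g. 'j', '+')."""
    return [root for root, feats in lexicon.items() if feats[feature] == value]


for feature in ("lower", "j"):
    for value in ("+", "-"):
        print(f"[{value}{feature}]:", sublexicon(LEXICON, feature, value))
```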

6.2 Sublexical phonotactic grammars

Hayes & Wilson (2008) present a model of phonotactic learning in which a learner captures generalizations over a language’s surface forms through a constraint-based phonotactic grammar. In their proposal, the learner keeps track of sounds or sequences of sounds (defined in terms of features) that are rare or absent in the lexicon and proposes constraints against them, weighting them in accordance with the strength of the generalization. For example, geminate consonants in Hungarian generally do not appear in clusters, especially within a morpheme, so the phonotactic learner trained on my corpus of monomorphemic nouns generates strong phonotactic constraints penalizing geminate consonants adjacent to other consonants: *[–syllabic,+long][–syllabic] and *[–syllabic][–syllabic,+long].21 This is how the speaker knows that clusters with geminates are unlikely in Hungarian.
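To make the constraint notation concrete, the sketch below counts violations of the two geminate constraints in a segmented form. The feature table is a hypothetical toy covering only a handful of segments and only the features [syllabic] and [long]. Only the evaluation of constraints is shown; the induction of constraints and weights described by Hayes & Wilson (2008) is not modeled. The forms /ɒlːtɒ/ and /ɒtlːɒ/ are invented strings used only to show a violation.

```python
# Hypothetical toy feature table: only the features the two constraints mention.
FEATURES = {
    "ɒ": {"syllabic": "+", "long": "-"},
    "aː": {"syllabic": "+", "long": "+"},
    "v": {"syllabic": "-", "long": "-"},
    "lː": {"syllabic": "-", "long": "+"},
    "t": {"syllabic": "-", "long": "-"},
}

# A constraint is a sequence of natural classes (partial feature specifications).
GEMINATE_THEN_C = ({"syllabic": "-", "long": "+"}, {"syllabic": "-"})  # *[–syllabic,+long][–syllabic]
C_THEN_GEMINATE = ({"syllabic": "-"}, {"syllabic": "-", "long": "+"})  # *[–syllabic][–syllabic,+long]


def matches(segment, natural_class):
    """True if the segment has every feature value the class specifies."""
    return all(FEATURES[segment][f] == v for f, v in natural_class.items())


def count_violations(segments, constraint):
    """Count substrings of `segments` whose segments match the constraint's classes in order."""
    n = len(constraint)
    return sum(
        all(matches(seg, cls) for seg, cls in zip(segments[i:i + n], constraint))
        for i in range(len(segments) - n + 1)
    )


print(count_violations(["v", "aː", "lː"], GEMINATE_THEN_C))      # 0 ('shoulder')
print(count_violations(["ɒ", "lː", "t", "ɒ"], GEMINATE_THEN_C))  # 1 (invented form)
print(count_violations(["ɒ", "t", "lː", "ɒ"], C_THEN_GEMINATE))  # 1 (invented form)
```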

The sublexicon model extends the notion of phonotactic learning to capture generalizations over subsets of the lexicon that pattern together—that is, sublexicons. The learner induces a phonotactic grammar for each sublexicon, capturing patterns specific to that sublexicon. As discussed in Section 4.2.3, Hungarian nouns ending in sibilants and palatals categorically take possessive -V, while nouns ending in vowels always take -jV. The sublexical grammar for the [+j] sublexicon should include heavily weighted constraints penalizing final sibilants and palatals, and the [–j] sublexicon should penalize word-final vowels.

These sublexical grammars are then reflected in speakers’ behavior. When a speaker wishes to form the possessive of a novel word, they evaluate the stem against each sublexicon’s grammar, and each sublexical grammar yields a penalty score for that word. The better a word fares on the [+j] sublexical grammar relative to the [–j] grammar, the more likely it is to be placed into the [+j] sublexicon, and thus to take -jV.

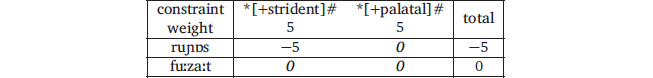

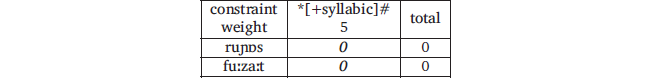

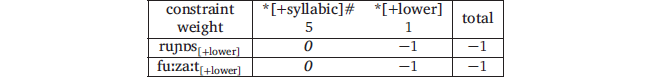

In Figure 5 and Figure 6, we see two nonce words from the experiment in Section 5, runyasz [ruɲɒs] and fúzát [fuːzaːt], evaluated on toy sublexical grammars with the constraints described above. Here, [ruɲɒs] is penalized in the [+j] sublexicon by *[+strident]#, which penalizes word-final sibilants, but is not penalized in the [–j] sublexicon by the constraint against word-final vowels; [fuːzaːt] accrues no penalties. I assume that all three constraints have a weight of 5.

Since [ruɲɒs] has a better score on the [–j] sublexical grammar than on the [+j] sublexical grammar, it is much more likely to be placed into the former and form its possessive with -V. Specifically, this is a maximum entropy model (Hayes & Wilson 2008): a word’s likelihood of being placed into a sublexicon is proportional to e raised to the power of its negative score. Here, the probability of [ruɲɒs] being assigned to the [+j] sublexicon is $e^{-5}/(e^{0}+e^{-5}) \approx .0067$, i.e. .67%. On the other hand, since [fuːzaːt] has the same score on both sublexicons, it has a 50% chance of being assigned to each.
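The assignment step can be sketched in a few lines of Python. This is a minimal illustration assuming the toy grammars of Figures 5 and 6: all three constraints have weight 5 and, for simplicity, are checked only against the word’s final segment. The segment classes, the function names, and the label *[palatal]# for the constraint against final palatals are hypothetical choices made for the example.

```python
import math

# Hypothetical toy segment classes (not a full Hungarian feature system).
SIBILANTS = {"s", "ʃ", "z", "ʒ", "ts", "tʃ"}
PALATALS = {"ɲ", "c", "ɟ", "j"}
VOWELS = {"ɒ", "aː", "e", "eː", "i", "iː", "o", "oː", "u", "uː", "y", "yː", "ø", "øː"}

# Each sublexical grammar: (constraint name, weight, violation test on the final segment).
GRAMMARS = {
    "+j": [("*[+strident]#", 5.0, lambda seg: seg in SIBILANTS),
           ("*[palatal]#", 5.0, lambda seg: seg in PALATALS)],
    "-j": [("*V#", 5.0, lambda seg: seg in VOWELS)],
}


def score(segments, grammar):
    """Weighted sum of the constraint violations incurred by the word's final segment."""
    final = segments[-1]
    return sum(weight for _, weight, violated in grammar if violated(final))


def sublexicon_probs(segments):
    """Maxent assignment: P(sublexicon) is proportional to e^(-score)."""
    scores = {name: score(segments, g) for name, g in GRAMMARS.items()}
    z = sum(math.exp(-h) for h in scores.values())
    return {name: math.exp(-h) / z for name, h in scores.items()}


runyasz = ["r", "u", "ɲ", "ɒ", "s"]
fuzat = ["f", "uː", "z", "aː", "t"]
print(round(sublexicon_probs(runyasz)["+j"], 4))  # 0.0067
print(sublexicon_probs(fuzat)["+j"])              # 0.5
```

Normalizing over the two sublexicons is what turns a penalty difference of 5 into a probability of about .67% for [ruɲɒs], while equal scores yield exactly 50% for [fuːzaːt].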

The sublexicon model is designed to capture generalizations over the phonological shape of each sublexicon’s members. In Section 5, I showed that speakers also observe a morphological generalization: lowering stems are more likely to have possessive -V. In the feature-based analysis of Section 3, this means that [+lower] and [–j] are likely to co-occur on lexical items, as stated in (3). In the next section, I extend the sublexicon model to accommodate these relations.

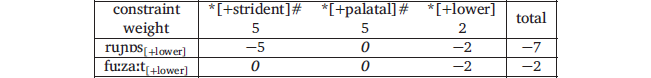

6.3 A sublexicon model with morphology

In my proposal, each sublexicon’s grammar has constraints penalizing diacritic features alongside those penalizing phonological features. For example, every member of the [+j] sublexicon has [+j] (by definition), but very few have [+lower], since lowering stems rarely take -jV. Since [+lower] is underrepresented in the [+j] sublexicon, the [+j] sublexical grammar should contain a heavily weighted constraint *[+lower] penalizing nouns with both [+lower] and [+j]. The [–j] sublexicon, comprising words that take -V, will also have a *[+lower] constraint, since lowering stems are also uncommon among these words. However, this constraint will not be as strong, since lowering stems are better represented among -V words than -jV words.

Figure 7 and Figure 8 show the evaluation of our two nonce words on the toy grammars, now containing *[+lower]. This constraint has a heavier weight in the [+j] grammar than in the [–j] grammar (2 and 1, respectively). Here, the speaker knows that the plurals of these words are [ruɲɒs-ɒk] and [fuːzaːt-ɒk], so she has marked both with [+lower].

The *[+lower] constraint lowers the likelihood of [+j] (and possessive -jV) being assigned to /ruɲɒs[+lower]/ slightly further, from .67% to $e^{-7}/(e^{-1}+e^{-7}) \approx .0025$, i.e. .25%. For /fuːzaːt[+lower]/, the effect is more visible: the likelihood of [+j] goes from 50% to $e^{-2}/(e^{-1}+e^{-2}) \approx .269$, i.e. 26.9%. This shows how the sublexicon model can accommodate the effects found in the nonce word experiment, both phonological and morphological: nonce words ending in sibilants are less likely to be assigned -jV (that is, to be placed in the [+j] sublexicon), as are words presented as lowering stems. These effects can all be assessed in a single calculation, correctly allowing them to compound or cancel out.
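The calculation with the morphological constraint can be sketched as follows. This minimal Python illustration assumes the toy weights of Figures 7 and 8: the phonological constraints keep weight 5, and *[+lower] has weight 2 in the [+j] grammar and 1 in the [–j] grammar. For simplicity, a word is represented by the class of its final segment plus the set of diacritic features the speaker has assigned it; these representations, the function names, and the label *[palatal]# are illustrative assumptions.

```python
import math

# Toy weights: phonological constraints weight 5; *[+lower] weight 2 in [+j], 1 in [-j].
GRAMMARS = {
    "+j": {"*[+strident]#": 5.0, "*[palatal]#": 5.0, "*[+lower]": 2.0},
    "-j": {"*V#": 5.0, "*[+lower]": 1.0},
}


def violated(final_class, diacritics):
    """Names of the constraints a word violates (hypothetical simplification)."""
    names = set()
    if final_class == "sibilant":
        names.add("*[+strident]#")
    if final_class == "palatal":
        names.add("*[palatal]#")
    if final_class == "vowel":
        names.add("*V#")
    if "+lower" in diacritics:
        names.add("*[+lower]")
    return names


def sublexicon_probs(final_class, diacritics):
    """Maxent assignment: P(sublexicon) is proportional to e^(-score)."""
    names = violated(final_class, diacritics)
    scores = {
        sub: sum(w for c, w in grammar.items() if c in names)
        for sub, grammar in GRAMMARS.items()
    }
    z = sum(math.exp(-s) for s in scores.values())
    return {sub: math.exp(-s) / z for sub, s in scores.items()}


# runyasz ends in a sibilant; fúzát ends in a non-palatal plosive.
print(round(sublexicon_probs("sibilant", {"+lower"})["+j"], 4))  # 0.0025
print(round(sublexicon_probs("other", {"+lower"})["+j"], 3))     # 0.269
```

Because the diacritic constraint is just another weighted term in each sublexicon’s score, phonological and morphological evidence are combined by simple addition before normalization, which is how their effects compound or cancel out.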

The sublexicon model presented in this section has two major theoretical benefits as a tool for capturing morphological dependencies. First of all, it does not require any additional theoretical mechanisms beyond those already proposed for well-established experimental effects (phonotactic constraints penalizing underrepresented feature combinations). Second, it relies on diacritic features, a common theoretical construct (though not universally accepted; see Section 3.3), making the sublexicon model compatible with theories of morphology that commonly use diacritic features—in my case, Distributed Morphology.

7 Conclusion

The study presented in this paper is intended as a beachhead for experimental study of morphological dependencies. The results are a proof of concept for a new experimental paradigm: a nonce word study in which stimuli are presented with varying morphological behavior. Hungarian speakers were shown to be sensitive to this manipulation: they assigned the possessive suffix -V more frequently to stimuli presented as lowering stems, with plural -ɒk, than to stimuli presented with the more common plural suffix -ok. This finding supports the claims of Ackerman et al. (2009), Ackerman & Malouf (2013), and others: speakers learn lexical correlations between a word’s morphological patterns and use these correlations to infer unknown forms of words.