1 Introduction

Coarticulation, or the overlap between speech gestures, generates contextually-dependent phonetic variants: sounds systematically and predictably take on acoustic features of neighboring segments. For example, when producing an English word like “den”, speakers begin the velum-lowering gesture for the final nasal segment during the preceding vowel. Coarticulation is an inevitable and natural property of fluid speech production, and it is therefore systematic. Coarticulation is important for models of speech perception, since listeners use its presence to process the speech signal more efficiently (Fowler 1984). Anticipatory coarticulation in particular provides temporally early cues in the speech signal about the identity of upcoming sounds, and its presence can be used by listeners during spoken word comprehension. For instance, United States (US) English listeners use anticipatory nasalization present on a vowel to identify the entire word: just by hearing [bı̃], listeners select “bean” rather than “bead” (Ali, Gallagher, Goldstein, & Daniloff 1971; Lahiri & Marslen-Wilson 1991). Furthermore, listeners are sensitive to variation in the strength of coarticulatory cues: monosyllabic words with nasal consonant codas (i.e., CVN(C) words) are recognized faster when their vowels contain strong and temporally early coarticulatory nasality cues, relative to vowels that contain later-occurring, or weaker, coarticulatory cues (Beddor et al. 2013; Scarborough & Zellou 2013; Zellou & Dahan 2019). Studies have also shown that when coarticulatory cues are incorrect, listeners’ perceptual judgments are slowed (e.g., Martin & Bunnell 1981; Marslen-Wilson & Warren 1994; Dahan et al. 2001).

Coarticulation is also central to our scientific understanding of perceptually-based mechanisms of diachronic variation, as listeners’ decontextualization of the acoustic results of coarticulation can lead to sound change (Ohala 1993; Beddor 2009). In particular, coarticulatory variation can be highly multidimensional. For instance, anticipatory nasal coarticulation can result in multiple acoustic effects such as increased vowel nasalization, differences in voice quality, and/or greater formant dynamism (e.g., Chen 1997; Garellek et al. 2016; Carignan 2017; Zellou & Brotherton 2021). The current paper investigates how listeners use multiple acoustic cues associated with coarticulatory vowel nasalization in perceiving oral-nasal lexical contrasts in English. In particular, we compare the perception of vowels from words contrasting in nasal and oral coda consonants (i.e., CVN and CVC words) produced by speaker groups who produce distinct variants of coarticulated vowels (either “Extensive Coarticulators” or “Minimal Coarticulators”) based on evaluations from a prior study (Zellou 2022). Crucially, we explore differences in the perception of these variable realizations of coarticulated vowels across listeners in apparent-time1; and, we also explore changes in perceptual cue weights for multidimensional features of nasal coarticulation across listeners in apparent-time. In doing so, we investigate foundational questions about variation in the weights of multiple coarticulatory cues in production and perception, and test how the perceptual mechanisms of coarticulatory-motivated sound change are realized as systematic and gradual cue-reweighting of multiple features across individuals over time.

In the following sections, we provide an overview of the role of coarticulation in sound change theories (Section 1.1), review multidimensional features for nasal coarticulation (Section 1.2), and introduce the current study (Section 1.3).

1.1 Coarticulation and perceptually-driven sound change

In addition to its role in spoken word comprehension, coarticulation has been at the forefront of theoretical and empirical understandings about the relationship between synchronic phonetic variation and the evolution of phonological patterns in a language over time. For instance, the reanalysis of coarticulation as contrastive nasalization (e.g., VN > ṼN > Ṽ) is recognized as a common historical pathway of sound change (Chen & Wang 1975; Hajek 1997). How do coarticulatory-based sound changes actuate? Understanding the conditions and catalysts for coarticulatory-based sound changes, as well as the pathway and time course by which gradual phonetic shifts occur over time within a speech community, has been the topic of much theoretical work (Weinreich et al. 1968; Beddor 2009; Yu 2023). This is because coarticulation results in an acoustic signal that contains temporally-distributed phonetic cues. For example, a speech signal containing an utterance [ṼN] could be interpreted by a listener as underlyingly /VN/, /ṼN/, or just /Ṽ/. A listener’s processing of the coarticulatory features, parsing the speech signal into discrete gestures, is hypothesized to be a source of innovative phonetic variants and a starting point for historical change in a language (Ohala 1993; Beddor 2009; Harrington 2012).

One view is that listeners perceive the speech signal as a consequence of co-produced articulatory gestures (Fowler 2005). Thus, listeners perceive coarticulation by attributing the acoustic effects of gestural overlap in the speech signal to their articulatory source(s) (Fowler 1984). Listeners have been shown to display behavior indicating they factor out phonetic variation when they can ascribe that information to another source in the signal. For example, listeners’ category boundary of a /da-ga/ continuum shifts toward more alveolar responses when the stimuli are presented in the context of a preceding /ɹ/, suggesting that the perceptual system attributes a lower F3 to the preceding rhotic (Mann 1980; also Mann & Repp 1980 for the influence of vowel coarticulation on sibilant perception). For nasal coarticulation, studies have demonstrated that listeners hear a nasalized vowel in the context of a nasal consonant as phonologically equivalent to oral vowels (e.g., [ṼN] is interpreted by the listener as /VN/, Kawasaki 1986). In this scenario, there is “parity” between the production and perception of coarticulation. This is a phonetically stable condition in that phonological parity has occurred between speaker and listener, a situation that would not lead to phonological change.

However, theoretical models of listener-based diachronic instability propose that since coarticulation results in an ambiguous acoustic signal, sound change can be actuated when listeners and speakers come to different interpretations of an utterance. Ohala’s (1993, inter alia) “innocent misperception” model is one hypothesis for how listener-based coarticulatory processing mechanisms lead to novel percepts due to coarticulation. For instance, if the listener does not fully attribute the coarticulatory variation to its source (e.g., a coarticulatorily nasalized vowel could be analyzed by the listener as phonologically nasal), this can lead to the decontextualization of coarticulation as intrinsic to the vowel. Such a scenario has been argued to be particularly likely if a listener fails to detect the source of coarticulation, such as if the nasal consonant was deleted or obscured in the speech signal for some reason (such as by noise) or if the listener makes a decision about the phonological structure of the vowel before even hearing the final consonant (Beddor 2009; Beddor et al. 2013).

In Ohala’s model, these listener-driven sound change mechanisms are framed as “errorful” (i.e., a listener’s failure to fully correct). In contrast, another perspective is that perceptually-driven sound changes reflect differences in the relative weighting of coarticulatory cues in the speech signal across listeners (e.g., Beddor 2009). Under such a view, coarticulatory-based sound changes are gradient and could reflect either differences in strategies or distinct coarticulatory grammars across individuals. For instance, Beddor (2009) has found that listeners vary in their use of nasal coarticulation in perception; for some US English listeners, vowel nasalization alone has been found to be sufficient to determine that a speaker said “Ben” (rather than “bed”) whereas other listeners require a nasal consonant. She proposes that listeners who are innovative with respect to perceived coarticulation are those who attend more closely to the coarticulatory information and assign less weight to—and perhaps do not require—the coarticulatory source. In this approach, the impetus of sound change is not misperception but rather incremental differences in the relative perceptual importance or weight of the multiple phonetic properties associated with a phonological contrast that occur across individuals.

Such cue-based models of perceptually-driven sound change argue that phonologization of coarticulation occurs along a pathway where, for instance, there is first a contextual relationship between coarticulation and source, but then listeners’ perceptual attention shifts gradually to the presence of the coarticulatory feature alone – whether or not the source is present – as the dominant cue to a contrast (Beddor 2009). This can occur over time as a gradual trade-off between the temporal extent of coarticulation and the strength of the source (Beddor et al. 2007). A final stage occurs when the cue is realized in the complete absence of the source. Beddor et al. (2013) demonstrate this with eye tracking: during the time course of the vowel alone, US English listeners are more likely to look to images depicting the CVN(C) word when presented with enhanced anticipatory nasal coarticulation, than when there was a vowel containing less coarticulation.

Many empirical studies of speech production have found gradient differences in overall degree and extent of coarticulatory nasality across languages; this is consistent with the view that coarticulatory-based sound change involves cue-reweighting mechanisms rather than categorical changes in the status of vowel nasality. For instance, there are weaker nasal coarticulatory patterns in CVN words in Spanish, French, Swedish, Italian, Japanese and Bininj Kunwok relative to that observed in other languages (Ushijima & Hirose 1974; Clumeck 1976; Cohn 1990; Farnetani 1990; Solé 1992; Delvaux et al. 2008; Stoakes et al. 2020). Meanwhile, US English, Sundanese, and Tereno have extensive coarticulatory vowel nasalization, with the velum lowering gesture starting very early in vowels before the nasal coda (Cohn 1990; Ohala 1993). Evidence of cross-dialectal differences in nasal coarticulation within a language indicates that coarticulatory patterns can vary in gradient ways at the speech community level, further supporting the cue-reweighting framework for coarticulatorily-motivated sound change. For instance, Bongiovanni (2021) found that extent of anticipatory vowel nasalization differs across dialects of Spanish: in Dominican Spanish, coarticulation is extensive and “phonologized”, while Argentinian Spanish has less extensive nasalization. These findings are consistent with related work by Solé (2007) suggesting gestural overlap in continental Spanish was a mechanical product of articulation and not a linguistically-specified feature (i.e., /VN/). In a comparison across varieties of Afrikaans, Coetzee et al. (2022) found that Kleurling Afrikaans speakers produce less extensive anticipatory nasal coarticulation than white Afrikaans speakers. 
They also found that individuals — in both communities — who produced more extensive coarticulatory nasality relied more on nasalization during lexical perception, suggesting consistency of cue weighting across a speaker’s production and perception. Patterns of coarticulation have also been shown to vary across dialects of US English: speakers from Philadelphia, Pennsylvania produce more extensive nasal coarticulation than speakers from Columbus, Ohio (Tamminga & Zellou 2015). Even within a speech community, variation in nasal coarticulation across generations has been observed. For instance, Zellou and Tamminga (2014) observed that Philadelphia English speakers born between 1950 and 1965 display a trend of increasing coarticulatory vowel nasalization, yet speakers born after 1965 move towards decreasing coarticulatory nasality; finally, speakers born after 1980 reverse this change, moving again toward producing greater nasal coarticulation. Taken together, these studies show that coarticulatory patterns change in gradient ways within a dialect (see also Carignan et al. 2021), and can even reverse direction.

The current study examines changes within California English, a US English dialect. The most well-documented sound change within California is the “California Vowel Shift”, consisting of the fronting of back vowels and a counterclockwise shifting of the front and low vowels over apparent-time (Eckert 2008). Less well known, but increasingly investigated, is the change in the realization of vowels before nasal codas in California English, involving changes not just in degree of anticipatory nasalization, but also changes in diphthongization, vowel positioning, and other features across speech communities (Brotherton et al. 2019; Carignan & Zellou 2023), as well as over time (Hall-Lew et al. 2015; Podesva et al. 2015), and even across individuals within one social cohort (Zellou & Brotherton 2021).

Under the cue-reweighting framework of listener-based phonetic innovation (i.e., gradual change in the use of an acoustic feature as signaling a lexical contrast), several studies have investigated how the perceptual weighting of coarticulatory features changes across speech communities within a language as the catalyst for phonological change. Kleber et al. (2012) examined /ʊ/-fronting in Standard Southern British English and found younger (ages 18–20 years) and older (ages 50+ years) speakers had distinct perception grammars: younger listeners compensated less for alveolar consonant coarticulation on fronted /ʊ/ than older speakers. These group-level differences were argued to reflect different stages of a sound change: the older listeners reflect a pre-sound change stage where coarticulation is phonetic, while the younger listeners are in a stage where coarticulation has become phonologized. These perceptual differences were argued to be the catalyst for sound changes observed in production whereby older speakers displayed more coarticulatory-induced fronting of /ʊ/ while younger speakers show more categorical /ʊ/-fronting effects (i.e., fronting in both coarticulatory and non-coarticulatory contexts). Thus, the change in perceptual use of coarticulation over time was argued to be a trigger for phonological change in Standard Southern British English.

1.2 Multidimensionality of nasal coarticulation

Nasal coarticulation is a particularly apt phenomenon to examine in exploring the perceptual mechanisms for how sound change actuates. As mentioned, vowel nasalization results in variation across multiple temporal and spectral dimensions. For one, gestural overlap in the velum lowering gesture from a nasal coda onto a preceding vowel could be reanalyzed by a listener as an intrinsically nasalized vowel. Spectrally, this has been shown to be realized as an increase in the amplitude of the “nasal formants” and a dampening of the amplitudes of the “oral formants”, as well as an increase in the width of the formant band, especially for F1 (Chen 1997; Styler 2017). Since the acoustic characteristics of an intrinsically nasalized vowel (e.g., in the case of phonological nasalization, such as French) and a contextually nasalized vowel (i.e., coarticulated) are similar, a listener hearing a nasalized vowel does not know which one it is (unless a source consonant is present in the speech signal and detected).

Additionally, the spectral effects of vowel nasalization are similar to the spectral effects of breathy phonation (Ohala 1974; Matisoff 1975). Specifically, breathiness results in a weakening of the amplitude of the first formant along with an increase in the amplitude of the first harmonic (Garellek et al. 2016). Even though the increased spectral tilt in breathy vowels and nasal vowels stems from different articulatory sources (subglottal vs. velopharyngeal; Ohala 1974; Garellek 2014; Carignan 2017), the acoustic similarity between these features can lead to ambiguity for the listener. Indeed, breathiness on vowels has been reanalyzed by listeners as nasalization (Ohala 1975; Imatomi 2005) and, likewise, nasalization on vowels as breathiness (Blevins & Garrett 1993; Ohala & Busà 1995; Blevins 2004; Garellek et al. 2016).

Moreover, acoustic nasalization on vowels can also perceptually perturb formant frequency patterns in ways that can lead to sound change (Carignan 2014). Nasalization shifts the perceptual center of gravity of the spectrum lower for non-high vowels. A perceived lower F1 can be reanalyzed as due to changes in tongue position for these vowels (Beddor, Krakow, & Goldstein 1986; Krakow, Beddor, & Goldstein 1988; Carignan 2017). Most often, since nasal coarticulation is temporally dynamic, affecting portions of the vowel closer to a nasal consonant, the perceptual influence of nasalization on vowel quality results in the reanalysis of a monophthongal vowel as diphthongal. This can explain many nasality-induced diphthongal effects in vowel systems cross-linguistically (Parkinson 1983; Walker 1984; Baker et al. 2008; Mielke et al. 2017; Zellou & Brotherton 2021).

1.3 Current Study

The aim of the current study is to investigate whether the perceptual use of nasal coarticulation changes over apparent-time in California English. We aim to explore whether there are changes in listeners’ perceptual weighting of cues for nasal coarticulation across apparent-time with California English listeners ranging in age from 18 to 58.

We compared perception of vowels in CVN and CVC words produced by speaker groups whose vowels were either unambiguous to listeners (‘Extensive Coarticulators’) or ambiguous (‘Minimal Coarticulators’) in a prior study (Zellou 2022). This is analogous to the manipulated extensive and minimal coarticulation conditions in prior work (Beddor et al. 2013). Here, our stimuli are non-manipulated speech to reflect naturally occurring variation across several individuals. Moreover, our task is a lexical completion task, similar to that used in prior work (Ali et al. 1971; Ohala & Ohala 1995), which excises the final coda. This is analogous to situations in natural interactions where the coda is obscured (e.g., by noise) or deleted (via hypoarticulation). Excising the coda also allows us to test how our listeners respond in the stage of phonologization where perceptual attention is shifting more toward coarticulation, and away from the source. This approach probes listeners’ use of acoustic cues present on vowels that signal the phonological status of the final consonant as being nasal or oral.

2 Methods

2.1 Stimulus items: Speakers and acoustic analysis

Stimuli for the perception experiment were a subset of the stimuli produced by 60 California English speakers in Zellou (2022), elicited in the frame “__ the word is __” for five CVC-CVN minimal pair sets containing non-high vowels (/ɑ/, /æ/, /ʌ/, /ɛ/, /oʊ/): bod, bon; bad, ban; bud, bun; bed, ben; bode, bone. (We focus on non-high vowels following prior work on nasal coarticulatory patterns in US English; Beddor & Krakow 1999.) The [CV] syllables were excised, amplitude normalized to 60 dB, and gated into wide-band noise (at 5 dB below the peak vowel intensity; Ohala & Ohala 1995).

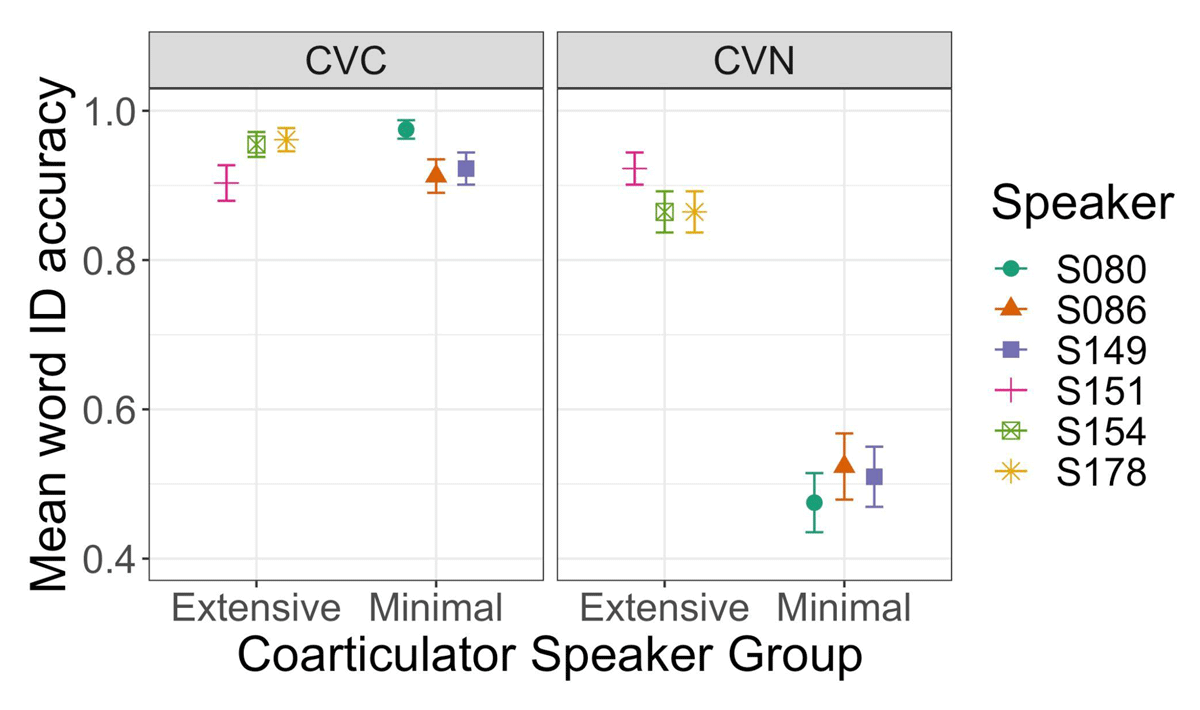

We selected a subset of 6 speakers’ productions based on how their vowels were perceptually categorized by a separate group of 94 young adult listeners (18–28 years old) in Zellou (2022). Since there is an enormous amount of variation within the speech community, we selected several speakers who represent two types of extreme realizations of coarticulatory variation: three speakers who produce unambiguous nasal coarticulation (“Extensive Coarticulators”: S154, S151, S178; aged 20, 18, 20, respectively; 3 F) and three speakers who produce more ambiguous nasal coarticulation (“Minimal Coarticulators”: S149, S086, S080; aged 19, 18, 20, respectively; 2 M, 1 F). For each category, we selected the first contiguous group of n = 3 speakers whose productions were not at ceiling for listeners in the perception study (Figure 7 in Zellou 2022: 41). We selected each speaker’s first production of each word, resulting in a total of 60 stimuli in the present study (10 items × 6 speakers).

Figure 1 shows the categorization accuracy data for the 6 speakers’ vowels, taken from the data in Zellou (2022). As seen, while lexical categorization by listeners from hearing oral vowels (i.e., non-coarticulated, from CVC words) is very high, lexical categorization performance for coarticulated vowels (from CVN contexts) varies. Speakers in the Extensive Coarticulator group produce coarticulated vowels that listeners overwhelmingly recover as originating from a CVN word. On the other hand, speakers in the Minimal Coarticulator group produce vowels in CVN contexts that listeners find ambiguous and thus have more difficulty identifying whether the originally produced coda is oral or nasal. Hence, these two groups of speakers represent distinct types of produced coarticulatory behavior, which we can use as our experimental manipulation to test whether different degrees of coarticulation lead to distinct perceptual patterns across different types of listeners.

Figure 1: Perceptual results for word identification (ID) from Zellou (2022) for the 6 speakers selected for this study, categorized as “Extensive Coarticulators” or “Minimal Coarticulators” based on listener accuracy in identifying the nasal coda based on hearing the vowel alone. Bars indicate standard error of the mean.

As mentioned in the Introduction, we are also interested in how the multidimensional acoustic cues to vowel nasalization are used by listeners. Several acoustic properties of the Minimal and Extensive Coarticulators’ vowels used as stimuli for the perception study were measured. First, A1-P0 is a spectral measure of vowel nasalization quantifying the difference between the amplitude of the low frequency nasal peak, P0, which increases with increasing nasalization, and the amplitude of the first formant peak, A1, which decreases as nasalization increases (Chen 1997). A smaller A1-P0 value correlates with greater vowel nasality (Zellou & Dahan 2019). A1-P0 was measured at 9 equidistant time points across each nasalized vowel starting at 10% of vowel duration and ending at 90% vowel duration with a Praat script (Boersma & Weenink 2018; Styler 2017). Average A1-P0 was calculated as the mean across the 9 time points.
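The sampling and averaging step described above can be sketched as follows. This is an illustrative Python sketch, not the Praat script used for the study; the function names and the example amplitudes are hypothetical, and the A1 and P0 values are assumed to have already been extracted at each time point.

```python
def equidistant_time_points(vowel_start, vowel_end, n_points=9,
                            first=0.10, last=0.90):
    """Return n_points times spaced evenly from `first` to `last`
    (as proportions of vowel duration) within the vowel."""
    duration = vowel_end - vowel_start
    step = (last - first) / (n_points - 1)
    return [vowel_start + (first + i * step) * duration
            for i in range(n_points)]


def mean_a1_p0(a1_db, p0_db):
    """Average A1-P0 (in dB) over paired per-time-point amplitudes.
    Smaller values correspond to greater vowel nasality."""
    if not a1_db or len(a1_db) != len(p0_db):
        raise ValueError("need equal-length, non-empty A1 and P0 lists")
    differences = [a1 - p0 for a1, p0 in zip(a1_db, p0_db)]
    return sum(differences) / len(differences)


# Hypothetical 200 ms vowel: measurement times at 10%, 20%, ..., 90%.
times = equidistant_time_points(0.0, 0.200)
```

With 9 points between 10% and 90%, adjacent measurements are 10% of the vowel duration apart.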

Prior work shows that increasing F1 bandwidth and higher spectral tilt (measured as A3-P0, the relative amplitudes of F3 and the lower nasal formant peak; Styler 2017) are additional spectral features that correlate with increased vowel nasalization. Thus, we took these acoustic measurements at the same 9 equidistant time points for each nasalized vowel (with a Praat script; Styler 2017), and the average value across these points was calculated for each vowel.

Finally, nasalization can also affect vowel qualities, particularly in dynamic ways over the vowel duration (Scarborough et al. 2015; Zellou & Scarborough 2019; Zellou et al. 2020). Therefore, we also measured the spectral rate of change in the formant frequencies (F1 and F2) over the vowel duration. To that end, F1 and F2 measurements were taken at each of the 9 timepoints, then log mean normalized (Barreda 2020). Spectral rate of change (ROC) was calculated using the methods in Fox and Jacewicz (2009: 2606). First, the length of each vowel section (VSL; e.g., timepoint 1 to timepoint 2, timepoint 2 to timepoint 3, etc.) was calculated. Each vowel’s trajectory length (TL), or amount of spectral change, was then calculated as the sum of the four VSLs. The TL of each vowel was then used to calculate its average spectral ROC by dividing the TL by 80% of the vowel’s duration (following Fox and Jacewicz 2009, since we measured only over 80% of the vowel’s duration: 10%–90%). We additionally examined F1 and F2 independently at vowel midpoint (log mean normalized; Barreda 2020).
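The trajectory-length computation can be sketched as below, assuming (following Fox and Jacewicz 2009) that each vowel section length is the Euclidean distance in the F1 × F2 plane between consecutive measurement points. This is an illustrative Python sketch rather than the analysis code for the study; the function names are hypothetical, and the sketch simply sums however many consecutive sections the input contains.

```python
import math


def section_lengths(f1, f2):
    """Vowel section lengths (VSLs): Euclidean distance in the F1 x F2
    plane between each pair of consecutive measurement points."""
    if len(f1) != len(f2) or len(f1) < 2:
        raise ValueError("need equal-length F1/F2 tracks with >= 2 points")
    return [math.hypot(f1[i + 1] - f1[i], f2[i + 1] - f2[i])
            for i in range(len(f1) - 1)]


def trajectory_length(f1, f2):
    """Trajectory length (TL): the sum of the section lengths."""
    return sum(section_lengths(f1, f2))


def spectral_roc(f1, f2, duration_s, measured_fraction=0.80):
    """Average spectral rate of change: TL divided by the portion of the
    vowel's duration spanned by the measurements (10%-90% = 80%)."""
    return trajectory_length(f1, f2) / (measured_fraction * duration_s)
```

The units of the result follow the units of the formant input (normalized values or Hz) per second.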

Table 1 shows the mean acoustic nasality, F1 bandwidth, spectral tilt, F1 (midpoint), F2 (midpoint), and diphthongization values for the nasalized and oral vowel stimuli, averaged across vowel timepoints, as well as mean vowel durations, for the Extensive and Minimal Coarticulator speaker groups. The table also shows the output of separate linear regression models run on each acoustic variable. (Data, models, and code are available for the current study in the Open Science Framework (OSF) repository for the project.2) Each model included effects of Speaker Group (2 levels: Extensive, Minimal; sum-coded) and Context (CVN, CVC; sum-coded), as well as their interaction. Each dependent variable was standardized (i.e., subtracting the mean and dividing by the standard deviation) with the scale() function in R prior to model fitting.
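The preprocessing described above (standardizing each dependent variable and sum-coding the two-level predictors) can be sketched as follows. The models themselves were fit in R; this Python sketch only illustrates the arithmetic. The function names are hypothetical, and treating Extensive and CVN as the +1 levels is an assumption based on the labels Group(Extensive) and Context(CVN) in Table 1.

```python
import statistics


def standardize(values):
    """Z-score: subtract the mean and divide by the sample standard
    deviation (n - 1 denominator, the default behaviour of R's scale())."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def sum_code(level, positive_level):
    """Sum (deviation) coding for a two-level factor: +1 / -1."""
    return 1 if level == positive_level else -1


def design_row(group, context):
    """Predictors for one observation: Group, Context, and their
    interaction (the product of the two sum-coded columns)."""
    g = sum_code(group, "Extensive")  # assumed +1 level
    c = sum_code(context, "CVN")      # assumed +1 level
    return {"group": g, "context": c, "group_x_context": g * c}
```

Under this coding, each main-effect coefficient is half the difference between the two level means, estimated at the grand mean of the other factor.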

Table 1: Mean (and standard deviation) and linear regression results for acoustic measures taken from vowels (for CVNs and CVCs) produced by the Extensive Coarticulators and the Minimal Coarticulators.

| Acoustic measure | Extensive Coarticulators’ mean (sd) | Minimal Coarticulators’ mean (sd) | Summary statistics from regression models |
| --- | --- | --- | --- |
| Acoustic nasality (A1-P0) | CVN: –1.2 (3.1); CVC: 2.6 (2.6) | CVN: –0.3 (2.3); CVC: 0.8 (2.3) | Group(Extensive) [β = 0.3, t = 1.1, p = 0.3]; Context(CVN) [β = –1.2, t = –5.3, p < 0.001]; Gr.*Context [β = –0.7, t = –3.0, p < 0.01] |
| F1 bandwidth | CVN: 256.5 (132); CVC: 149.4 (73) | CVN: 122.2 (70); CVC: 128.2 (72) | Group(Extensive) [β = 0.4, t = 4.8, p < 0.01]; Context(CVN) [β = 0.3, t = 3.5, p < 0.01]; Gr.*Context [β = 0.2, t = 3.1, p < 0.01] |
| F1 (log mean normalized) | CVN: –0.32 (0.07); CVC: –0.31 (0.1) | CVN: –0.36 (0.04); CVC: –0.33 (0.08) | Group(Extensive) [β = 0.1, t = 2.4, p < 0.05]; Context(CVN) [β = –0.1, t = –1.0, p = 0.3]; Gr.*Context [β = 0.04, t = 0.5, p = 0.6] |
| F2 (log mean normalized) | CVN: 0.04 (0.11); CVC: 0.01 (0.08) | CVN: 0.02 (0.11); CVC: –0.002 (0.08) | Group(Extensive) [β = 0.1, t = 0.7, p = 0.5]; Context(CVN) [β = 0.1, t = 1.7, p = 0.09]; Gr.*Context [β = 0.01, t = 0.1, p = 0.9] |
| Spectral tilt (A3-P0) | CVN: –8.4 (14.1); CVC: –7.3 (13.8) | CVN: –16.1 (6.1); CVC: –14.7 (7.3) | Group(Extensive) [β = 0.3, t = 3.9, p < 0.01]; Context(CVN) [β = –0.1, t = –0.6, p = 0.5]; Gr.*Context [β = 0.0, t = 0.1, p = 0.9] |
| Diphthongization (F1/F2 rate of change) | CVN: 5.3 (1.9); CVC: 3.9 (1.1) | CVN: 4.4 (0.9); CVC: 4.0 (1.2) | Group(Extensive) [β = 0.1, t = 1.7, p = 0.1]; Context(CVN) [β = 0.3, t = 3.6, p < 0.01]; Gr.*Context [β = 0.2, t = 2.3, p < 0.05] |
| Vowel duration (sec) | CVN: 0.230 (0.064); CVC: 0.257 (0.054) | CVN: 0.213 (0.057); CVC: 0.244 (0.043) | Group(Extensive) [β = 0.1, t = 1.5, p = 0.1]; Context(CVN) [β = –0.3, t = –2.9, p < 0.01]; Gr.*Context [β = 0.0, t = 0.2, p = 0.9] |

First, we see that nasalized vowels had greater acoustic nasality (lower A1-P0 value), larger F1 bandwidth, greater diphthongization, and shorter duration than oral vowels. We additionally observed some Group differences: Extensive Coarticulators tended to produce even greater F1 bandwidth, higher F1 (lower vowels) and less spectral tilt (higher A3-P0) overall (i.e., in vowels in both CVN and CVC contexts). F1 and F2 independently did not significantly vary in CVC-CVN contexts for our speakers, but note that there was a numeric increase in F2 for nasal coarticulated vowels. Taken together, these cues are consistent with prior work showing that nasalization has an effect on vowel articulations and formant quality (e.g., Beddor 1993; Carignan 2017).

Critically, several interactions between Speaker Group and Context revealed differences in acoustic cues. In CVNs, Extensive Coarticulators produce even greater increases in acoustic nasality (lower A1-P0), F1 bandwidth, and diphthongization. No Speaker Group by Context interactions were observed for spectral tilt, F1, F2, or vowel duration.

Thus, the acoustic analysis indicates that variation in acoustic nasalization, F1 bandwidth, and diphthongization appears to reflect targeted differences in the coarticulated vowels across the Extensive and Minimal Coarticulators, not a property of their vowel articulation in general.

2.2 Listener participants

128 participants were recruited for the study, from Academic Prolific and the UC Davis Psychology subjects pool, but 39 participants were removed from the analysis for various reasons (n = 4 non-native English speakers; n = 3 reported hearing loss; n = 14 did not complete the entire study; n = 16 did not pass the headphone screen; n = 2 did not pass the listening comprehension question). All participants were distinct from the listeners in Zellou (2022).

The final set of participants retained in the present study consisted of 89 L1 US English speakers (52 female, 2 nonbinary, 35 male; age range = 18–58 years old; mean age = 30.2 ± 11.8 years), all of whom indicated they grew up in California and were living in California at the time of the study. We categorized listeners into distinct age groups based on their year of birth, following the generational cohorts of Americans established by prior research (Dimock 2019; younger = “Generation Z”, middle = “Millennial”, older = “Generation X”3). Table 2 provides the breakdown of the count and gender distribution of participants by age group. All participants completed informed consent, and the study was approved by the UC Davis Institutional Review Board. Prolific participants were compensated with $5.25 while Subject Pool participants were compensated with course credit.

Table 2: Age cohort (based on reported age at time of study) and gender of listeners.

| Listener age cohort | Age range (mean age) | Female n | Non-binary n | Male n | Total n |
| --- | --- | --- | --- | --- | --- |
| Younger | 18–25 (20) | 30 | 1 | 14 | 45 |
| Middle | 26–41 (34) | 11 | 1 | 15 | 27 |
| Older | 42–58 (50) | 11 | 0 | 6 | 17 |
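As a consistency check on the participant counts reported in this section, the exclusions and cohort tallies can be reproduced with a short sketch. The numbers are copied from the text and Table 2; the variable and function names are hypothetical, and binning by reported age (rather than year of birth, which was the basis for the actual categorization) is a simplifying assumption.

```python
RECRUITED = 128
EXCLUSIONS = {
    "non-native English speaker": 4,
    "reported hearing loss": 3,
    "did not complete the study": 14,
    "failed headphone screen": 16,
    "failed listening comprehension question": 2,
}

# Age ranges and counts as reported in Table 2.
COHORT_AGE_RANGES = {"Younger": (18, 25), "Middle": (26, 41), "Older": (42, 58)}
COHORT_COUNTS = {
    "Younger": {"female": 30, "nonbinary": 1, "male": 14},
    "Middle": {"female": 11, "nonbinary": 1, "male": 15},
    "Older": {"female": 11, "nonbinary": 0, "male": 6},
}


def age_cohort(age):
    """Map a reported age to its cohort label using the Table 2 ranges."""
    for label, (low, high) in COHORT_AGE_RANGES.items():
        if low <= age <= high:
            return label
    raise ValueError(f"age {age} is outside the sampled range")


retained = RECRUITED - sum(EXCLUSIONS.values())
cohort_totals = {label: sum(counts.values())
                 for label, counts in COHORT_COUNTS.items()}
```

Here `retained` and the sum of `cohort_totals` both come to the 89 listeners reported above.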

2.3 Perception task procedure

Participants completed the experiment from home, using a Qualtrics survey. First, participants completed a sound level check, where they heard a sentence (“Bill heard we asked about the host”; amplitude normalized to 65 dB) and were asked to adjust their sound to a comfortable listening level (they could replay this audio file as many times as they liked). Then they were asked to indicate the final word from a list of phonological competitors (“host”, “coast”, “toast”). Next, they completed a headphone check (adapted from Woods et al. 2017), where participants heard a series of three tones presented to each ear (amplitude normalized to 65 dB). One of the three tones had the opposite phase presented to each ear. If participants indicated that the opposite-phase tone was quieter, this indicated that they were not wearing headphones4. If they responded incorrectly on more than 4 trials, the participant was removed from the analysis (n = 16, as reported above).
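The logic behind the headphone check can be illustrated with a small sketch: a tone whose phase is inverted between the two channels cancels when the channels mix acoustically (as with loudspeakers), but not when each channel reaches a separate ear (as with headphones). The tone frequency, duration, and sample rate below are hypothetical values chosen for illustration, not the parameters of the study’s stimuli.

```python
import math


def stereo_tone(freq_hz, duration_s, sample_rate, antiphase=False):
    """Return (left, right) sample lists for a pure tone. With
    antiphase=True the right channel is the inverted left channel."""
    n_samples = round(duration_s * sample_rate)
    left = [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]
    right = [-s for s in left] if antiphase else list(left)
    return left, right


def mono_mix_peak(left, right):
    """Peak amplitude after averaging the channels, a crude stand-in
    for the two channels summing in the air in front of loudspeakers."""
    return max(abs((l + r) / 2) for l, r in zip(left, right))


# Hypothetical parameters: 200 Hz tone, 100 ms, 8 kHz sample rate.
in_phase = stereo_tone(200, 0.1, 8000)
out_of_phase = stereo_tone(200, 0.1, 8000, antiphase=True)
```

The in-phase tone keeps its full amplitude in the mix, while the antiphase tone cancels completely in this idealized case; real rooms give only partial cancellation.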

Then, participants completed the word completion experiment, designed after the paradigm used in prior work (e.g., Ohala & Ohala 1995; Zellou, Pycha, & Cohn 2023). On a given trial, listeners heard one of the speakers produce either a [CV] syllable (truncated from CVC) or a [CṼ] syllable (truncated from CVN) gated into noise (all items were randomly presented once for each listener). Then, listeners selected which one of two minimal pair word choices the syllable was extracted from (either a CVC or CVN, corresponding to the minimal pair option for that syllable).

2.4 Statistical analysis

We removed any trials where participants indicated there was a technical issue (76 trials; e.g., sound not playing, background noise). We coded performance on the retained data (10,606 trials) as binomial (1 = accurate identification of the originally intended lexical item, 0 = incorrect identification) and modeled responses with a mixed effects logistic regression with the lme4 R package (Bates et al. 2014). Fixed effects included Listener Age Group (3 levels: younger, middle, and older listener age group), Structure (2 levels: CVN, CVC), Coarticulation Category of the speaker (2 levels: Extensive Coarticulators; Minimal Coarticulators) and all possible interactions. Random effects included by-Listener, by-Speaker, and by-Item random intercepts. We also included by-Listener random slopes for Structure and by-Speaker random slopes for Structure (the model including Coarticulation Category as by-Listener random slopes did not converge). Categorical factors were sum coded.
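To make the sum-coding statement concrete, the following minimal Python sketch builds sum (deviation) contrasts for a factor. It is an illustration only: the analysis itself was run in R with lme4, and the function name `sum_contrasts` and the level ordering (with the middle group as the level coded -1) are assumptions rather than details confirmed by the study.

```python
def sum_contrasts(levels):
    """Return {level: contrast row} using sum (deviation) coding.

    The last level in `levels` is coded -1 on every column; each other
    level gets +1 on its own column and 0 elsewhere.
    """
    k = len(levels)
    if k < 2:
        raise ValueError("a factor needs at least two levels")
    rows = {}
    for i, level in enumerate(levels):
        if i < k - 1:
            rows[level] = [1 if j == i else 0 for j in range(k - 1)]
        else:
            rows[level] = [-1] * (k - 1)
    return rows


# Assumed level ordering: the middle group is placed last, so a model coded
# this way would report coefficients for the older and younger groups.
age = sum_contrasts(["older", "younger", "middle"])
structure = sum_contrasts(["CVN", "CVC"])

for level, row in age.items():
    print(level, row)
```

Because every contrast column sums to zero, the intercept of a model coded this way corresponds to the unweighted mean across factor levels on the log-odds scale, and the effect of the level without its own coefficient is the negative sum of the listed coefficients.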

3 Results

3.1 Perceptual responses by listener age across vowel and speaker types

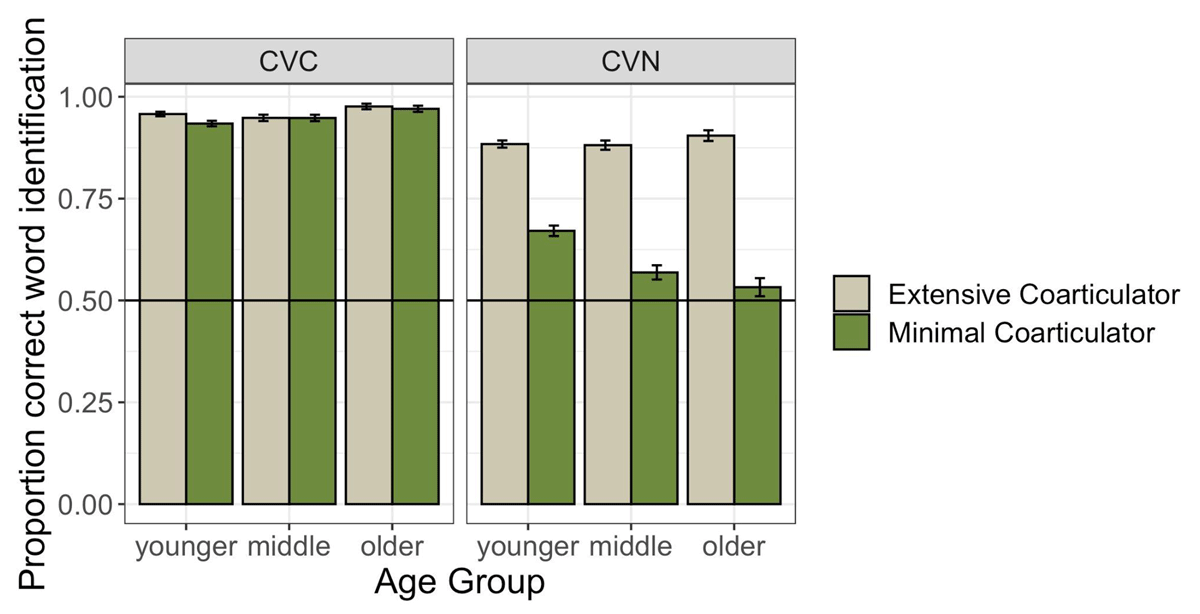

The model output is provided in Table 3 and the summarized raw data is plotted in Figure 2. First, the model showed an effect of Structure: overall, listeners had lower accuracy on average in identifying stimuli originally from CVN words. Additionally, there was an effect of Coarticulation Category: overall, listeners had lower accuracy in identifying the original lexical item from vowels produced by the Minimal Coarticulator speakers. However, these effects were qualified by a two-way interaction between Structure and Coarticulation Category: listeners were worse at lexical identification for the coarticulated vowels from CVNs produced by the Minimal Coarticulators.

Table 3: Aggregated data model output. Asterisks indicate statistically significant effects. No. observations = 10,606, no. listeners = 89, no. items = 10, no. speakers = 6.

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
| --- | --- | --- | --- | --- |
| (Intercept) | 2.8 | 0.36 | 7.83 | <0.001 |
| CVN | –1.04 | 0.36 | –2.92 | <0.01* |
| Listener Age Group (Older) | 0.16 | 0.16 | 1.01 | 0.3 |
| Listener Age Group (Younger) | –0.01 | 0.12 | –0.07 | 0.9 |
| Minimal Coarticulators | –0.66 | 0.21 | –3.22 | <0.01* |
| CVN * Age Group (Older) | –0.22 | 0.13 | –1.67 | 0.09 |
| CVN * Age Group (Younger) | 0.16 | 0.1 | 1.58 | 0.1 |
| CVN * Minimal Coarticulators | –0.61 | 0.21 | –2.9 | <0.01* |
| Age (Older) * Minimal Coarticulators | –0.13 | 0.08 | –1.72 | 0.09 |
| Age (Younger) * Minimal Coarticulators | 0.08 | 0.06 | 1.5 | 0.1 |
| CVN * Age (Older) * Minimal Coartic. | –0.16 | 0.08 | –2.05 | <0.05* |
| CVN * Age (Younger) * Minimal Coartic. | 0.24 | 0.06 | 4.22 | <0.001* |
| **Random effects** | **Variance** | **Std. Dev** | | |
| Listener (Intercept) | 0.55 | 0.74 | | |
| Listener: Structure (CVN) | 0.32 | 0.57 | | |
| Speaker (Intercept) | 0.24 | 0.49 | | |
| Speaker: Structure (CVN) | 0.25 | 0.5 | | |
| Item (Intercept) | 0.77 | 0.88 | | |

Figure 2: Mean proportion of trials where participants identified the originally intended CVC or CVN word based on hearing the excised CV syllable, by listener age group (younger = 18–25 years old “Generation Z”, middle = 26–41 years old “Millennial”, older = 42–58 years old “Generation X”). The colors show differences for the speaker types: those who produced a higher degree of nasal coarticulation (“Extensive Coarticulators”; in light grey) and those who produced a lesser degree of coarticulation (“Minimal Coarticulators”; in dark green). Error bars indicate standard error of the mean.

There were also three-way interactions between Structure, Coarticulation Category, and Listener Age Group5, which can be seen in Figure 2. As seen, performance for the Minimal Coarticulators’ nasalized vowels is higher for younger listeners but lower for older listeners. The releveled model (provided in Supplementary Material, Table S1) showed no effects for the middle group, nor any interactions for the middle group with Structure or Coarticulation Category.

No other effects or interactions were observed. In particular, we do not see evidence of an overall Listener Age Group effect, such that participants’ performance on the task overall does not appear to worsen with age; rather, older listeners display reduced ability to identify the originally intended word from the coarticulated vowels produced by the Minimal Coarticulators specifically, relative to the higher performance on these tokens by the younger listener group.
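To illustrate how the three-way interaction plays out on the probability scale, the sketch below combines the Table 3 fixed effects and applies the inverse logit. It is a rough worked example, not part of the reported analysis: it assumes sum coding with CVN and Minimal Coarticulators coded +1 (and CVC and Extensive Coarticulators coded -1), derives the middle group's terms as the negative sum of the older and younger terms, ignores the random effects, and works from rounded coefficients. The names `COEF`, `age_term`, and `predicted_accuracy` are hypothetical.

```python
import math

# Fixed-effect estimates copied from Table 3 (log-odds scale).
COEF = {
    "intercept": 2.8,
    "cvn": -1.04,
    "minimal": -0.66,
    "cvn:minimal": -0.61,
    "age": {"older": 0.16, "younger": -0.01},
    "cvn:age": {"older": -0.22, "younger": 0.16},
    "age:minimal": {"older": -0.13, "younger": 0.08},
    "cvn:age:minimal": {"older": -0.16, "younger": 0.24},
}


def age_term(table, group):
    """Coefficient for an age group; under the assumed sum coding the
    unlisted middle group is the negative sum of the listed levels."""
    if group == "middle":
        return -sum(table.values())
    return table[group]


def predicted_accuracy(group, structure="CVN", speaker="minimal"):
    """Fixed-effects-only predicted probability of a correct response."""
    s = 1 if structure == "CVN" else -1    # assumed +1/-1 coding
    c = 1 if speaker == "minimal" else -1  # assumed +1/-1 coding
    eta = (
        COEF["intercept"]
        + s * COEF["cvn"]
        + c * COEF["minimal"]
        + s * c * COEF["cvn:minimal"]
        + age_term(COEF["age"], group)
        + s * age_term(COEF["cvn:age"], group)
        + c * age_term(COEF["age:minimal"], group)
        + s * c * age_term(COEF["cvn:age:minimal"], group)
    )
    return 1 / (1 + math.exp(-eta))  # inverse logit


for group in ("younger", "middle", "older"):
    print(group, round(predicted_accuracy(group), 2))
```

Under these assumptions, the implied accuracy for the Minimal Coarticulators' CVN vowels falls from the younger to the middle to the older group, in line with the pattern described above. These are back-of-the-envelope values implied by the rounded table coefficients, not figures reported in the study.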

3.2 Acoustic cue weighting by listener age for coarticulated vowels

Next, we tested whether there is variation in the use of the multiple acoustic cues to vowel nasalization during perception across the listener groups. We fit a mixed effects logistic regression model to the responses for only the nasalized vowels (i.e., vowels from CVNs) with the lme4 R package (Bates et al. 2014). The model included fixed effects of Listener Age (3 levels: older, middle, younger listener age groups), Acoustic Nasality (A1-P0), F1 Bandwidth, and Diphthongization (ROC)6. In addition, interactions between Listener Age (younger, middle, older listener age groups) and each acoustic variable were included in the model7. The model also included by-Listener, by-Item, and by-Speaker random intercepts. We additionally included by-Listener random slopes for each of the acoustic features (Acoustic Nasality, F1 Bandwidth, Diphthongization)8. Acoustic variables were standardized around the mean using the scale() function in R prior to model fitting, while factors were sum coded.
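The standardization step can be sketched in a few lines. The Python function below mirrors what R's scale() does with its default arguments (centering on the mean and dividing by the sample standard deviation); the function name `standardize` and the A1-P0 values are hypothetical and serve only as an illustration.

```python
import statistics


def standardize(values):
    """Center on the mean and divide by the sample SD (n - 1 denominator),
    matching the default behavior of R's scale()."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


# Hypothetical A1-P0 values in dB, for illustration only.
a1_p0 = [2.0, 4.0, 6.0, 8.0]
z = standardize(a1_p0)
print(z)
```

After this transformation each acoustic predictor has a mean of 0 and a standard deviation of 1, so the model coefficients can be read as the change in log-odds per one standard deviation of the cue, which makes the cues comparable despite their different units.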

While collinearity could be a problem when running a model with multiple acoustic features correlated with a phonetic cue, a check for multicollinearity using the check_collinearity() function in the performance R package (Lüdecke et al. 2021) returned a ‘low correlation’ assessment. The variance inflation factor (VIF) values for all the predictors were below 1.5, indicating low collinearity among variables, and thus all were retained in the model.
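For readers unfamiliar with the VIF, the sketch below computes it in its classical form, as the diagonal of the inverse of the predictors' correlation matrix (equivalently, 1 / (1 - R²) from regressing each predictor on the others). This is an assumption-laden illustration: check_collinearity() derives its values from the fitted model, so its output may differ, and the predictor values and helper names (`pearson`, `invert`, `vif`) are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif(columns):
    """VIF_j = j-th diagonal element of the inverse correlation matrix."""
    corr = [[pearson(a, b) for b in columns] for a in columns]
    inv = invert(corr)
    return [inv[j][j] for j in range(len(columns))]


# Hypothetical standardized predictors, for illustration only.
nasality = [-1.2, -0.4, 0.1, 0.6, 0.9, -0.8, 1.3, -0.5]
bandwidth = [0.3, -1.1, 0.8, -0.2, 1.4, 0.5, -0.9, -0.8]
roc = [0.7, 0.2, -1.3, 1.1, -0.4, -0.9, 0.5, 0.1]
print([round(v, 2) for v in vif([nasality, bandwidth, roc])])
```

A VIF of 1 means a predictor is uncorrelated with the others, and larger values mean its coefficient's variance is inflated by overlap with them.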

The summary statistics for the model are provided in Table 4 (the releveled model output showing the middle listener age group is provided in Supplementary Material, Table S2). In terms of overall acoustic cue use, all listeners used larger F1 bandwidth and higher spectral rate of change (ROC) in formants to correctly identify coarticulated vowels as originating from CVN words, but there was no overall effect of A1-P0 (acoustic nasality).

Table 4: Acoustic cue model output (coarticulated vowels only). Asterisks indicate statistically significant effects. No. observations = 5,294, no. listeners = 89, no. items = 5, no. speakers = 6.

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
| --- | --- | --- | --- | --- |
| (Intercept) | 1.84 | 0.54 | 3.4 | <0.001 |
| Listener Age Group (Older) | 0.1 | 0.24 | 0.43 | 0.7 |
| Listener Age Group (Younger) | –0.06 | 0.19 | –0.31 | 0.8 |
| A1-P0 | –0.14 | 0.12 | –1.2 | 0.2 |
| F1 Bandwidth | 2.24 | 0.28 | 8.06 | <0.001* |
| Diphthongization (ROC) | 0.49 | 0.11 | 4.43 | <0.001* |
| Age (Older) * A1-P0 | 0.12 | 0.1 | 1.22 | 0.2 |
| Age (Younger) * A1-P0 | –0.36 | 0.08 | –4.5 | <0.001* |
| Age (Older) * F1 Bandwidth | 0.71 | 0.27 | 2.62 | <0.01* |
| Age (Younger) * F1 Bandwidth | –0.59 | 0.2 | –2.89 | <0.01* |
| Age (Older) * Diphthongization | –0.05 | 0.12 | –0.42 | 0.7 |
| Age (Younger) * Diphthongization | –0.06 | 0.09 | –0.65 | 0.5 |
| **Random effects** | **Variance** | **Std. Dev** | | |
| Listener (Intercept) | 1.3 | 1.2 | | |
| Listener: A1-P0 | 0.05 | 0.2 | | |
| Listener: F1 bandwidth | 0.9 | 0.9 | | |
| Speaker (Intercept) | 0.3 | 0.6 | | |
| Item (Intercept) | 1.02 | 1.01 | | |

Additionally, Listener Age Group interacted with two of the acoustic variables. First, there was an interaction between Listener Age Group and A1-P0 (acoustic nasality). Since acoustic nasality increases with decreasing A1-P0 values, we see that younger adult listeners show a stronger reliance on acoustic nasality in identifying CVN items from coarticulated vowels. The middle listeners also showed an interaction with A1-P0, with weaker reliance on the cue (recall that nasality is indicated with a negative sign) [Coef = 0.24, z = 2.75, p <0.01]. Yet, there was no difference in reliance on acoustic nasality for older adults. There were also interactions between Listener Age and F1 bandwidth, wherein older listeners use F1 bandwidth as a stronger cue to nasalization, while younger listeners use F1 bandwidth less. The releveled model showed that there was no difference for the middle listener age group in use of F1 bandwidth. Finally, there were no interactions observed between Listener Age and ROC suggesting that use of diphthongization as a cue to nasality remains stable across apparent-time.
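The group-specific cue weights implied by these interactions can be worked out by adding each age adjustment to the overall slope. The sketch below does this arithmetic for the Table 4 estimates; it assumes sum coding, so the middle group's adjustment is taken as the negative sum of the older and younger adjustments, and it uses rounded coefficients. The names `MAIN`, `BY_AGE`, and `cue_slope` are hypothetical.

```python
# Fixed-effect estimates copied from Table 4 (log-odds per 1 SD of each cue).
MAIN = {"A1-P0": -0.14, "F1 bandwidth": 2.24, "ROC": 0.49}
BY_AGE = {
    "A1-P0": {"older": 0.12, "younger": -0.36},
    "F1 bandwidth": {"older": 0.71, "younger": -0.59},
    "ROC": {"older": -0.05, "younger": -0.06},
}


def cue_slope(cue, group):
    """Group-specific slope = overall slope + group adjustment.

    Under the assumed sum coding, the adjustment for the unlisted middle
    group is the negative sum of the older and younger adjustments.
    """
    adjustments = BY_AGE[cue]
    if group == "middle":
        adjustment = -sum(adjustments.values())
    else:
        adjustment = adjustments[group]
    return MAIN[cue] + adjustment


for cue in MAIN:
    print(cue, {g: round(cue_slope(cue, g), 2)
                for g in ("younger", "middle", "older")})
```

As a consistency check on the coding assumption, the derived middle-group adjustment for A1-P0 is -(0.12 - 0.36) = 0.24, which matches the coefficient reported for the middle listeners in the releveled model. The resulting A1-P0 slope is most negative for the younger group (stronger use of acoustic nasality), while the F1 bandwidth slope is largest for the older group.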

4 General Discussion

This study examined the perception of anticipatory nasal coarticulation across apparent-time in California English. Listeners made lexical decisions based on hearing excised CV syllables containing either extensive or minimal nasal coarticulation as determined from a separate listening study (Zellou 2022). Identification of vowels from CVC contexts was at ceiling; yet, there was variation in identification performance for vowels from CVN contexts across speakers and listeners. Consistent with findings from prior work (e.g., Beddor et al. 2013; Zellou & Dahan 2019), listeners were overall more accurate at identifying the originally intended word as a CVN when hearing coarticulated vowels containing greater coarticulatory nasalization (defined using multiple acoustic variables) than those containing less coarticulatory nasality. Thus, this is consistent with the notion that coarticulation is perceptually informative and that the time course of coarticulatory dynamics has consequences for listeners' lexical processing (Beddor 2009).

Moreover, we extend prior work in which coarticulatory timing was manipulated (e.g., Beddor et al. 2013) to naturally occurring cross-speaker coarticulatory variation. Many studies report individual variation in coarticulatory patterns across speakers within a speech community (Yu 2019; Zellou 2017; Beddor et al. 2018; Yu & Zellou 2019). Here, we compared two groups of speakers who vary systematically in their realization of coarticulatory nasalization (in terms of several acoustic cues, including A1-P0, F1 bandwidth, and degree of diphthongization). And, when the coda was obscured, we find that listeners are able to identify intended CVN words more accurately when they were spoken by individuals who produce enhanced coarticulatory cues than by speakers who produce fewer of them. Thus, differences in coarticulatory production grammars, and the use of multiple acoustic cues for a coarticulatory feature, vary across speakers and have perceptual consequences for listeners.

Additionally, there were differences across listeners over apparent-time. While all listeners showed an equivalent perceptual benefit for the extensively coarticulated vowels, performance for the minimally coarticulated vowels varied across listener age groups (defined generationally). In particular, the younger listeners showed higher performance for minimally coarticulated vowels, while the older listeners showed reduced performance for these tokens. Thus, perceptual sensitivity to minimal coarticulation was gradient, wherein performance for the minimally coarticulated vowels decreased with increasing listener age. One interpretation is that this reflects an on-going coarticulatorily-based sound change in California English, similar to what has been observed in related work for different coarticulatory patterns in other dialects (e.g., Standard Southern British English in Harrington et al. 2011).

Since coarticulation results in multidimensional acoustic cues in the speech signal, we also explored variation in how listeners use the multiple cues in making nasal coda-lexical judgments. The acoustic-cue-use analysis revealed that all listeners rely on wider F1 bandwidth and greater diphthongization as perceptual cues to correctly identify lexical items from coarticulated vowels. Prior work reports that nasalized vowels across languages indeed contain wider F1 bandwidth (Styler 2017), and greater formant-spectral rate of change (Zellou & Scarborough 2019; Zellou et al. 2020) than oral vowels. In the present study, we also find that listeners are sensitive to these features in making perceptual judgments of nasality. Listeners in the current study use increased F1 bandwidth as a cue that the following segment is a nasal consonant, consistent with observations in prior work (e.g., Arai 2006). While related studies have reported increased diphthongization of nasalized vowels (Brotherton et al. 2019) and college-age adults imitate enhanced diphthongization in pre-nasal vowels (Zellou & Brotherton 2021), no prior work, to our knowledge, reports on listeners’ weighting of diphthongization as a cue to vowel nasality in English. As mentioned in the Introduction, the acoustic effect of nasal coarticulation results in dynamic perturbation of formants. While this effect is realized differently for vowel phonemes, we see that perceived formant dynamism resulting from the acoustic effects of nasal coarticulation is a strong cue for listeners across all age groups that the vowel is nasalized. This can lead to phonologization of vowel diphthongization in the context of nasality, such as in Canadian French (Walker 1984) and Brazilian Portuguese (Wetzels et al. 2020) and other Romance languages (Hajek 1997; Recasens 2014).

Moreover, we also observed differences in the weighting of some acoustic cues across listeners. In particular, younger listeners used acoustic nasality to identify coarticulated vowels as nasal, while middle adult listeners heard vowels as more oral when they had greater nasality. At the same time, we do not find evidence that older age group listeners use A1-P0 as a perceptual cue for nasality. Since our acoustic analysis revealed that the presence of A1-P0 was weaker in the Minimal Coarticulators’ coarticulated vowels, this suggests the reduced performance in identifying Minimal Coarticulators’ CVN vowels for older adults was due to their reduced attention to this particular acoustic cue for nasality. In general, though, the relatively weak status of acoustic nasalization as a cue in the perception of nasalized vowels (and, for some speakers, in their production) is somewhat surprising. The overall strength of the other cues (diphthongization, F1 bandwidth) over acoustic nasalization suggests ongoing cue-reweighting where the secondary features that co-vary with nasalization are becoming the stronger, primary cues while the original feature (acoustic nasalization) is decreasing in strength.

At the same time, the Minimal Coarticulators produce equivalent F1 bandwidth values across oral and nasalized vowels (they even produce slightly higher F1 bandwidth in oral vowels, the opposite of the expected pattern). And, the acoustic-cue analysis showed that older adult listeners display greater reliance on F1 bandwidth as a cue to nasal identification. Adults in our middle listener age group did not show a difference in the way they used F1 bandwidth as a cue, while younger listeners use F1 bandwidth less on average. Thus, since younger listeners rely more heavily on A1-P0 as an acoustic cue, they are able to make more efficient use of the smaller amount of acoustic nasality produced by the Minimal Coarticulators. Yet, since the older listeners rely more on F1 bandwidth, they are less able to identify the oral-nasal distinction in the Minimal Coarticulators’ vowels, since that cue is not present. At the same time, we find that all listener groups use diphthongization as a cue to nasality equally; thus, use of diphthongization appears to be stable over time.

What can explain these findings? One explanation is that these patterns represent change in apparent-time of the acoustic cue weighting associated with nasality in California English. In the present study, we observe this in perception. An alternative explanation is that our findings represent a change in perceptual sensitivity to acoustic cues as individuals age, due to differences in either processing or hearing ability. In this scenario, lifespan change in acoustic sensitivity could lead to a decrease in sensitivity to A1-P0 and an increase in sensitivity to F1 bandwidth. While we find this scenario less plausible given that the changes we observe in perception across age groups are also associated with distinct types of speakers, we cannot fully eliminate this as a possibility. Future work investigating changes in the acoustic cue weighting of these features over the lifespan can inform this possibility.

The results of the present study also provide other important contributions to theoretical accounts of sound change actuation. Ohala (1993; also Ohala & Busà 1995) argues that “misperception” of acoustic effects of coarticulation as intrinsic properties of the vowel is a mechanism for sound change. In the present study, our findings suggest that listeners’ attention to the acoustic effects of coarticulation can lead to more accurate processing of the speech signal during spoken word comprehension (see also Beddor et al. 2013). Thus, consistent with more contemporary theoretical framings of sound change (Beddor 2009), perceptual attention to coarticulatory cues is not “errorful”, but rather is a useful and effective perceptual strategy that listeners employ routinely during spoken word comprehension (Beddor & Krakow 1999; Beddor et al. 2018).

4.1 Evidence for sound change being perception- or production-led?

Prominent models of sound change argue that innovation in either the production or perception of coarticulation triggers phonological change at the community level (Harrington 2012). Thus, a question that has garnered much attention in the literature is whether coarticulatorily-motivated sound change propagation is perception- or production-led (Kleber 2018; Kuang & Cui 2018; Coetzee et al. 2018; Pinget et al. 2020). One possibility is that sound change first propagates through shifts in perceptual attention, then is later realized in speakers’ productions; that is, sound change propagation is perception-led (Harrington 2012). Evidence of this comes from apparent-time studies showing, for instance, that younger speakers perceptually attend to coarticulatory patterns that they do not yet produce (e.g., Kuang & Cui 2018 find that some younger Southern Yi speakers who do not produce the more innovative phonetic shift still attend to it in perception). Another possible scenario is that sound change is propagated when coarticulatory effects are exaggerated by listener-turned-speakers and then get reanalyzed as the primary feature; that is, sound change propagation is production-led (Hyman 1976; Baker et al. 2011). This was observed by Pinget et al. (2020) who found that individuals who were most advanced in obstruent devoicing in Dutch were still perceptually sensitive to the conservative pattern.

Is there a concomitant, parallel change in production in this speech community? If yes, is this a production- or perception-led sound change? Examining any changes in the production of the multiple features associated with nasal coarticulation across time in California English can be informative as to whether California speakers are indeed moving more toward greater use of acoustic nasality as a primary phonetic cue to this contrast, and using F1 bandwidth less, as we would predict based on the perception findings in the current study; indeed, prior studies have shown that acoustic nasality and F1 bandwidth are features modified by speakers across contexts, and are therefore under active speaker control (e.g., Lu & Cooke 2008; Zellou & Scarborough 2015; Zellou & Scarborough 2019). Examination of how the timing of such a change in production is aligned with our perceptual shift can directly speak to how misalignment triggers phonological change over time in a speech community.

At this time, however, we can only speculate. Do we have any evidence for what is happening in production? In the present study, our stimuli came from multiple speakers in the same, younger age group (18–20 years old). So, if there is a change-in-progress in production, it is not strictly age-graded; younger speakers produce both “innovative” and “conservative” patterns in signaling the CVC-CVN contrast on vowels. This within-age category variation is consistent with cross-speaker differences reported by Zellou and Brotherton (2021), who found that some 19-year-old speakers were more advanced in the pre-nasal short-a split in California English, while others were more conservative. If we take the older listeners’ perceptual sensitivity in the present study as reflecting the conservative pattern, one possibility is that the Minimal Coarticulators represent a phonetic innovation in the reweighting of cues for nasalization away from F1 bandwidth. In this scenario, younger listeners show perceptual sensitivity to both the “conservative” pattern (Extensive coarticulation) and the “innovative” pattern (Minimal coarticulation), while older listeners are sensitive only to the “conservative” pattern (Extensive coarticulation). Thus, there are younger speakers who produce distinct coarticulatory features and younger listeners are also sensitive to both the innovative and the conservative patterns. The presence of exclusively innovative and conservative younger speakers, alongside perceptual flexibility for all younger listeners, could be evidence that this change is production-led. In Pinget et al. (2020), more innovative producers in Dutch maintain perceptual flexibility in order to understand more conservative speakers (similar patterns are also observed for Chru for some speakers in Brunelle et al. 2020). In California English, Zellou and Brotherton (2021) found that more advanced speakers in the ban~bad split still imitate (thus, are perceptually sensitive to) other speakers’ conservative nasality patterns. Therefore, the age-related changes in the current study could reflect a production-led shift, but nevertheless still one that is consistent with cue reweighting accounts of coarticulatory-based sound changes (Beddor 2009; Kuang & Cui 2018; Harrington et al. 2016). Future work exploring individual speakers’ production and perception patterns is needed to test whether these shifts might be perception- or production-led.

4.2 Future directions

In addition to exploring the production-perception link, our findings raise several other directions for future work. While other studies use methods that track listener behavior over time in clear and pristine listening conditions (e.g., eye-tracking in silence, Beddor et al. 2013), the current study more closely parallels natural language situations in the real world where part of the speech signal is obscured (by noise, or a cough) or deleted (as in hypoarticulation). Some researchers argue that sound change is more likely to be actuated in hypoarticulated speech contexts; when the source of coarticulation is reduced or obscured, listeners are less likely to attribute coarticulation to its source and instead can perceive it as an intrinsic property of the segment (Lindblom et al. 1995; Harrington et al. 2019). The present study offers an initial indication of how listeners may respond in such scenarios (e.g., background noise, hypoarticulated speech). A future direction is to compare how listeners attend to acoustic cues across different contexts such as with different types of noise and feedback from interlocutors, and also to coarticulatory patterns across systematic types of speech styles (e.g., nasal coarticulation can vary systematically across casual, slow-clear, and fast-clear speech in Cohn & Zellou 2023).

Future work can also compare the perceptual patterns in the present study with those by listeners from other dialect backgrounds or language experiences. For instance, is this a change in apparent-time for multiple dialects of US English, or just California English? Another avenue is to explore how listeners learn and adapt to novel cue weightings in production, given exposure and/or lexically-guided training with speakers such as our Minimal Coarticulators. Do listeners adapt their weighting of acoustic cues in using coarticulation for lexical perception? Furthermore, the type of speech produced (read speech) might have shaped the degree of style shifting. For example, related work has shown that speakers produce more variation in creakiness in some styles (e.g., conversational speech) than others (e.g., read speech) (Becker, Khan, & Zimman 2022), suggesting that we might see greater variation in more naturalistic contexts. We also acknowledge that our acoustic analysis involved taking acoustic measurements at several time points within a vowel and then transforming them into a single value for the statistical analyses. There is much work showing how coarticulation results in dynamic information (Carignan 2021), the time course of which listeners are exquisitely sensitive to (Beddor et al. 2013). Future work examining how cues unfold over time could reveal more insight into the dynamics of cue-use, both within a segment (e.g., in a vowel) and across time (e.g., in a speech community). Finally, the speakers (Minimal and Extensive Coarticulators) were all California English speaking undergraduates (aged 18–20); future work is needed to examine how perception of coarticulation varies with speaker age, as well as the potential top-down effects of age-based expectations in shaping perception of coarticulation (e.g., /u/ fronting in Zellou, Cohn, & Block 2020).

5 Conclusion

Coarticulation results in an information-rich acoustic signal in that it provides multiple, and temporally wide-ranging, acoustic cues that listeners can use to more efficiently comprehend the speaker’s intended message. Our results reveal variation across apparent-time in how listeners attend to the multiple features encoded as coarticulatory information. Broadly, this work contributes to our understanding of sound change, providing support for cue-reweighting accounts of coarticulatorily-motivated phonetic variation.

Notes

- The apparent-time hypothesis posits that, since individuals display robust post-adolescent linguistic stability, age differences observed in a speech community at a single point in time can be taken to reflect historical changes at the community level (Bailey 2002; Sankoff & Blondeau 2007).

- https://osf.io/e5bw2/.

- The one 58 year old was grouped with the Generation X group.

- In addition to selecting which tone was ‘quieter’ (e.g. “1st tone was quieter”, etc.), we gave participants two additional options (“All 3 tones sound the same”, “One sounds louder than the others”).

- We also modeled the data with Listener Age as a continuous variable. The results were similar, with a three-way interaction between Structure, Coarticulation Category, and Continuous Age, wherein older listeners showed even lower performance in identification of coarticulated vowels produced by the Minimal Coarticulators.

- Since the acoustic analysis did not reveal that duration, spectral tilt (A3-P0), F1, or F2 are features targeted for enhancement of nasal coarticulation in either speaker group, they were not included as predictors in the model.

- Including Coarticulator Type would lead to high collinearity between the acoustic variables and thus was not included.

- The retained model structure was: Listener Age Group * (Acoustic Nasality + F1 Bandwidth + ROC) + (1 + Acoustic Nasality + F1 Bandwidth | Listener) + (1 | Speaker) + (1 | Item). By-listener random slopes for ROC resulted in a singularity error.

Data availability

Data, models, and code are available for the current study in the Open Science Framework (OSF) repository for the project: http://doi.org/10.17605/OSF.IO/E5BW2.

Ethics and consent

This study was approved by the UC Davis Institutional Review Board (IRB ID 1864401) and all participants completed informed consent.

Acknowledgements

This material is based upon work supported by the National Science Foundation Grant No. BCS-2140183 awarded to GZ.

Competing interests

The authors report funding from the National Science Foundation. No other conflicts of interest or competing interests are reported.

References

Ali, Latif & Gallagher, Tanya & Goldstein, Jeffrey & Daniloff, Raymond. 1971. Perception of coarticulated nasality. The Journal of the Acoustical Society of America 49(2B). 538–540. DOI: http://doi.org/10.1121/1.1912384

Arai, Takayuki. 2006. Cue parsing between nasality and breathiness in speech perception. Acoustical science and technology 27(5). 298–301. DOI: http://doi.org/10.1250/ast.27.298

Bailey, Guy. 2002. Real and apparent time. In Chambers, Jack K. & Trudgill, Peter & Schilling-Estes, Natalie (eds.), The Handbook of Language Variation and Change, 312–332. Oxford: Blackwell. DOI: http://doi.org/10.1111/b.9781405116923.2003.00018.x

Baker, Adam & Archangeli, Diana & Mielke, Jeff. 2011. Variability in American English s-retraction suggests a solution to the actuation problem. Language Variation and Change 23(3). 347–374. DOI: http://doi.org/10.1017/S0954394511000135

Baker, Adam & Mielke, Jeff & Archangeli, Diana. 2008. More velar than /g/: Consonant coarticulation as a cause of diphthongization. In Proceedings of the 26th West Coast conference on formal linguistics, 60–68. Somerville, Mass.: Cascadilla Proceedings Project.

Barreda, Santiago. 2020. Vowel normalization as perceptual constancy. Language 96(2). 224–254. DOI: http://doi.org/10.1353/lan.2020.0018

Bates, Douglas & Mächler, Martin & Bolker, Ben & Walker, Steven. 2014. lme4: Linear mixed-effects models using Eigen and S4. R package version 1.1-7.

Becker, Kara & Khan, Sameer ud Dowla & Zimman, Lal. 2022. Beyond binary gender: creaky voice, gender, and the variationist enterprise. Language Variation and Change 34(2). 215–238. DOI: http://doi.org/10.1017/S0954394522000138

Beddor, Patrice S. 1993. The perception of nasal vowels. In Nasals, nasalization, and the velum, 171–196. Academic Press. DOI: http://doi.org/10.1016/B978-0-12-360380-7.50011-9

Beddor, Patrice S. 2009. A coarticulatory path to sound change. Language. 785–821. http://www.jstor.org/stable/40492954. DOI: http://doi.org/10.1353/lan.0.0165

Beddor, Patrice S. & Coetzee, Andries W. & Styler, Will & McGowan, Kevin B. & Boland, Julie E. 2018. The time course of individuals’ perception of coarticulatory information is linked to their production: Implications for sound change. Language 94(4). 931–968. DOI: http://doi.org/10.1353/lan.2018.0051

Beddor, Patrice S. & Krakow, Rena A. 1999. Perception of coarticulatory nasalization by speakers of English and Thai: Evidence for partial compensation. The Journal of the Acoustical Society of America 106(5). 2868–2887. DOI: http://doi.org/10.1121/1.428111

Beddor, Patrice S. & Krakow, Rena A. & Goldstein, Louis M. 1986. Perceptual constraints and phonological change: A study of nasal vowel height. Phonology Yearbook 3. 197–217. DOI: http://doi.org/10.1017/S0952675700000646

Beddor, Patrice S. & McGowan, Kevin B. & Boland, Julie E. & Coetzee, Andries W. & Brasher, Anthony. 2013. The time course of perception of coarticulation. The Journal of the Acoustical Society of America 133(4). 2350–2366. DOI: http://doi.org/10.1121/1.4794366

Blevins, Juliette. 2004. Evolutionary phonology: The emergence of sound patterns. Cambridge: Cambridge University Press. https://hdl.handle.net/11858/00-001M-0000-0010-054F-2

Blevins, Juliette & Garrett, Andrew. 1993. The evolution of Ponapeic nasal substitution. Oceanic Linguistics 32(2). 199–236. https://www.jstor.org/stable/3623193. DOI: http://doi.org/10.2307/3623193

Boersma, Paul & Weenink, David. 2018. Praat: Doing phonetics by computer [Computer program]. Version 6.0.40, retrieved January 1, 2018 from http://www.praat.org/.

Bongiovanni, Silvina. 2021. Acoustic investigation of anticipatory vowel nasalization in a Caribbean and a non-Caribbean dialect of Spanish. Linguistics Vanguard 7(1). 20200008. DOI: http://doi.org/10.1515/lingvan-2020-0008

Brotherton, Chloe & Cohn, Michelle & Zellou, Georgia & Barreda, Santiago. 2019. Sub-regional variation in positioning and degree of nasalization of /æ/ allophones in California. In Proceedings of the 19th International Congress of Phonetic Sciences (ICPhS 2019), 2373–2377.

Carignan, Christopher. 2014. An acoustic and articulatory examination of the ‘oral’ in ‘nasal’: The oral articulations of French nasal vowels are not arbitrary. Journal of Phonetics 46. 23–33. DOI: http://doi.org/10.1016/j.wocn.2014.05.001

Carignan, Christopher. 2017. Covariation of nasalization, tongue height, and breathiness in the realization of F1 of Southern French nasal vowels. Journal of Phonetics 63. 87–105. DOI: http://doi.org/10.1016/j.wocn.2017.04.005

Carignan, Christopher. 2021. A practical method of estimating the time-varying degree of vowel nasalization from acoustic features. The Journal of the Acoustical Society of America 149(2). 911–922. DOI: http://doi.org/10.1121/10.0002925

Carignan, Christopher & Coretta, Stefano & Frahm, Jens & Harrington, Jonathan & Hoole, Phil & Joseph, Arun & Kunay, Esther & Voit, Dirk. 2021. Planting the seed for sound change: Evidence from real-time MRI of velum kinematics in German. Language 97(2). 333–364. DOI: http://doi.org/10.1353/lan.2021.0020

Carignan, Christopher & Zellou, Georgia. 2023. Sociophonetics and vowel nasality. In The Routledge Handbook of Sociophonetics, 237–259. Routledge. DOI: http://doi.org/10.4324/9781003034636-12

Chen, Marilyn Y. 1997. Acoustic correlates of English and French nasalized vowels. The Journal of the Acoustical Society of America 102(4). 2360–2370. DOI: http://doi.org/10.1121/1.419620

Chen, Matthew Y. & Wang, William S-Y. 1975. Sound change: actuation and implementation. Language 51(2). 255–281. https://www.jstor.org/stable/412854. DOI: http://doi.org/10.2307/412854

Clumeck, Harold. 1976. Patterns of soft palate movements in six languages. Journal of Phonetics 4(4). 337–351. DOI: http://doi.org/10.1016/S0095-4470(19)31260-4

Coetzee, Andries W. & Beddor, Patrice S. & Shedden, Kerby & Styler, Will & Wissing, Daan. 2018. Plosive voicing in Afrikaans: Differential cue weighting and tonogenesis. Journal of Phonetics 66. 185–216. DOI: http://doi.org/10.1016/j.wocn.2017.09.009

Coetzee, Andries W. & Beddor, Patrice S. & Styler, Will & Tobin, Stephen & Bekker, Ian & Wissing, Daan. 2022. Producing and perceiving socially structured coarticulation: Coarticulatory nasalization in Afrikaans. Laboratory Phonology 13(1). DOI: http://doi.org/10.16995/labphon.6450

Cohn, Abigail C. 1990. Phonetic and phonological rules of nasalization. Ph.D. Dissertation. University of California, Los Angeles.

Cohn, Michelle & Zellou, Georgia. 2023. Selective tuning of nasal coarticulation and hyperarticulation across slow-clear, casual, and fast-clear speech styles. JASA Express Letters 3(12). DOI: http://doi.org/10.1121/10.0023841

Dahan, Delphine & Magnuson, James S. & Tanenhaus, Michael K. & Hogan, Ellen M. 2001. Subcategorical mismatches and the time course of lexical access: Evidence for lexical competition. Language and Cognitive Processes 16(5–6). 507–534. DOI: http://doi.org/10.1080/01690960143000074

Delvaux, Véronique & Demolin, Didier & Harmegnies, Bernard & Soquet, Alain. 2008. The aerodynamics of nasalization in French. Journal of Phonetics 36(4). 578–606. DOI: http://doi.org/10.1016/j.wocn.2008.02.002

Dimock, Michael. 2019. Defining generations: Where Millennials end and Generation Z begins. Pew Research Center 17(1). 1–7.

Eckert, Penelope. 2008. Where do ethnolects stop? International Journal of Bilingualism 12(1–2). 25–42. DOI: http://doi.org/10.1177/13670069080120010301

Farnetani, Edda. 1990. VCV lingual coarticulation and its spatiotemporal domain. In Speech production and speech modelling, 93–130. Dordrecht: Springer Netherlands. DOI: http://doi.org/10.1007/978-94-009-2037-8_5

Fowler, Carol A. 1984. Segmentation of coarticulated speech in perception. Perception & Psychophysics 36(4). 359–368. DOI: http://doi.org/10.3758/BF03202790

Fowler, Carol A. 2005. Parsing coarticulated speech in perception: Effects of coarticulation resistance. Journal of Phonetics 33(2). 199–213. DOI: http://doi.org/10.1016/j.wocn.2004.10.003

Fox, Robert A. & Jacewicz, Ewa. 2009. Cross-dialectal variation in formant dynamics of American English vowels. The Journal of the Acoustical Society of America 126(5). 2603–2618. DOI: http://doi.org/10.1121/1.3212921

Garellek, Marc. 2014. Voice quality strengthening and glottalization. Journal of Phonetics 45. 106–113. DOI: http://doi.org/10.1016/j.wocn.2014.04.001

Garellek, Marc & Ritchart, Amanda & Kuang, Jianjing. 2016. Breathy voice during nasality: A cross-linguistic study. Journal of Phonetics 59. 110–121. DOI: http://doi.org/10.1016/j.wocn.2016.09.001

Hajek, John. 1997. Universals of sound change in nasalization, Vol. 31. Oxford: Blackwell.

Hall-Lew, Lauren & Cardoso, Amanda & Kemenchedjieva, Yova & Wilson, Kieran & Purse, Ruaridh & Saigusa, Julie. 2015. San Francisco English and the California Vowel Shift. In Proceedings of the 18th International Congress of Phonetic Sciences.

Harrington, Jonathan. 2012. The relationship between synchronic variation and diachronic change. Handbook of Laboratory Phonology, 321–332.

Harrington, Jonathan & Kleber, Felicitas & Reubold, Ulrich. 2011. The contributions of the lips and the tongue to the diachronic fronting of high back vowels in Standard Southern British English. Journal of the International Phonetic Association 41(2). 137–156. DOI: http://doi.org/10.1017/S0025100310000265

Harrington, Jonathan & Kleber, Felicitas & Reubold, Ulrich & Schiel, Florian & Stevens, Mary. 2019. The phonetic basis of the origin and spread of sound change. In The Routledge Handbook of Phonetics, 401–426. Routledge. DOI: http://doi.org/10.4324/9780429056253-15

Harrington, Jonathan & Kleber, Felicitas & Stevens, Mary. 2016. The relationship between the (mis)-parsing of coarticulation in perception and sound change: Evidence from dissimilation and language acquisition. Recent Advances in Nonlinear Speech Processing, 15–34. DOI: http://doi.org/10.1007/978-3-319-28109-4_3

Hyman, Larry. 1976. Phonologization. In Larry Hyman & Alphonse Juilland (eds.), Linguistic Studies Presented to Joseph H. Greenberg, 407–418. Saratoga: Anma Libri.

Imatomi, Setsuko. 2005. Effects of breathy voice source on ratings of hypernasality. The Cleft Palate – Craniofacial Journal 42(6). 641–648. DOI: http://doi.org/10.1597/03-146.1

Kawasaki, Haruko. 1986. Phonetic explanation for phonological universals: The case of distinctive vowel nasalization. In Ohala, John J. & Jaeger, Jeri J. (eds.), Experimental Phonology, 81–103. Cambridge, MA: MIT Press.

Kleber, Felicitas. 2018. VOT or quantity: What matters more for the voicing contrast in German regional varieties? Results from apparent-time analyses. Journal of Phonetics 71. 468–486. DOI: http://doi.org/10.1016/j.wocn.2018.10.004

Kleber, Felicitas & Harrington, Jonathan & Reubold, Ulrich. 2012. The relationship between the perception and production of coarticulation during a sound change in progress. Language and Speech 55(3). 383–405. DOI: http://doi.org/10.1177/0023830911422194

Krakow, Rena A. & Beddor, Patrice S. & Goldstein, Louis M. 1988. Coarticulatory influences on the perceived height of nasal vowels. The Journal of the Acoustical Society of America 83(3). 1146–1158. DOI: http://doi.org/10.1121/1.396059

Kuang, Jianjing & Cui, Aletheia. 2018. Relative cue weighting in production and perception of an ongoing sound change in Southern Yi. Journal of Phonetics 71. 194–214. DOI: http://doi.org/10.1016/j.wocn.2018.09.002

Lahiri, Aditi & Marslen-Wilson, William. 1991. The mental representation of lexical form: A phonological approach to the recognition lexicon. Cognition 38(3). 245–294. DOI: http://doi.org/10.1016/0010-0277(91)90008-R

Lindblom, Björn & Guion, Susan & Hura, Susan & Moon, Seung-Jae & Willerman, Raquel. 1995. Is sound change adaptive? Rivista di Linguistica 7. 5–36.

Lu, Youyi & Cooke, Martin. 2008. Speech production modifications produced by competing talkers, babble, and stationary noise. The Journal of the Acoustical Society of America 124(5). 3261–3275. DOI: http://doi.org/10.1121/1.2990705

Lüdecke, Daniel & Ben-Shachar, Mattan S. & Patil, Indrajeet & Waggoner, Phillip & Makowski, Dominique. 2021. performance: An R package for assessment, comparison and testing of statistical models. Journal of Open Source Software 6(60). DOI: http://doi.org/10.21105/joss.03139

Mann, Virginia A. 1980. Influence of preceding liquid on stop-consonant perception. Perception & Psychophysics 28(5). 407–412. DOI: http://doi.org/10.3758/BF03204884

Mann, Virginia A. & Repp, Bruno H. 1980. Influence of vocalic context on perception of the [ʃ]-[s] distinction. Perception & Psychophysics 28(3). 213–228. DOI: http://doi.org/10.3758/BF03204377

Marslen-Wilson, William & Warren, Paul. 1994. Levels of perceptual representation and process in lexical access: words, phonemes, and features. Psychological Review 101(4). 653–675. DOI: http://doi.org/10.1037/0033-295X.101.4.653

Martin, James G. & Bunnell, H. Timothy. 1981. Perception of anticipatory coarticulation effects. The Journal of the Acoustical Society of America 69(2). 559–567. DOI: http://doi.org/10.1121/1.385484

Matisoff, James A. 1975. Rhinoglottophilia: the mysterious connection between nasality and glottality. In Nasálfest: Papers from a symposium on nasals and nasalization, 265–287. Stanford: Language Universals Project, Department of Linguistics, Stanford University.

Mielke, Jeff & Carignan, Christopher & Thomas, Eric R. 2017. The articulatory dynamics of pre-velar and pre-nasal /æ/-raising in English: An ultrasound study. The Journal of the Acoustical Society of America 142(1). 332–349. DOI: http://doi.org/10.1121/1.4991348

Ohala, John J. 1974. Experimental historical phonology. In Anderson, John M. & Jones, Charles (eds.), Historical Linguistics, Vol. 2, 353–389. Amsterdam: North-Holland.

Ohala, John J. 1975. Phonetic explanations for nasal sound patterns. In Ferguson, Charles A. & Hyman, Larry M. & Ohala, John J. (eds.), Nasálfest: Papers from a Symposium on Nasals and Nasalization, 289–316. Palo Alto, CA: Stanford University Language Universals Project.

Ohala, John J. 1993. Coarticulation and phonology. Language and Speech 36(2–3). 155–170. DOI: http://doi.org/10.1177/002383099303600303

Ohala, John J. & Busà, M. Grazia. 1995. Nasal loss before voiceless fricatives: a perceptually-based sound change. Rivista di Linguistica 7. 125–144.

Ohala, John J. & Ohala, Manjari. 1995. Speech perception and lexical representation: The role of vowel nasalization in Hindi and English. Phonology and phonetic evidence. Papers in Laboratory Phonology IV. 41–60. DOI: http://doi.org/10.1017/CBO9780511554315.004

Parkinson, Stephen. 1983. Portuguese nasal vowels as phonological diphthongs. Lingua 61(2–3). 157–177. DOI: http://doi.org/10.1016/0024-3841(83)90031-1

Pinget, Anne-France & Kager, René & Van de Velde, Hans. 2020. Linking variation in perception and production in sound change: Evidence from Dutch obstruent devoicing. Language and Speech 63(3). 660–685. DOI: http://doi.org/10.1177/0023830919880206

Podesva, Robert J. & D’Onofrio, Annette & Van Hofwegen, Janneke & Kim, Seung Kyung. 2015. Country ideology and the California vowel shift. Language Variation and Change 27(2). 157–186. DOI: http://doi.org/10.1017/S095439451500006X

Recasens, Daniel. 2014. Coarticulation and Sound Change in Romance. Amsterdam: John Benjamins. DOI: http://doi.org/10.1075/cilt.329

Sankoff, Gillian & Blondeau, Hélène. 2007. Language change across the lifespan: /r/ in Montreal French. Language 83(3). 560–588. DOI: http://doi.org/10.1353/lan.2007.0106

Scarborough, Rebecca & Zellou, Georgia. 2013. Clarity in communication: “Clear” speech authenticity and lexical neighborhood density effects in speech production and perception. The Journal of the Acoustical Society of America 134(5). 3793–3807. DOI: http://doi.org/10.1121/1.4824120

Scarborough, Rebecca & Zellou, Georgia & Mirzayan, Armik & Rood, David S. 2015. Phonetic and phonological patterns of nasality in Lakota vowels. Journal of the International Phonetic Association 45(3). 289–309. DOI: http://doi.org/10.1017/S0025100315000171

Solé, Maria-Josep. 1992. Phonetic and phonological processes: The case of nasalization. Language and Speech 35(1–2). 29–43. DOI: http://doi.org/10.1177/002383099203500204

Solé, Maria-Josep. 2007. Controlled and mechanical properties in speech. Experimental Approaches to Phonology. 302–321. DOI: http://doi.org/10.1093/oso/9780199296675.003.0018

Stoakes, Hywel M. & Fletcher, Janet M. & Butcher, Andrew R. 2020. Nasal coarticulation in Bininj Kunwok: An aerodynamic analysis. Journal of the International Phonetic Association 50(3). 305–332. DOI: http://doi.org/10.1017/S0025100318000282

Styler, Will. 2017. On the acoustical features of vowel nasality in English and French. The Journal of the Acoustical Society of America 142(4). 2469–2482. DOI: http://doi.org/10.1121/1.5008854

Tamminga, Meredith & Zellou, Georgia. 2015. Cross-dialectal differences in nasal coarticulation in American English. In ICPhS.

Ushijima, T. & Hirose, H. 1974. Electromyographic study of the velum during speech. Journal of Phonetics 2(4). 315–326. DOI: http://doi.org/10.1016/S0095-4470(19)31301-4

Walker, Douglas C. 1984. The pronunciation of Canadian French, 51–65. Ottawa: University of Ottawa Press.

Wetzels, W. Leo & Menuzzi, Sergio & Costa, João. 2020. The Handbook of Portuguese Linguistics. Hoboken: John Wiley & Sons.

Woods, Kevin J. & Siegel, Max H. & Traer, James & McDermott, Josh H. 2017. Headphone screening to facilitate web-based auditory experiments. Attention, Perception, & Psychophysics 79. 2064–2072. DOI: http://doi.org/10.3758/s13414-017-1361-2

Yu, Alan C. L. 2019. On the nature of the perception-production link: Individual variability in English sibilant-vowel coarticulation. Laboratory Phonology 10(1). DOI: http://doi.org/10.5334/labphon.97

Yu, Alan C. L. 2023. The Actuation Problem. Annual Review of Linguistics 9. 215–231. DOI: http://doi.org/10.1146/annurev-linguistics-031120-101336

Yu, Alan C. L. & Zellou, Georgia. 2019. Individual differences in language processing: Phonology. Annual Review of Linguistics 5. 131–150. DOI: http://doi.org/10.1146/annurev-linguistics-011516-033815