1 Introduction

This paper is concerned with the meanings of the English words cause and because. Our proposal is that three properties of the semantics of these words – properties that initially appear to have little to do with one another – are in fact the result of a single mechanism: an exhaustification operator in their lexical semantics.

In this paper we consider two kinds of sentences in English: sentences of the form c cause e where c is a noun and e a to-infinitive, and sentences of the form q because p, where p and q are clauses. For example:

- (1)

- a.

- The light turned on because Alice flicked the switch.

- b.

- Alice flicking the switch caused the light to turn on.

In what follows we will assume that cause and because have the same semantics, putting aside some differences in meaning between them.12 Let us introduce the three properties we aim to account for.

1.1 Three properties of cause and because

1.1.1 Property 1: The comparative nature of cause and because

A popular idea in the literature on causation is that the meaning of causal terms involves comparing what would happen in the presence of the cause versus what would happen in the absence of the cause. Here are some examples of analyses with such a comparative element.

Lewis (1973a: 536) proposes that an event e causally depends on an event c just in case the following two counterfactuals are true: if c had occurred, e would have occurred, and if c had not occurred, e would not have occurred.

Wright (1985; 2011) proposes the NESS (Necessary Element of a Sufficient Set) test for causation, according to which something is a cause just in case there is a set of conditions that are jointly sufficient for the effect, but are not sufficient when the cause is removed from the set.

Mackie’s INUS condition states that a cause is “an insufficient but non-redundant part of a condition which is itself unnecessary but sufficient for the result” (Mackie 1974: 64).

More recently, Beckers (2016) uses a notion of production, arguing that the semantics of is a cause of involves comparing the presence and absence of the cause with respect to producing the effect. According to Beckers, C is a cause of E just in case, informally put, C produced E, and if C had not occurred, the absence of C would not have also produced E.

While these analyses differ in their details, they have the same overall shape. They consist of two conditions: one that considers what would happen in the presence of the cause (what we will call a ‘positive condition’) and one that considers what would happen in the absence of the cause (what we will call a ‘negative condition’).

1.1.2 Property 2: Asymmetry in strength between positive and negative conditions

The second property of the semantics of cause and because we consider is an asymmetry in strength between the positive condition and the negative condition. In section 5 we give evidence that the positive condition is strong while the negative condition is weak, in the following sense. The positive condition requires that in all scenarios where the cause occurs the relevant condition is met, while the negative condition only requires that in some scenario where the cause does not occur the relevant condition is met. What exactly this ‘relevant condition’ is depends on the analysis in question: in the NESS and INUS conditions it is the effect not occurring, while according to Beckers it is that the absence of the cause does not produce the effect.

1.1.3 Property 3: The positive and negative conditions have the same background

Almost all analyses of causal claims appeal to some set of background facts (Suppes 1970; Cartwright 1979; Skyrms 1980; Mayrhofer et al. 2008). These facts are in some sense ‘taken for granted’ when evaluating a causal claim. To illustrate, consider (1), repeated below.

- (1)

- a.

- The light turned on because Alice flicked the switch.

- b.

- Alice flicking the switch caused the light to turn on.

For these sentences to be true, one requires more than just the flicking of the switch. There must be power in the building, a wire connecting the switch and light, and so on. These background facts are involved in checking the positive condition. For example, in the NESS and INUS tests above one checks whether the presence of the cause is sufficient for the effect given some background facts: the ‘set’ in the words of the NESS test; the ‘condition’ in the words of the INUS test.

There is also a background involved when evaluating the negative condition: the facts from the actual world that are held fixed when evaluating what would happen if the cause had not occurred. For example, when interpreting (1) we consider relevant scenarios where Alice had not flicked the switch. In these scenarios the ‘background’ or ‘circumstances’ are held fixed; for instance, given that there is actually power in the building and a wire connecting the switch and light, one does not consider scenarios where there is no power in the building or no wire connecting the switch to the light.

In section 6 we see evidence that the positive condition is interpreted with respect to a background, and the negative condition is also interpreted with respect to a background. This raises the question whether there is any systematic relationship between the two. Section 6.2 presents evidence that these two backgrounds must be the same. One may stipulate that the lexical semantics of cause and because require them to be the same, as for example the NESS and INUS tests do, where the ‘set’ or ‘condition’, minus the cause, is the same for both the positive and negative conditions. However, one may also wonder whether there is a more systematic principle accounting for the fact that the positive and negative backgrounds must be the same.

1.2 The apparent dissimilarity of properties 1, 2 and 3

These, then, are the three properties of cause and because we seek to account for. At first glance they are quite different. Granted, Property 1 is necessary for Properties 2 and 3, since Properties 2 and 3 are formulated in terms of the positive and negative conditions that are guaranteed by Property 1. But beyond this, the three properties appear to have nothing to do with one another. Property 2 is about the logical strength of the positive and negative conditions, while Property 3 is about their backgrounds. They appear to be about different aspects of the meaning of cause and because. For instance, one could imagine a lexical entry of cause and because that involves comparing the presence and absence of the cause (i.e. has Property 1), but does not require the positive and negative conditions to be evaluated with respect to the same set of background facts (lacks Property 3).

Similarly, one can imagine a lexical entry with Property 1 but without Property 2: such an entry would compare the presence and absence of the cause along some dimension (such as sufficiency for the effect) but would require both conditions to be strong, or both conditions to be weak. Indeed, we have already seen an analysis of this kind: recall that Lewis (1973a) proposed that an event e causally depends on an event c just in case if c had occurred, e would have occurred, and if c had not occurred, e would not have occurred. In the same year that Lewis published his paper on causation (Lewis 1973a), he also published Counterfactuals (Lewis 1973b) in which he proposed that would is a necessity modal, requiring that in all the most similar worlds to the actual world where the antecedent holds, the consequent also holds. In the terminology above, Lewis’s analysis of causal dependence has both a strong positive condition and a strong negative condition. And the analysis does not say anything about Property 3. So Lewis’s analysis has Property 1 but lacks Property 2 and may or may not have Property 3. Lewis’ analysis of causal dependence does not violate any general theoretical principles. So it would be all the more surprising if the semantics of cause and because derived Properties 1, 2 and 3 from a single source.

This, however, is what we propose. We show that a single operator in the semantics of cause and because can account for all three properties.

1.3 Preliminaries: overview of the semantics of modality

Before presenting our proposed semantics of cause and because, let us briefly introduce the framework in which the semantics will be expressed. We let □f,g(p)(q) be a universal counterfactual modal with modal base f, ordering source g, restrictor p and nuclear scope q. The use of a modal base and ordering source comes from Kratzer’s analysis of modality. We can roughly paraphrase □f,g(p)(q) as given the circumstances, the truth of p guaranteed the truth of q, or, given the circumstances, p is sufficient for q (for a formal analysis of the notion of modality involved in causal claims, see McHugh 2022).3

The view that modals have both a restrictor and a nuclear scope – akin to quantificational determiners such as every – comes from Lewis (1975) and what Partee (1991) calls the Lewis-Kratzer-Heim view of if-clauses, where the if-clause contributes the modal’s restriction and the consequent the modal’s scope. Here we are not dealing with if-clauses but with because-clauses and a periphrastic causative (the verb cause). Although adjuncts (if- and because-clauses) and periphrastic causatives differ in their syntax, we propose they are semantically uniform in the following sense: the right argument of because and the left argument of cause (the ‘cause’) restrict a modal, while the left argument of because and the right argument of cause (the ‘effect’) contribute the modal’s scope.

Let us briefly clarify what kind of modality is involved in causal claims. The modality is circumstantial (often called ‘natural’, ‘physical’ or ‘nomic’, see e.g. Fine 2002; Kratzer 2012: chapter 2), rather than logical, mathematical, metaphysical, epistemic or normative modality. The examples in (2) illustrate circumstantial modality. The must in (2a) expresses nomic necessity (uttered in a situation where the ball is in mid-air). And the can in (2b) expresses nomic possibility.

- (2)

- a.

- The ball must come down.

- b.

- Hydrangeas can grow here. (Kratzer 1991: ex. (21a))

Circumstantial modality takes into account the circumstances holding in a given context. In the case of (2a) the circumstances include the fact that Alice is on earth, earth’s gravity, that there are no birds circling that could decide to snatch the ball mid-air, and so on.

2 Cause, because, and exhaustification

We will consider two semantics of cause and because, what we call the ‘simplified’ and the ‘full’ semantics. The simplified semantics is a useful first approximation of the meaning of cause and because, but faces well-known problems from overdetermination cases, discussed below. The full semantics overcomes these problems, and is our proposal for the meaning of cause and because. We begin with the simplified semantics.

2.1 The simplified semantics

On the simplified semantics, the positive condition states that the cause is sufficient for the effect given the background. We formalize this sufficiency condition as □f,g(p)(q).

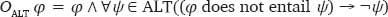

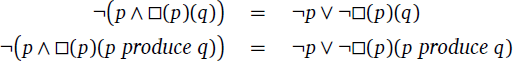

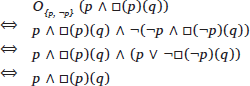

Our key observation is that Properties 1, 2 and 3 above all follow from the presence of a single operator: exhaustification with respect to the cause’s polar alternatives, {p, ¬p}. Exhaustification is defined as follows, and is akin to a silent only.4

When we plug in the sufficiency condition □f,g(p)(q) for the prejacent and replace the cause p with its polar alternative ¬p, exhaustification checks whether the prejacent entails the result, □f,g(¬p)(q): if not, exhaustification negates it.5
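One way to make this explicit, offered as an assumption rather than as the exact definition intended above, is a standard formulation on which exhaustification asserts its prejacent and negates every alternative that the prejacent does not entail. The symbols \varphi, \psi and A are introduced here only for illustration.

```latex
\begin{align*}
O_{A}(\varphi) &:= \varphi \wedge \bigwedge \{\, \neg\psi : \psi \in A,\ \varphi \not\models \psi \,\} \\
\varphi &= \Box_{f,g}(p)(q) \\
A &= \{\, \Box_{f,g}(p)(q),\ \Box_{f,g}(\neg p)(q) \,\}
\end{align*}
```

Here the alternative set A is obtained by substituting each member of the polar set {p, ¬p} for the restrictor of the prejacent, which is how the text describes the replacement of the cause by its polar alternative.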

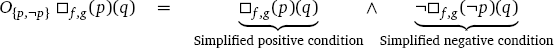

As it happens, □f,g(p)(q) does not entail □f,g(¬p)(q), since it is possible for p to guarantee q given the circumstances while ¬p does not also guarantee q given the circumstances. Thus exhaustification of the sufficiency condition □f,g(p)(q) has the following effect.6

O{p,¬p} □f,g(p)(q) = □f,g(p)(q) ∧ ¬□f,g(¬p)(q)

This condition states, loosely put, that given the circumstances, the cause is sufficient for the effect but the absence of the cause is not sufficient for the effect. This is essentially the NESS test above, formalized in terms of circumstantial modality.

Our simplified semantics for because and cause, given in (3), states that the cause occurred and that the exhaustified sufficiency condition above holds. For brevity we only state the entries for because, though they should be understood as also applying to cause with the left and right arguments swapped (i.e. q because p is equivalent to p cause q).78

- (3)

- Semantics of because (simplified).

- ⟦because⟧ = λp⟨s,t⟩ λq⟨s,t⟩: p ∧ O{p,¬ p} □f,g(p)(q).
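The entry in (3) can be illustrated with a small finite-model sketch. It rests on simplifying assumptions that are not part of the proposal: the modal base is modelled as a finite list of circumstantially accessible worlds, the ordering source is ignored, and the restricted domain is taken to be nonempty. The names `box`, `exhaustify_polar` and `because_simplified` are hypothetical helpers chosen for this illustration.

```python
from typing import Callable, Dict, List

World = Dict[str, bool]
Prop = Callable[[World], bool]


def box(domain: List[World], p: Prop, q: Prop) -> bool:
    """Toy version of □(p)(q): every p-world in the domain is a q-world.

    Assumption: `domain` stands in for the modal base; no ordering source.
    """
    return all(q(w) for w in domain if p(w))


def neg(p: Prop) -> Prop:
    """Polar alternative of p."""
    return lambda w: not p(w)


def exhaustify_polar(domain: List[World], p: Prop, q: Prop) -> bool:
    """O_{p,¬p} □(p)(q): the prejacent holds and the alternative □(¬p)(q) is negated.

    The alternative is always negated here because, as the text notes,
    □(p)(q) does not entail □(¬p)(q).
    """
    return box(domain, p, q) and not box(domain, neg(p), q)


def because_simplified(actual: World, domain: List[World], p: Prop, q: Prop) -> bool:
    """'q because p' on the simplified semantics: p ∧ O_{p,¬p} □(p)(q)."""
    return bool(p(actual)) and exhaustify_polar(domain, p, q)


# Example (1): power and wiring are held fixed, so the light is on
# exactly when the switch is flicked.
domain = [
    {"flick": True, "light": True},
    {"flick": False, "light": False},
]
actual = domain[0]
flick: Prop = lambda w: w["flick"]
light: Prop = lambda w: w["light"]

print(because_simplified(actual, domain, flick, light))  # True
```

Because the same `domain` is passed to both calls of `box`, the sketch also shows in miniature how the positive and negative conditions end up sharing a background.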

2.2 Properties 1, 2, and 3 via exhaustification

Properties 1, 2 and 3 fall out immediately from exhaustification.

The comparative character of because (Property 1) results from the comparative nature of exhaustification, which compares the prejacent with its alternatives. We stipulate that in the semantics of because, the alternatives are the cause’s polar alternatives.

The asymmetry in strength between the positive and negative conditions (Property 2) results from the fact that exhaustification negates alternatives. This parallels the behaviour of only when it composes with a universal modal, such as guaranteed:

- (4)

- a.

- You are guaranteed to get a seat only if you book in advance.

- (i) ⇒ You are not guaranteed to get a seat if you do not book in advance.

- (ii) ⇏ You are guaranteed to not get a seat if you do not book in advance.

- b.

- The effect is guaranteed to occur only if the cause occurs.

- (i) ⇒ The effect is not guaranteed to occur if the cause does not occur.

- (ii) ⇏ The effect is guaranteed to not occur if the cause does not occur.

In (4) we assume that broad focus on the if-clause triggers its polar alternative.9 Exhaustification, like only, negates the prejacent’s excludable alternatives. Given the duality between universal and existential quantification, the negation contributed by exhaustification turns a necessity modal into a possibility modal, generating the observed asymmetry in strength: O{p,¬p}□f,g(p)(q) entails ¬□f,g(¬p)(q) rather than □f,g(¬p)(¬q).
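The duality step can be spelled out as follows. The symbol \Diamond_{f,g} for the existential dual of \Box_{f,g} is a notational assumption introduced only for this illustration, and the second line relies on the restricted domain being nonempty.

```latex
\begin{align*}
\neg\Box_{f,g}(\neg p)(q) &\iff \Diamond_{f,g}(\neg p)(\neg q) \\
\Box_{f,g}(\neg p)(\neg q) &\Rightarrow \Diamond_{f,g}(\neg p)(\neg q) \\
\Diamond_{f,g}(\neg p)(\neg q) &\nRightarrow \Box_{f,g}(\neg p)(\neg q)
\end{align*}
```

So the negative condition delivered by exhaustification is an existential claim about scenarios without the cause, while the positive condition remains universal.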

Finally, the fact that the positive and negative conditions have the same background (Property 3) falls out from the fact that exhaustification simply copies the modal’s parameters – the modal base (f) and ordering source (g) – without altering them. If, as we argue in section 6.1 below, the background involved in the interpretation of cause and because is the modal base, then exhaustification ensures that this background is the same in both the positive and negative conditions.

2.3 The full semantics

The simplified semantics faces well-known problems from cases of overdetermination, where a causal claim is intuitively true even though the effect would still have occurred without the cause (we discuss overdetermination cases in section 4.1; for a survey of overdetermination cases see Hall & Paul 2003). For this reason we also consider a semantics designed to work in cases with and without overdetermination alike. For the full semantics, we borrow the notion of production from Beckers (2016) and Beckers & Vennekens (2018), inspired by Hall (2004). Beckers aims to analyze the truth conditions of is a cause of within the framework of structural causal models.

Our goal in this essay is not to justify a solution to the problems raised by overdetermination, and so we will not attempt to justify a particular formalization of production.10 The idea, informally, is that proposition p produced proposition q just in case there is a chain of events from a p-event to a q-event such that each event on the chain actually occurred, and each event on the chain counterfactually depends on a previous event on the chain. What is most important to observe for present purposes is the overall shape of Beckers’ analysis, which consists of the following two conditions, here stated informally (for a formalization see Beckers & Vennekens 2018).

- (5)

- p is a cause of q just in case

- a.

- p produced q, and

- b.

- If p had not occurred, ¬p would not have produced q.

Beckers’ key innovation is that (5) does not require that if the cause had not occurred, the effect would not have occurred; rather, it requires that if the cause had not occurred, the absence of the cause would not have produced the effect.11

At first glance, it is quite surprising that the semantics of cause and because would involve considering whether the absence of the cause would have itself produced the effect. Where could such a complex condition possibly come from? Notice that a formula of exactly this shape is expected if the semantics of cause and because involves considering whether the cause produced the effect, and also includes a mechanism that involves replacing the cause with its negation. Exhaustification with respect to the cause’s polar alternatives is just such a mechanism.

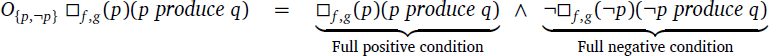

However, clearly, exhaustifying (5a) does not result in (5b). The two conditions have a fundamentally different shape. Nonetheless, in sections 3 and 4 we see evidence that (5a) is not quite correct. The semantics of cause and because does not only require that the cause produce the effect, but also requires that the truth of the cause be sufficient for the cause to produce the effect. Formally, the condition is □f,g(p)(p produce q). When we exhaustify (5a), we do not get (5b), but when we exhaustify □f,g(p)(p produce q), remarkably, we get exactly Beckers’ condition in (5b).

If we replace q in the simplified semantics with p produce q we get the following semantic entry, which we call the ‘full’ semantics.

- (6)

- Semantics of because (full).

- ⟦because⟧ = λp⟨s,t⟩ λq⟨s,t⟩ : p ∧ O{p,¬p} □f,g(p)(p produce q).

On the full semantics, Properties 1, 2 and 3 also fall out as a result of exhaustification, for the same reasons as on the simplified semantics.

2.4 Why put exhaustification in the semantics of cause and because?

What is to be gained by putting exhaustification into the semantics of cause and because? In one sense, quite little. The exhaustification operator is well-defined, so one can always replace the exhaustified formula with its equivalent exhaustification-free result, if desired.

In another sense, however, writing the semantics of cause and because in terms of exhaustification allows us to derive some aspects of their meaning from a general mechanism, one not unique to causation or modality. Exhaustification appears in theories from a number of semantic domains, such as scalar implicatures (van Rooij & Schulz 2004; Schulz & van Rooij 2006; Spector 2007), polarity items (Krifka 1995; Chierchia 2013) and free choice inferences (Fox 2007). A number of authors go so far as to propose mandatory exhaustification, in the sense that every matrix sentence is parsed with an exhaustification operator by default (see Krifka 1995; Fox 2007; Magri 2009).

A compelling, albeit speculative idea is that the natural language system finds it economical to build meanings from familiar operations, with the more familiar the operation, the greater the gains in economy from its reuse. If we are constantly exhaustifying the sentences we interpret, as some have proposed, it is not so surprising to see the same operation appear in the lexical semantics of certain words. Exhaustification has previously been applied in the lexical semantics of Mandarin dou (Xiang 2016), approximative uses of just (Thomas & Deo 2020), and of course, only.12 If the present proposal is correct, we can add cause and because to the growing list of words whose meaning can be expressed in terms of exhaustification.

Of course, this kind of reasoning can only take us so far. Exhaustification alone does not tell us what to exhaustify, nor what the alternatives are.13 Some motivation for polar alternatives – comparing the cause with its absence – may come from looking at the relationship between causal reasoning and decision-making. A paradigm case of causal reasoning concerns an agent deciding whether or not to do an action. Faced with a decision problem about whether or not to bring about p, we may think of the simplified semantics as addressing the questions If I bring about p, will q be true? And if I do not, will q be true? and the full semantics as addressing the questions If I bring about p, will that produce q? And if I do not bring about p, will that produce q?14

Nonetheless, even if the paradigm case of causal reasoning involves polar alternatives, this still does not tell us why the paradigm case ends up hardwired into the semantics of cause and because. One may imagine an alternative meaning of cause and because which allows the set of alternatives to be contextually determined rather than fixed to be the cause’s polar alternatives, {p, ¬p}. Since the definition of exhaustification allows for any set of alternatives, to derive the entries for cause and because we propose, we must add a stipulation that the alternatives used by exhaustification are the cause’s polar alternatives. It remains to be seen whether this stipulation can be derived from general principles.

2.5 Comparing the full and simplified semantics

Before moving on to the data, let us pause to better understand the relationship between the full and simplified semantics.

Where the simplified semantics cares about whether or not the effect occurred, the full semantics cares about whether or not the cause produced the effect. It turns out that the two semantic entries are logically independent, in the sense that there are cases where the full semantics is satisfied but not the simplified semantics, and vice versa. Figure 1 shows entailment relations between the conditions of the full and simplified semantics. These are guaranteed by the two facts in (7).

- (7)

- a.

- Production is factive: p produce q entails p ∧ q.

- b.

- Modals are upward entailing in their scope:

- If q+ entails q then □(p)(q+) entails □(p)(q).

It follows that the full semantics has a stronger positive condition but a weaker negative condition compared with the simplified semantics, as shown in Figure 1.
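The two comparisons can be written out as entailments. This is a sketch of the reasoning from (7a) and (7b), with the modal parameters suppressed as in (7b).

```latex
\begin{align*}
\Box(p)(p \text{ produce } q) &\models \Box(p)(q)
  && \text{(7a) gives } p \text{ produce } q \models q;\ \text{then (7b)} \\
\Box(\neg p)(\neg p \text{ produce } q) &\models \Box(\neg p)(q)
  && \text{same reasoning with } \neg p \text{ as restrictor} \\
\neg\Box(\neg p)(q) &\models \neg\Box(\neg p)(\neg p \text{ produce } q)
  && \text{contraposition of the previous line}
\end{align*}
```

The first line says that the full positive condition entails the simplified one, and the last line says that the simplified negative condition entails the full one.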

A further property of both semantics is that counterfactual dependence, here formalized as □(¬p)(¬q), entails the negative conditions of both the full and simplified semantics. Let us start by showing that counterfactual dependence entails the simplified negative condition, ¬□(¬p)(q). It is commonly assumed that modals – like all quantificational elements – presuppose that their domain is nonempty (Cooper 1983; von Fintel 1994; Beaver 1995; Ippolito 2006). In this case, the domain of the modal at world w is the set of worlds selected by the modal when restricted by the proposition p. Let us denote this set of worlds by D(p,w). Then □(¬p)(¬q) is true just in case all worlds in D(¬p,w) are ¬q-worlds. We assume that an utterance that entails □(¬p)(¬q) presupposes that D(¬p,w) is nonempty. So counterfactual dependence, □(¬p)(¬q), together with the nonempty domain presupposition implies that some world in D(¬p,w) is a ¬q-world. Since worlds are logically consistent, this world is not a q-world. So it is not the case that every world in D(¬p,w) is a q-world: ¬□(¬p)(q), which is just the simplified negative condition.

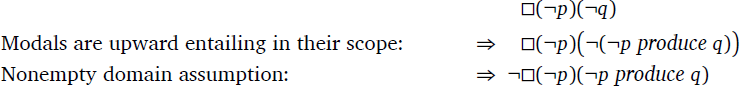

To see that counterfactual dependence entails the full negative condition (when the restricted modal has a nonempty domain), first recall that production is factive (7a). Contrapositively, if q does not occur then nothing produces q to occur; in particular, ¬p does not produce q to occur. Thus ¬q entails ¬(¬p produce q). Then as modals are upward entailing in their scope (7b), we have the following chain of implications.

□(¬p)(¬q) ⇒ □(¬p)(¬(¬p produce q)) ⇒ ¬□(¬p)(¬p produce q)

The last formula is the full negative condition.

3 Sufficiency

Now that we have a proposal for the semantics of cause and because, let us test its predictions. It is well-known that there are cases – called overdetermination cases in the literature – where a causal claim is true but the effect does not counterfactually depend on the cause (see section 4.1). Another problem, which has not received as much attention, is that there are cases of counterfactual dependence without the corresponding causal claim being true.15

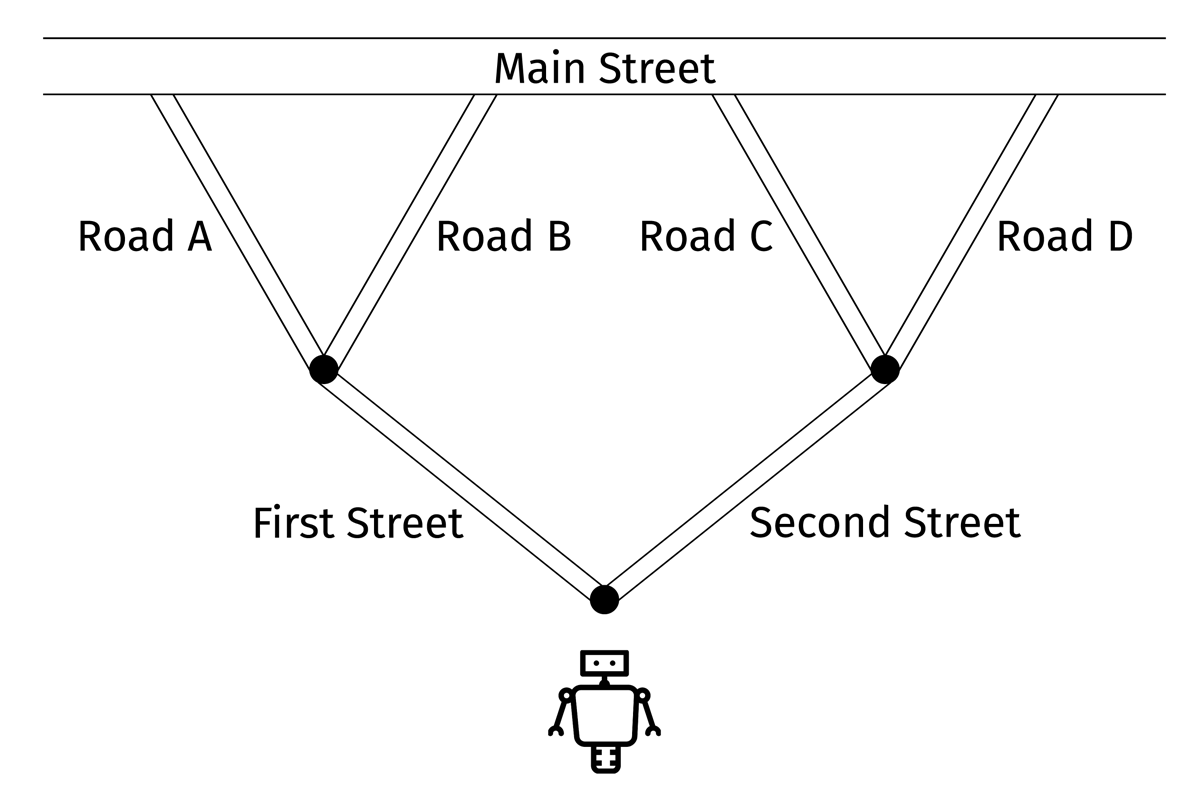

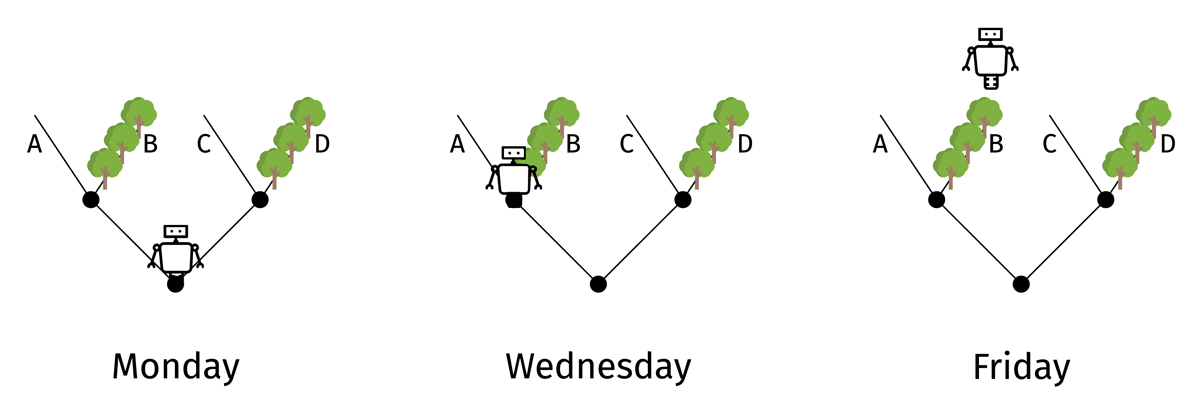

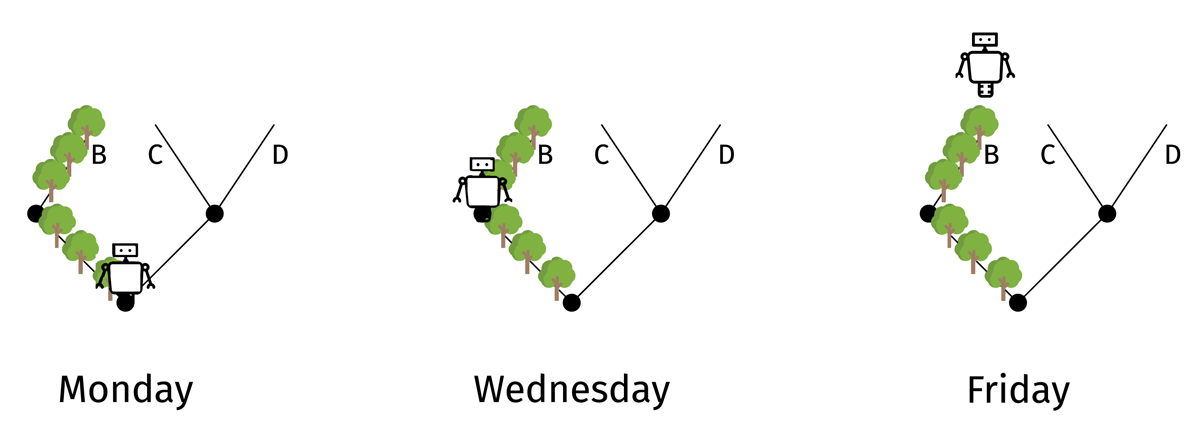

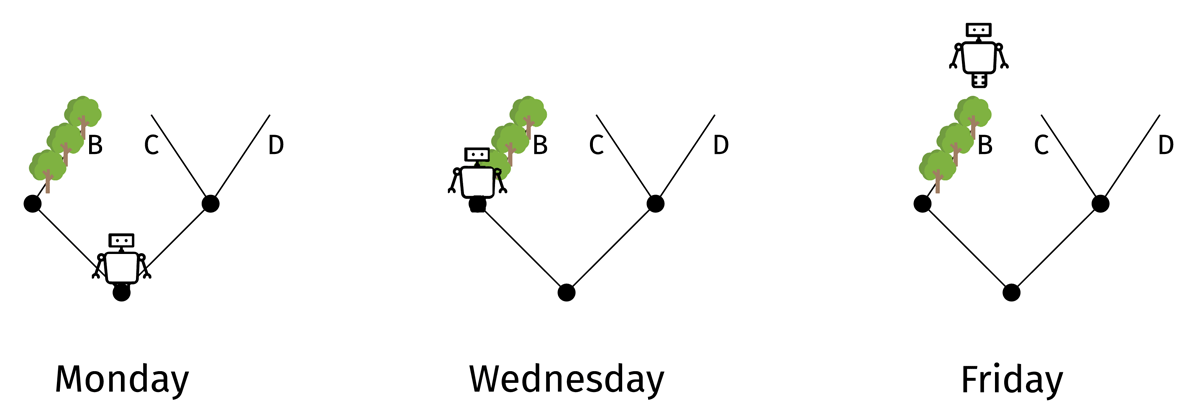

Imagine a robot has to get to Main Street, choosing between any of the four available routes to get there (see Figure 2).

When the robot reaches a fork in the road, it decides what way to go at random. It is programmed so that it must take one of the routes to Main Street and cannot reverse. On this particular day, the robot took First Street and then Road B. Now consider the sentences below.

- (8)

- a.

- The robot taking First Street caused it to take Road B.

- b.

- The robot took Road B because it took First Street.

In this context, (8a) and (8b) are intuitively false. However, the robot taking Road B counterfactually depends on it taking First Street: if it hadn’t taken First Street, it wouldn’t have taken Road B. In general, then, counterfactual dependence is not enough for the truth of the corresponding causal claim.

Intuitively, the sentences in (8) are false because the robot did not have to take Road B after taking First Street. It could have taken Road A instead. If this explanation is correct, it implies that causes are sufficient for their effects (in other words, causes ‘guarantee’, ‘determine’, ‘make’, or ‘ensure’ that their effects happen).

We can test this explanation by changing the context in a minimal way to make taking First Street sufficient for taking Road B, and checking whether our intuitions change accordingly. To that end, suppose that before setting out on its journey the robot was programmed with the rule: Always change direction! The effect of the rule is that if the robot goes left at one fork in the road, it must go right at the next one, and if it goes right at the first fork, it must go left at the second. Today, the robot took First Street and, since it went left first, its programming required it to turn right, taking Road B.

In this new context, consider again the sentences in (8), repeated below.

- (8)

- a.

- The robot taking First Street caused it to take Road B.

- b.

- The robot took Road B because it took First Street.

Suddenly the sentences in (8) are true. This is evidence that cause and because require the cause to be sufficient for the effect, a claim we have formalized as □f,g(p)(q). The two contexts differ in that the robot taking First Street was sufficient for it to take Road B when it is programmed to always change direction but not when it turns at random.

The simplified and full semantics, repeated below, each entail that the cause is sufficient for the effect, □(p)(q).

- (9)

- a.

- Simplified:

- p ∧ O□(p)(q)

- b.

- Full:

- p ∧ O□(p)(p produce q)

The simplified formula entails □(p)(q) since exhaustification entails its prejacent: O□(p)(q) entails □(p)(q). For the full semantics, recall from section 2.5 that the full positive condition entails the simplified positive condition (since p produce q entails q and modals are upward entailing in their scope); that is, O□(p)(p produce q) entails □(p)(p produce q), which in turn entails □(p)(q).

Now, in the context where the robot turns at random, the robot taking First Street was not sufficient for it to take Road B, so both the simplified and full semantics correctly predict that (8) are false in that context.

What about in the context where the robot is programmed to always change direction? In that context the robot taking First Street was sufficient for it to take Road B, so the simplified positive condition is satisfied. The full positive condition is also satisfied: the robot taking First Street was sufficient for there to be a chain of events from it taking First Street to it taking Road B, with each event counterfactually depending on the previous one. In other words, the robot taking First Street was sufficient for that to produce it to take Road B. Thus the full positive condition is also satisfied when the robot is programmed to always change direction.

Turning to the negative conditions, note that in (8) the effect counterfactually depended on the cause: if the robot hadn’t taken First Street, it wouldn’t have taken Road B. Formally, we have □(¬p)(¬q). As we saw in section 2.5, counterfactual dependence entails the negative conditions of both the full and simplified semantics. Thus the negative conditions are satisfied, and so both the full and simplified semantics correctly predict (8) to be true in the context where the robot is programmed to always change direction.

To summarize this section, the robot example provided evidence that cause and because imply that the cause is sufficient for its effect. In the context where the robot turns at random, both the full and simplified semantics correctly predict that (8) are false, and in the context where the robot is programmed to always change direction, both semantics correctly predict that (8) are true.
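The two robot contexts can be checked against the simplified semantics with a small finite-model sketch. Several things here are assumptions made for illustration: each context is modelled as a finite set of complete routes, the ordering source is ignored, and the labels `"Second"`, `"C"` and `"D"` are hypothetical names for the routes the text does not name. The helper names `box` and `because_simplified` are likewise hypothetical.

```python
from typing import Callable, List, Tuple

World = Tuple[str, str]  # (street taken, road taken)
Prop = Callable[[World], bool]


def box(domain: List[World], p: Prop, q: Prop) -> bool:
    """Toy □(p)(q): every p-world in the domain is a q-world."""
    return all(q(w) for w in domain if p(w))


def because_simplified(actual: World, domain: List[World], p: Prop, q: Prop) -> bool:
    """p ∧ □(p)(q) ∧ ¬□(¬p)(q), i.e. p ∧ O_{p,¬p} □(p)(q)."""
    not_p: Prop = lambda w: not p(w)
    return p(actual) and box(domain, p, q) and not box(domain, not_p, q)


took_first: Prop = lambda w: w[0] == "First"
took_b: Prop = lambda w: w[1] == "B"
not_first: Prop = lambda w: not took_first(w)
not_b: Prop = lambda w: not took_b(w)
actual: World = ("First", "B")

# Context 1: the robot chooses at random, so all four routes are live.
random_ctx = [("First", "A"), ("First", "B"), ("Second", "C"), ("Second", "D")]

# Context 2: "Always change direction!" Assuming First Street and Road C
# are left turns and Second Street and Road B are right turns, only two
# routes remain.
programmed_ctx = [("First", "B"), ("Second", "C")]

# Counterfactual dependence □(¬p)(¬q) holds even in the random context...
dependence_random = box(random_ctx, not_first, not_b)
print(dependence_random)  # True

# ...but sufficiency fails there, so (8) is predicted false.
print(because_simplified(actual, random_ctx, took_first, took_b))  # False

# With the rule in place, sufficiency holds and (8) is predicted true.
print(because_simplified(actual, programmed_ctx, took_first, took_b))  # True
```

The sketch covers only the simplified entry; the full entry would additionally need a model of production, which is not attempted here.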

4 Difference-making

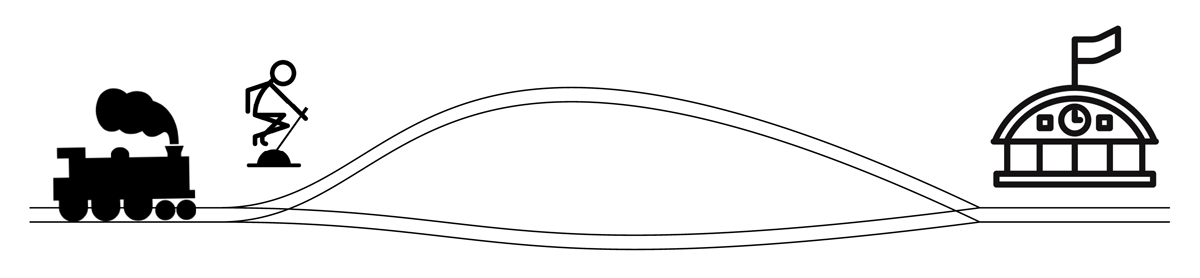

Of course, the semantics of cause and because involves more than just sufficiency. The cause must also in some sense ‘make a difference’ to the effect. As Lewis put it, “We think of a cause as something that makes a difference, and the difference it makes must be a difference from what would have happened without it” (Lewis 1973a: 557). This difference-making component is illustrated in the following scenario, due to Hall (2000) and depicted in Figure 3.

An engineer is standing by a switch in the railroad tracks. A train approaches in the distance. She flips the switch, so that the train travels down the right-hand track, instead of the left. Since the tracks reconverge up ahead, the train arrives at its destination all the same.

Consider (10) in this context.

- (10)

- a.

- The train reached the station because the engineer flipped the switch.

- b.

- The engineer flipping the switch caused the train to reach the station.

The sentences in (10) are intuitively false. The simplified and full semantics correctly predict this. This is due to their negative conditions: ¬□(¬p)(q) and ¬□(¬p)(¬p produce q), respectively. Even if the engineer had not flipped the switch, the train was guaranteed to reach the station: □(¬flip switch)(train reach station). So the simplified negative condition ¬□(¬flip switch)(train reach station) is not satisfied and (10) are predicted to be false.

Turning to the full semantics, recall that while the simplified semantics cares about whether the effect occurs, the full semantics cares about whether the cause produces the effect to occur. According to Beckers (2016: 95) and the definition of production in note 10, the engineer flipping the switch produced the train to reach the station.16 Crucially, however, if the engineer had not flipped the switch, her not flipping the switch would have also produced the train to reach the station: we have

□(¬flip switch)((¬flip switch) produce train reach station).

The full negative condition fails, so the full semantics correctly predicts (10) to be false.
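For the simplified semantics, the failure of the negative condition in the switch scenario can be checked in a two-world sketch. The modelling choices are assumptions for illustration: the circumstances are reduced to one world per position of the switch, the ordering source is ignored, and `box` is a hypothetical helper. The contrast sentence about the right-hand track is not discussed in the text and is included only to show what the toy model yields.

```python
from typing import Callable, Dict, List

World = Dict[str, object]
Prop = Callable[[World], bool]


def box(domain: List[World], p: Prop, q: Prop) -> bool:
    """Toy □(p)(q): every p-world in the domain is a q-world."""
    return all(q(w) for w in domain if p(w))


# The tracks reconverge, so the train arrives in both worlds.
flip_world: World = {"flip": True, "track": "right", "arrives": True}
no_flip_world: World = {"flip": False, "track": "left", "arrives": True}
domain = [flip_world, no_flip_world]

flip: Prop = lambda w: w["flip"] is True
no_flip: Prop = lambda w: w["flip"] is False
arrives: Prop = lambda w: w["arrives"] is True
right_track: Prop = lambda w: w["track"] == "right"

# Positive condition □(flip)(arrives) and negative condition ¬□(¬flip)(arrives).
positive = box(domain, flip, arrives)
negative = not box(domain, no_flip, arrives)
verdict = flip(flip_world) and positive and negative

print(positive)  # True
print(negative)  # False
print(verdict)   # False: (10) is predicted false

# Contrast in this toy model: flipping the switch does make a difference
# to which track the train takes.
contrast = (
    flip(flip_world)
    and box(domain, flip, right_track)
    and not box(domain, no_flip, right_track)
)
print(contrast)  # True
```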

4.1 Overdetermination

So far we have only considered cases where the full and simplified semantics agree. Let us now consider a case where they come apart. As is well-known, the idea that causation requires counterfactual dependence is plagued by a host of counterexamples (see Lewis 2000; Hall & Paul 2003; Hall 2004; Halpern 2016; Beckers 2016; Andreas & Günther 2020 and many more). Here is an oft-discussed example from Hall (2004: 235).

Suzy and Billy, expert rock-throwers, are engaged in a competition to see who can shatter a target bottle first. They both pick up rocks and throw them at the bottle, but Suzy throws hers before Billy. Consequently Suzy’s rock gets there first, shattering the bottle. Since both throws are perfectly accurate, Billy’s would have shattered the bottle if Suzy’s had not occurred, so the shattering is overdetermined.

- (11)

- a.

- The bottle broke because Suzy threw her rock at it.

- b.

- Suzy throwing her rock at the bottle caused it to break.

- (12)

- a.

- The bottle broke because Billy threw his rock at it.

- b.

- Billy throwing his rock at the bottle caused it to break.

Intuitively, the sentences in (11) are true and the sentences in (12) are false.

Let us consider how the simplified and full semantics fare with this scenario. In the Billy and Suzy case we assume that even if Suzy hadn’t thrown, the bottle was guaranteed to break. So the negative condition, ¬□(¬Suzy throw)(bottle break), is not satisfied. The simplified semantics therefore incorrectly predicts (11) to be false.

However, the full semantics correctly predicts (11) to be true. Firstly, the positive condition is satisfied. Suzy throwing the rock was sufficient for her throw to produce the bottle to break. Secondly, the negative condition is satisfied. As Beckers (2016: 842) explains, if Suzy had not thrown, the fact that she did not throw would not have produced the bottle to break. Rather, if she hadn’t thrown, Billy’s throw would have produced the bottle to break.
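
The divergence between the two semantics can be illustrated with a small sketch. It rests on strong simplifying assumptions: production is not derived from Beckers' definition but is simply recorded in each world as a label (`producer`), the modal is approximated by universal quantification over a finite list of worlds, and the two-world model is hypothetical.

```python
def box(worlds, antecedent, consequent):
    """□(antecedent)(consequent), read as universal quantification over worlds."""
    return all(consequent(w) for w in worlds if antecedent(w))

# Hypothetical model of the Suzy and Billy scenario. "producer" records what
# produced the breaking in each world (a stipulation, not a derived fact).
actual = {"suzy_throws": True, "breaks": True, "producer": "suzy_throws"}
no_suzy = {"suzy_throws": False, "breaks": True, "producer": "billy_throws"}
worlds = [actual, no_suzy]

p = lambda w: w["suzy_throws"]
not_p = lambda w: not w["suzy_throws"]
q = lambda w: w["breaks"]
p_produces_q = lambda w: w["producer"] == "suzy_throws"
# The absence of Suzy's throw never produces the breaking in this model.
not_p_produces_q = lambda w: w["producer"] == "suzy_does_not_throw"

# Simplified: p ∧ □(p)(q) ∧ ¬□(¬p)(q)
simplified = p(actual) and box(worlds, p, q) and not box(worlds, not_p, q)
# Full: p ∧ □(p)(p produce q) ∧ ¬□(¬p)(¬p produce q)
full = (p(actual)
        and box(worlds, p, p_produces_q)
        and not box(worlds, not_p, not_p_produces_q))
print(simplified, full)
```

Under these assumptions the simplified semantics rejects (11), because the bottle breaks in every world where Suzy does not throw, while the full semantics accepts it, because in those worlds it is Billy's throw, not the absence of Suzy's, that produces the breaking.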

4.2 From sufficiency and production to sufficiency for production

In the previous section we saw that because requires (i) that the cause be sufficient for the effect and (ii) that the cause produce the effect. One may wonder whether there is any interaction between the sufficiency and production conditions. One possibility is that the semantics of because requires sufficiency, and also requires production, without requiring any relationship between them.

- (13)

- Hypothesis 1: sufficiency and production.

- q because p entails □(p)(q) ∧ (p produce q).

A stronger possibility is that because requires the cause to be sufficient for the cause itself to produce the effect:

- (14)

- Hypothesis 2: sufficiency for production.

- q because p entails □(p)(p produce q).

According to Hypothesis 1, the production condition is outside the scope of the modal, while according to Hypothesis 2, it is inside the scope of the modal.

Hypothesis 2 entails Hypothesis 1. This is due to the following entailment.

- (15)

- p ∧ □(p)(p produce q) entails □(p)(q) ∧ p produce q.

This follows from the facts in (7) – that production is factive and modals are upward entailing in their scope – together with modus ponens for universal modals.

- (16)

- Modus ponens for universal modals. p ∧ □(p)(q) entails q.
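
The entailment in (15) can be spelled out step by step. The derivation below is a sketch that assumes factivity delivers at least the entailment from p produce q to q; the layout and labels are ours.

```latex
\begin{align*}
&\text{Premises:} && p, \quad \Box(p)(p \text{ produce } q) \\
&\text{Factivity (assumed form):} && (p \text{ produce } q) \models q \\
&\text{Upward entailment in scope:} && \Box(p)(p \text{ produce } q) \models \Box(p)(q) \\
&\text{Modus ponens (16):} && p \wedge \Box(p)(p \text{ produce } q) \models (p \text{ produce } q) \\
&\text{Conclusion:} && \Box(p)(q) \wedge (p \text{ produce } q)
\end{align*}
```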

While Hypothesis 2 entails Hypothesis 1 (given that q because p entails p), the converse does not hold: Hypothesis 1 does not entail Hypothesis 2. This is because it is possible for the truth of p to guarantee the truth of q, while p does not guarantee that p itself would produce q.

- (17)

- p ∧ □(p)(q) ∧ (p produce q) does not entail p ∧ □(p)(p produce q).

We witness the failure of this entailment in cases where the cause produced the effect, but it was possible for the cause to occur and yet for the effect to be produced in some other way. Here is such a scenario.

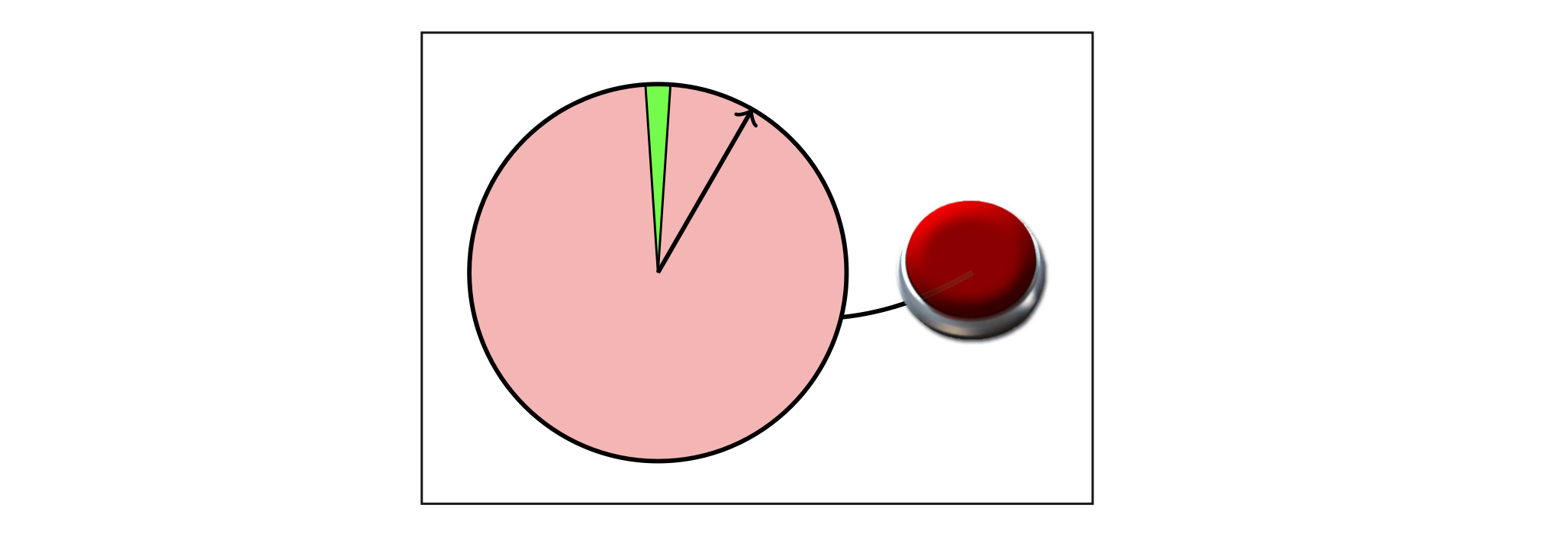

Alice and Bob are two children at the funfair with their parents. The parents decide that the children should have a souvenir of their time at the funfair: if any child does not win a teddy by the end of the day, the parents will buy one for them.

Alice and Bob play a game with a spinner and a button (see Figure 4). A pointer moves around the circle until the player pushes the button. If the pointer lands in the thin green region, the player wins a teddy. If it lands in the red region, the player gets nothing.

Alice entered the game and, by sheer luck, pushed the button at the right time. The pointer landed in the winning region and she won a teddy. Bob entered the game and pushed the button at the wrong time. The pointer landed in the red region and he didn’t win a teddy.

At the end of the day, the parents noticed that Bob hadn’t won a teddy, so they bought him one.

So Alice and Bob both entered the spinner game. Alice won and Bob lost, so Alice got a teddy but Bob did not. Now consider (18).

- (18)

- Alice got a teddy because she entered the spinner game.

Intuitively, (18) is false. Bob is here as a foil to make salient the possibility that Alice enters the game but pushes the button at the wrong time, in which case she does not get a teddy from the spinner game.

Let us now check the following three conditions.

Sufficiency: □(Alice enter)(get teddy) ✓

Since Alice was guaranteed to get a teddy, anything whatsoever was sufficient for her to get a teddy. In particular, Alice entering the game was sufficient for her to get a teddy.

Production: Alice enter produce get teddy ✓

The fact that Alice entered the game produced her to get a teddy. Without providing a formalization of this claim, we can loosely say that Alice entering the game produced her to get a teddy because there is a chain of events from her entering the game to her getting a teddy from the game such that each event counterfactually depends on the previous one.

Sufficiency for production: □(Alice enter)(Alice enter produce get teddy) ✗

Given the setup of the scenario, it was determined that Alice would get a teddy somehow, but it was not determined how she would get it. She could have hit the button at the wrong time and lost the spinner game (a possibility emphasized by the presence of Bob), in which case she would have gotten a teddy from her parents at the end of the day rather than from winning the game. In that case her entering the game would not have produced her to get a teddy; rather, her parents buying a teddy would have produced her to get one. In all, then, the fact that Alice entered the game was not sufficient for her entering the game to produce her to get a teddy.

Thus Hypothesis 2, but not Hypothesis 1, correctly predicts (18) to be false.
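
The three checks above can be reproduced in a toy model. This is a sketch under stated assumptions: the three worlds, the `producers` labels (which stipulate rather than derive what produced Alice getting a teddy), and the reading of □ as universal quantification over a finite list of worlds are all hypothetical simplifications.

```python
def box(worlds, antecedent, consequent):
    """□(antecedent)(consequent), read as universal quantification over worlds."""
    return all(consequent(w) for w in worlds if antecedent(w))

def produces(cause):
    """Proposition that `cause` is among the stipulated producers of the teddy."""
    return lambda w: cause in w["producers"]

# Hypothetical fairground worlds. In every world Alice ends up with a teddy.
wins = {"enters": True, "wins": True, "teddy": True,
        "producers": {"enters", "wins"}}
loses = {"enters": True, "wins": False, "teddy": True,
         "producers": {"parents_buy"}}
stays_out = {"enters": False, "wins": False, "teddy": True,
             "producers": {"parents_buy"}}
actual, worlds = wins, [wins, loses, stays_out]

enters = lambda w: w["enters"]
won = lambda w: w["wins"]
teddy = lambda w: w["teddy"]

sufficiency = box(worlds, enters, teddy)                               # □(enter)(teddy)
production = produces("enters")(actual)                                # enter produce teddy
sufficiency_for_production = box(worlds, enters, produces("enters"))   # □(enter)(enter produce teddy)

hypothesis_1 = sufficiency and production    # entailed conditions under Hypothesis 1
hypothesis_2 = sufficiency_for_production    # entailed condition under Hypothesis 2
winning_variant = box(worlds, won, produces("wins"))  # □(win)(win produce teddy)
print(hypothesis_1, hypothesis_2, winning_variant)
```

In this model the conditions entailed under Hypothesis 1 are met for (18), whereas the condition entailed under Hypothesis 2 fails because of the world where Alice enters and loses. Replacing the cause with winning the game makes the Hypothesis 2 condition hold.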

To test this explanation of (18)’s falsity, let us consider a different cause, one that is sufficient for the cause to produce the effect. An example is (19).

- (19)

- Alice got a teddy because she won the spinner game (and Bob got a teddy because his parents bought him one).

(19) is intuitively true. We can account for this as follows. While Alice entering the game was not sufficient for her entering the game to produce her to get a teddy, winning the game was sufficient for her winning the game to produce her to get a teddy: □(Alice win)(Alice win produce get teddy). Once (18) is minimally altered to contain a cause that was sufficient for it to produce the effect, as in (19), the judgment changes. This further supports Hypothesis 2.

Before moving on, let us pause to consider an alternative explanation of (18)’s falsity. One might suggest that (18) is false because even if Alice had not entered the spinner game, she would have gotten a teddy anyway (since her parents would get her a teddy if she didn’t already have one by the end of the day). In the Billy and Suzy case we already saw that because does not require effects to counterfactually depend on their causes. But one might think there are special reasons for this, peculiar to the Billy and Suzy case. However, (19) is also true even though the effect does not counterfactually depend on the cause: if Alice hadn’t won the spinner game, she would still have gotten a teddy. This suggests that the falsity of (18) is not due to the fact that if Alice had not entered the spinner game, she would have gotten a teddy anyway.

To summarize, the fact that (18) is false in the fairground scenario supports Hypothesis 2: because requires that the cause is sufficient for the cause itself to produce the effect.

5 Property 2: Asymmetry in strength between the positive and negative conditions

As discussed in section 1.1.2, exhaustification generates an asymmetry in modal force between the positive and negative conditions. The positive conditions are strong, requiring that in all cases selected by the modal where the cause occurs the relevant condition is met: for the simplified semantics, that the effect occurs; for the full semantics, that the cause produces the effect. In contrast, the negative conditions are weak, requiring that in some cases selected by the modal where the cause does not occur, the relevant condition is not met.

In section 3 we saw evidence that the positive condition is strong. In the robot scenario where the robot turns at random, (8) are intuitively false.

- (8)

- a.

- The robot taking First Street caused it to take Road B.

- b.

- The robot took Road B because it took First Street.

The mere possibility that the robot could have taken First Street without taking Road B was enough to violate the positive condition.

The train scenario above suggested that the semantics of because also features a negative condition. But that scenario could not tell us whether the negative condition should be strong or weak. The falsity of (10) is also compatible with a strong negative condition, one requiring that in all scenarios selected by the modal where the cause does not occur, the relevant condition is not met (in the simplified semantics, that the effect occurs; in the full semantics, that the absence of the cause produces the effect). The strong negative conditions are given in (20), where the negation is inside the modal’s scope.

- (20)

- Semantics of because with a strong negative condition.

- a.

- p ∧ □(p)(q) ∧ □(¬p)(¬q)

- Simplified

- b.

- p ∧ □(p)(p produce q) ∧ □(¬p)(¬(¬p produce q))

- Full

There is evidence that the strong negative condition is too strong. Here are some naturally occurring examples (from McHugh 2020: ex. 6).

- (21)

- a.

- Reyna was born at Royal Bolton Hospital but received a Danish passport because her mother was born in Copenhagen.17

- b.

- He has an American passport because he was born in Boston.18

- c.

- Naama Issachar … could spend up to seven-and-a-half years in a Russian prison because 9.5 grams of cannabis were found in her possession during a routine security check.19

- d.

- A 90-day study in 8 adults found that supplementing a standard diet with 1.3 cups (100 grams) of fresh coconut daily caused significant weight loss.20

Consider (21a). If Reyna’s mother hadn’t been born in Copenhagen, there are presumably many places where she could have been born instead: somewhere else in Denmark, or outside Denmark altogether. Let us suppose that if Reyna’s mother had been born somewhere else in Denmark, Reyna would still have a Danish passport (i.e. there is nothing special about Copenhagen compared to anywhere else in Denmark when it comes to receiving Danish citizenship), but if her mother had been born outside Denmark, Reyna might not have received a Danish passport.

Let us first compare the simplified semantics with the strong and weak negative condition. The strong condition is false: if Reyna’s mother hadn’t been born in Copenhagen, she could have been born somewhere else in Denmark, in which case Reyna would still have a Danish passport. In contrast, the weak condition only requires that it be possible that if Reyna’s mother hadn’t been born in Copenhagen, Reyna would not have received a Danish passport. Since Reyna’s mother could have been born outside Denmark, in which case Reyna might not have received a Danish passport, the weak simplified negative condition is true.

- (22)

- a.

- Strong:

- □(¬R’s mother born in Copenhagen)(¬R has Danish passport)

- ✗

- b.

- Weak:

- ¬□(¬R’s mother born in Copenhagen)(R has Danish passport)

- ✓

Similarly with the full semantics, the strong negative condition is false but the weak negative condition is true. If Reyna’s mother had been born somewhere else in Denmark – say, Aarhus – then the fact that she was born in Aarhus would also have produced Reyna to have a Danish passport. So the strong full negative condition, (20b), is also false. But the weak full negative condition is true, since the weak simplified negative condition is true and, as we saw in section 2.5 (Figure 1), the simplified negative condition implies the full negative condition. That is, if Reyna didn’t have a Danish passport, nothing would have produced her to have one; in particular, her mother not being born in Copenhagen would not have produced her to have one.

- (23)

- a.

- Strong:

- □(¬Copenhagen)(¬((¬Copenhagen) produce Danish passport))

- ✗

- b.

- Weak:

- ¬□(¬Copenhagen)((¬Copenhagen) produce Danish passport)

- ✓
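
The contrast between the strong and weak negative conditions in (22) and (23) can be checked in a three-world sketch. The model is hypothetical: we assume, for illustration only, that a birthplace outside Denmark yields no Danish passport, we record production as a stipulated flag (`absence_produces`), and we read □ as universal quantification over the listed worlds.

```python
def box(worlds, antecedent, consequent):
    """□(antecedent)(consequent), read as universal quantification over worlds."""
    return all(consequent(w) for w in worlds if antecedent(w))

# Hypothetical birthplaces for Reyna's mother. "absence_produces" stipulates
# whether her not being born in Copenhagen produces the Danish passport.
worlds = [
    {"birthplace": "Copenhagen", "passport": True, "absence_produces": False},
    {"birthplace": "Aarhus", "passport": True, "absence_produces": True},
    {"birthplace": "outside Denmark", "passport": False, "absence_produces": False},
]

not_copenhagen = lambda w: w["birthplace"] != "Copenhagen"
passport = lambda w: w["passport"]
no_passport = lambda w: not w["passport"]
absence_produces = lambda w: w["absence_produces"]
absence_does_not_produce = lambda w: not w["absence_produces"]

# (22) Simplified semantics
strong_simplified = box(worlds, not_copenhagen, no_passport)   # □(¬p)(¬q)
weak_simplified = not box(worlds, not_copenhagen, passport)    # ¬□(¬p)(q)

# (23) Full semantics
strong_full = box(worlds, not_copenhagen, absence_does_not_produce)  # □(¬p)(¬(¬p produce q))
weak_full = not box(worlds, not_copenhagen, absence_produces)        # ¬□(¬p)(¬p produce q)
print(strong_simplified, weak_simplified, strong_full, weak_full)
```

The Aarhus world falsifies both strong conditions, while the world outside Denmark verifies both weak ones, matching the marks in (22) and (23).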

Similarly regarding (21d), if the diet of the participants hadn’t been supplemented with 100 grams of coconut, it could have been supplemented with less than 100 grams of coconut. Intuitively, (21d) does not say that 100 grams is the minimal amount required to cause significant weight loss.

McHugh (2020: ex. 7) illustrates the point in a more extreme way with (24).

- (24)

- a.

- Computers do an awful lot of deliberation, and yet their every decision is wholly caused by the state of the universe plus the laws of nature.21

- b.

- If anything is happening at this moment in time, it is completely dependent on, or caused by, the state of the universe, as the most complete description, at the previous moment.22

- c.

- If you keep asking “why” questions about what happens in the universe, you ultimately reach the answer “because of the state of the universe and the laws of nature.”23

There the causes are far stronger (in the sense of logical entailment) than required to make the effect occur, yet evidently the causal claims are still assertable.

Now, there are countless ways in which the state of the universe could have been different than it actually is. The process leading to the computer’s decision could have been different, but also the computer could have been exactly as it was with a difference instead in, say, the weather on Pluto. For (24a) to be true, for example, the strong negative condition requires that for all of the ways in which the state of the universe could be different (say, if the weather on Pluto had been different), the computer’s decision would have been different. This is clearly too demanding. In contrast, the weak negative condition only requires that in at least one of them, the computer’s decision would have been different. In at least one of these scenarios (e.g. if the process leading to the computer’s decision had been different), the computer’s decision would have been different, so the weak negative conditions correctly predict (24a) to be assertable.

6 Property 3: The positive and negative conditions have the same background

In our description of sufficiency in section 3, we stated that p is sufficient for q in a circumstance just in case, in that circumstance, it is not nomically possible for p to be true without q being true. In this section we analyze what “the circumstances” are. It is important to understand what determines the circumstances when we discuss Property 3: that the circumstances (or ‘background’) of the positive and negative conditions are the same.

The robot scenario from section 3 illustrates why we need to relativize nomic possibility to the circumstances. There we considered two contexts: one where the robot turns at random, and one where it always changes direction. We can represent these two contexts in two separate worlds. Then what is nomically possible in one world is nomically impossible in the other world (e.g. the robot taking Road A). We can capture this fact since nomic possibility is relative to a world, which is something already built into Kratzer’s (1981) account of modality.

However, we can also represent the two robot contexts in the same world. Suppose that one day the robot is programmed to turn at random, and the next day it is reprogrammed to always change direction. Then something that was nomically possible in one world at one time is no longer nomically possible in the same world at another time (e.g. the robot taking Road A). This shows that nomic possibility is relative to more than just the world of evaluation. It is also relative to what we may call the circumstances.

We would like to understand what determines the circumstances. To that end, consider the following scenario. For the sake of continuity, we will adapt the robot case. Suppose roads B and D are lined with trees (see Figure 5). When the robot must choose whether to take a road with trees or one without, it is programmed to always take the road with trees. Otherwise it decides at random. On Monday it was positioned at the starting point. On Tuesday it took one of the roads in front of it. Since it faced two bare roads, it turned at random. On this particular occasion it happened to turn left. On Wednesday it faced roads A and B: a bare road and one with trees. On Thursday it took one of the roads. Given the robot’s programming, it took the road with trees, road B. Consider (25) and (26) in this context.

- (25)

- a.

- Given how things were on Monday, the robot took Road B because it is programmed to prefer tree-lined roads.

- b.

- Given how things were on Wednesday, the robot took Road B because it is programmed to prefer tree-lined roads.

- (26)

- a.

- Given how things were on Monday, the robot’s preference for tree-lined roads caused it to take Road B.

- b.

- Given how things were on Wednesday, the robot’s preference for tree-lined roads caused it to take Road B.

There is a contrast between the (a) sentences and the (b) sentences. Intuitively, the (a) sentences are false and the (b) sentences are true.

Intuitively, the (a) sentences are false because given how things were on Monday, the robot could have turned right, in which case it would have faced Roads C and D, and so wouldn’t have taken Road B. That is, the (a) sentences are false because they violate sufficiency.

The (b) sentences do not violate sufficiency because, given how things were on Wednesday, the robot was facing Roads A and B, so its programming guaranteed that it take Road B. Assuming that the (b) sentences satisfy the other requirements of cause and because, they are predicted to be true.

Given our analysis of sufficiency, the (a) sentences violate sufficiency because the fact that the robot turned left on Tuesday is not part of the circumstances used to interpret the (a) sentences, but is part of the circumstances used to interpret the (b) sentences. We can account for this difference by proposing that the given-clause determines the circumstances in each case. The circumstances for the (a) sentences are how things were on Monday, and the circumstances for the (b) sentences are how things were on Wednesday.

Observe that we can specify the circumstances with temporal information alone (e.g. on Monday, on Wednesday). This shows that the circumstances are a function of time. Of course, time is not enough: temporal information by itself (e.g. that it is Tuesday) does not carry any information unless one knows what world we are talking about. We see this in (25) and (26): the expression how things were on Monday/Wednesday refers to how things were on Monday/Wednesday in the world of evaluation.

6.1 The circumstances as modal base

This observation that the circumstances are a function of time is expected if what we have been calling ‘the circumstances’ are just the modal base of the modal expressed by cause and because. For it is often assumed that modal bases are sensitive to time. To see this, let us briefly review Condoravdi’s account of the interaction between tense and modality.

Condoravdi (2002) proposes that modals have a temporal perspective and a temporal orientation. The temporal perspective is the time when the possibilities are evaluated. The temporal orientation is the relationship between the temporal perspective and the time of the embedded eventuality. Consider (27).

- (27)

- a.

- He might have won the game. (Condoravdi 2002: ex. 6)

Condoravdi (2002: 62) points out that (27) has two readings, which she calls ‘epistemic’ and ‘counterfactual’. On the epistemic reading, (27) describes the speaker’s present knowledge about a past event: might has a present perspective and a past orientation. On the counterfactual reading, (27) describes what was possible at some point in the past – e.g. at half-time in the game – about an event in the future of that point: might has a past perspective and a future orientation. The two readings can be brought out with the following continuations (Condoravdi 2002: ex. 7).

- (28)

- a.

- He might have (already) won the game (# but he didn’t).

- Epistemic reading: present perspective, past orientation

- b.

- At that point he might (still) have won the game but he didn’t in the end.

- Counterfactual reading: past perspective, future orientation

Following Condoravdi (2002: 71), we assume that modal bases are a function of the world of evaluation and the temporal perspective. We can then capture the fact that the circumstances are a function of time if what we have been calling ‘the circumstances’ are the modal base of the modal expressed by because.

If we assume that the given-clauses in (25) and (26) set the modal base, we can account for the contrast between the (a) and (b) sentences. The proposition that the robot turned left on Tuesday is not part of how things were on Monday, but is part of how things were on Wednesday.

The fact that the given-clauses can manipulate the modal base shows that the embedded causal claims, given in (29), are compatible with multiple modal bases.

- (29)

- a.

- The robot took Road B because it is programmed to prefer tree-lined roads.

- b.

- The robot’s preference for tree-lined roads caused it to take Road B.

Suppose that we are evaluating (29) after the robot has completed its journey. The sentences in (29), without the given-clause, do not specify when the possibilities are to be evaluated. That is, they do not specify the modal’s temporal perspective. If the temporal perspective is set to a time before the robot turned left on Tuesday, our proposed semantics for because (both the full and simplified versions) predicts (29) to be false. If it is set to a time after the robot turned left on Tuesday, our proposed semantics predicts (29) to be true. The prediction that the sentences in (29) are ambiguous appears to be correct. We can bring out the two readings as follows.

- (30)

- a.

- The robot took Road B because it is programmed to prefer tree-lined roads. For, its programming made it take Road B rather than Road A.

- b.

- The robot didn’t take Road B because it is programmed to prefer tree-lined roads. For it could have turned right on Tuesday, in which case it would have taken Road C or D, not Road B.

We can also show it is possible to set the temporal perspective to Monday by modifying the scenario. Let us remove Road A and add trees to First Street, as in Figure 6.

Consider (29), repeated below, in this context.

- (29)

- a.

- The robot took Road B because it is programmed to prefer tree-lined roads.

- b.

- The robot’s preference for tree-lined roads caused it to take Road B.

In this scenario (29) has a true reading.

If the temporal perspective is set to Monday, the full and simplified semantics correctly predict this result. For then the positive conditions are satisfied, since the robot’s programming guarantees that it take Road B. And the negative conditions are satisfied, since if the robot had not been programmed to prefer tree-lined roads, it could have taken Roads C or D. However, if the temporal perspective is set to Wednesday, the negative conditions are not satisfied. Given how things were on Wednesday, even if the robot had not been programmed to prefer tree-lined roads, Road B was its only option.

This provides further evidence that because and cause do not fix the temporal perspective of their modals.

6.2 Testing whether the positive and negative backgrounds can differ

Let us consider one last modification of the robot scenario. Suppose that Road A is still removed, and this time there are trees only on Road B, as depicted in Figure 7. As before, the robot is programmed to prefer tree-lined roads.

On Monday the robot first faced two bare roads. On this particular day it turned left, though it could just as easily have turned right. Then on Wednesday the robot faced a single tree-lined road, Road B, so it took it. At that point Road B was its only choice, so even if it hadn’t preferred tree-lined roads, it would still have taken Road B.

Consider (29) in this context.

- (29)

- a.

- The robot took Road B because it is programmed to prefer tree-lined roads.

- b.

- The robot’s preference for tree-lined roads caused it to take Road B.

Intuitively, (29) are false in this context.

Let us consider what our semantics above has to say about this case. (Since there is no overdetermination in this scenario, the full and simplified semantics give the same verdict.) We saw above that when interpreting (29), it is possible to set the temporal perspective to Monday, before the robot made its first turn, and it is possible to set it to Wednesday, after it made its first turn.

Suppose the temporal perspective is set to Monday. Then the positive condition is false, since the robot could have turned right first and avoided Road B. But the negative condition is true, since if the robot hadn’t been programmed to prefer tree-lined roads, it could have avoided Road B by taking Roads C or D.

Suppose instead that the temporal perspective is set to Wednesday. Now the situation is reversed. The positive condition is true, since on Wednesday the robot was guaranteed to take Road B. But the negative condition is false, since if the robot hadn’t been programmed to prefer tree-lined roads, it would still have taken Road B. These results are summarized in Table 1.

Table 1: For each temporal perspective, either the positive or negative condition fails.

| Temporal perspective | Positive condition | Negative condition |
| --- | --- | --- |
| Monday | ✗ | ✓ |
| Wednesday | ✓ | ✗ |

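If the temporal perspectives of the positive and negative conditions could differ when interpreting cause or because, we would expect (29) to have a true reading in the context of Figure 7: one where the positive condition is evaluated from the perspective of Wednesday and the negative condition from the perspective of Monday. Since (29) has no such reading, the two conditions must share a temporal perspective.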
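
The pattern in the Figure 7 scenario can be reproduced by enumeration. The sketch below is a hypothetical encoding, not part of the analysis proper: worlds are triples of a preference setting, a first turn, and a road; the Monday modal base leaves the first turn open, the Wednesday modal base fixes it to left; and □ is read as universal quantification over the modal base.

```python
from itertools import product

def box(worlds, antecedent, consequent):
    """□(antecedent)(consequent), read as universal quantification over worlds."""
    return all(consequent(w) for w in worlds if antecedent(w))

# Hypothetical encoding of the scenario: turning left leads only to Road B;
# turning right leads to the bare Roads C and D.
ROADS_AFTER = {"left": ["B"], "right": ["C", "D"]}
worlds = [
    {"prefers_trees": pref, "turn": turn, "road": road}
    for pref, turn in product([True, False], ["left", "right"])
    for road in ROADS_AFTER[turn]
]

modal_bases = {
    "Monday": worlds,                                         # first turn still open
    "Wednesday": [w for w in worlds if w["turn"] == "left"],  # first turn settled
}

prefers = lambda w: w["prefers_trees"]
not_prefers = lambda w: not w["prefers_trees"]
takes_b = lambda w: w["road"] == "B"

def positive(day):
    """□(prefer)(take B) relative to the modal base for `day`."""
    return box(modal_bases[day], prefers, takes_b)

def negative(day):
    """¬□(¬prefer)(take B) relative to the modal base for `day`."""
    return not box(modal_bases[day], not_prefers, takes_b)

table_1 = {day: (positive(day), negative(day)) for day in modal_bases}
shared_reading = any(pos and neg for pos, neg in table_1.values())
mixed_reading = positive("Wednesday") and negative("Monday")
print(table_1, shared_reading, mixed_reading)
```

With a shared temporal perspective no reading satisfies both conditions, whereas the mixed setting (positive on Wednesday, negative on Monday) would satisfy both. The sketch thus shows how a semantics that allowed the perspectives to differ would wrongly predict a true reading.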

Let us consider a second scenario, to help ensure that the arguments above are not due to particularities of the robot context. Suppose there are two veterinary clinics in Alice’s region, one in village A and one in village B, each with two kinds of positions, junior and senior. (For simplicity, suppose that these four jobs are the only jobs Alice could have; for example, if Alice hadn’t been a senior vet she would have been a junior vet.) The annual salaries for each position are listed in Table 2.

Table 2: Salaries in context 1.

| | Village A | Village B |
| --- | --- | --- |
| Senior vet | 30,000 | 20,000 |
| Junior vet | 15,000 | 15,000 |

Actually, Alice works in village A and is a senior vet. Consider (31).

- (31)

- Alice earns 30,000 per year because she’s a senior vet.

Given the salaries in Table 2, (31) has a true reading.

(31) satisfies the negative condition: if Alice hadn’t been a senior vet she would have earned 15,000 per year instead. For (31) to satisfy the positive condition the modal base must include the fact that Alice works in village A. If we considered the possibility of Alice working as a senior vet in village B, she would earn 20,000, not 30,000. This is further illustrated by the fact that (32) is intuitively true.

- (32)

- Given that Alice works in village A, she earns 30,000 per year because she’s a senior vet.

Now consider (31) with respect to the salaries in Table 3.

Table 3: Salaries in context 2.

| | Village A | Village B |
| --- | --- | --- |
| Senior vet | 30,000 | 30,000 |
| Junior vet | 30,000 | 15,000 |

Given the salaries in Table 3, again (31) has a true reading. This time the situation is reversed: (31) satisfies the positive condition regardless of whether we fix the fact that Alice works in village A. But for (31) to satisfy the negative condition, we must not fix the fact that she works in village A. This is illustrated by the fact that (32) is intuitively false given the salaries in Table 3.

To summarize, the fact that (31) has a true reading for both salary tables above shows that the modal base of the modal in because is flexible: it can include or omit the fact that Alice works in village A.

Now consider (31) with respect to the salaries in Table 4.

Table 4: Salaries in context 3.

| | Village A | Village B |
| --- | --- | --- |
| Senior vet | 30,000 | 20,000 |
| Junior vet | 30,000 | 15,000 |

Intuitively, (31) is false in this context.

Suppose the fact that Alice works in village A is part of the modal base when interpreting (31). Then the positive condition holds: given that she works in village A, the fact that she is a senior vet guarantees that she earns 30,000 per year. But the negative condition fails: if she were not a senior vet, she would be a junior vet (assuming these are the only positions available) and would still earn 30,000.

Suppose instead that the fact that Alice works in village A is not part of the modal base when interpreting (31). Now the situation is reversed. The positive condition fails since Alice could have been a senior vet in village B and would have only earned 20,000 per year. But the negative condition holds, since if Alice hadn’t been a senior vet, she could have been a junior vet in village B and would have earned 15,000 per year.

These two reasons for (31)’s falsity are illustrated by the following continuations, uttered in a situation where the salaries are given by Table 4.

- (33)

- A: Alice earns 30,000 per year because she’s a senior vet.

- a.

- B: That’s not right. Even if she worked as a junior vet, she would still earn 30,000 per year.

- b.

- B′: That’s not right. The senior vets in village B only earn 20,000 per year.

To summarize, the fact that (31) has a true reading with respect to the salaries in Tables 2 and 3 provides evidence that the modal bases of the positive and negative conditions are flexible: they may include or omit the fact that Alice works in village A. But, as the falsity of (31) with respect to Table 4 shows, whichever it is, the modal’s parameters in the positive and negative conditions must be the same.
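
The judgments for the three salary tables can be checked with a short sketch of the simplified semantics. The encoding is hypothetical: each of the four jobs is one world, the modal base either fixes or leaves open that Alice works in village A, and □ is read as universal quantification over the modal base. The first conjunct of the semantics (that Alice is in fact a senior vet) holds by assumption and is omitted.

```python
def box(worlds, antecedent, consequent):
    """□(antecedent)(consequent), read as universal quantification over worlds."""
    return all(consequent(w) for w in worlds if antecedent(w))

# Salaries from the three contexts, keyed by (role, village).
TABLES = {
    "Table 2": {("senior", "A"): 30000, ("senior", "B"): 20000,
                ("junior", "A"): 15000, ("junior", "B"): 15000},
    "Table 3": {("senior", "A"): 30000, ("senior", "B"): 30000,
                ("junior", "A"): 30000, ("junior", "B"): 15000},
    "Table 4": {("senior", "A"): 30000, ("senior", "B"): 20000,
                ("junior", "A"): 30000, ("junior", "B"): 15000},
}

senior = lambda w: w["role"] == "senior"
not_senior = lambda w: w["role"] != "senior"
earns_30k = lambda w: w["salary"] == 30000

def conditions(salaries, fix_village_a):
    """Return (positive, negative) for (31) relative to one modal base."""
    worlds = [{"role": r, "village": v, "salary": s}
              for (r, v), s in salaries.items()]
    base = [w for w in worlds if w["village"] == "A"] if fix_village_a else worlds
    positive = box(base, senior, earns_30k)          # □(senior)(earns 30,000)
    negative = not box(base, not_senior, earns_30k)  # ¬□(¬senior)(earns 30,000)
    return positive, negative

def has_true_reading(salaries):
    """True if some single modal base satisfies both conditions."""
    return any(all(conditions(salaries, fixed)) for fixed in (True, False))

readings = {name: has_true_reading(s) for name, s in TABLES.items()}
# Mixed bases for Table 4: positive with village A fixed, negative with it open.
mixed_table_4 = (conditions(TABLES["Table 4"], True)[0]
                 and conditions(TABLES["Table 4"], False)[1])
print(readings, mixed_table_4)
```

Requiring one modal base for both conditions yields a true reading for Tables 2 and 3 but not for Table 4. Letting the two conditions use different modal bases would make (31) true for Table 4 as well, contrary to the judgment reported above.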

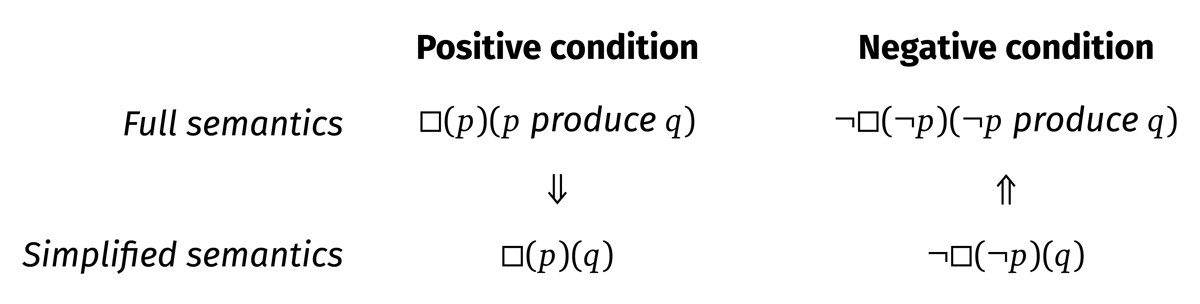

The fact that the modals of the positive and negative condition must have the same modal base is exactly what we expect from exhaustification. Even though the semantics of cause and because involves two modals (one in the positive condition and one in the negative condition), using exhaustification we may propose that their semantics in fact contains a single modal, which is copied by exhaustification. Since exhaustification only modifies the cause – replacing p with ¬p – it copies the modal without touching its parameters f and g.

- (34)

- Semantics of because (simplified).

- ⟦q because p⟧

- = p ∧ O{p,¬p} □f,g(p)(q)

- = p ∧ □f,g(p)(q) ∧ ¬□f,g(¬p)(q)

- (35)

- Semantics of because (full).

- ⟦q because p⟧

- = p ∧ O{p,¬p} □f,g(p)(p produce q)

- = p ∧ □f,g(p)(p produce q) ∧ ¬□f,g(¬p)(¬p produce q)

If the semantics of because does not include exhaustification, then each modal is generated independently, as in (36).

- (36)

- a.

- p ∧ □f,g(p)(q) ∧ ¬□f′,g′ (¬p)(q)

- b.

- p ∧ □f,g(p)(p produce q) ∧ ¬□f′,g′(¬p)(¬p produce q)

Since each modal comes with a modal base and ordering source, without further constraints it is conceivable that the two modals could differ in their parameters. To avoid this possibility we may, of course, stipulate that the modals’ parameters must be identical.

- (37)

- a.

- p ∧ □f,g(p)(q) ∧ ¬□f′,g′(¬p)(q) ∧ f = f′ ∧ g = g′

- b.

- p ∧ □f,g(p)(p produce q) ∧ ¬□f′,g′(¬p)(¬p produce q) ∧ f = f′ ∧ g = g′

Now, it is reasonable to expect that two modals within the same lexical entry would be subject to such a constraint, forcing their parameters to be the same. The benefit of writing the semantics of cause and because using exhaustification is that we derive this constraint automatically, without needing to add it as a separate requirement.24
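
The way exhaustification copies a single modal can be made concrete with a small sketch. It is an illustration under simplifying assumptions, not the official definition of O: the modal is built once from a modal base (the ordering source g is ignored), and a hypothetical function `exhaustify` applies the same prejacent to the cause and to its negation. The light-switch model is likewise hypothetical.

```python
def make_box(modal_base):
    """Build one modal from a modal base (ordering source ignored in this sketch)."""
    def box(antecedent, consequent):
        return all(consequent(w) for w in modal_base if antecedent(w))
    return box

def exhaustify(prejacent, cause):
    """O over {p, ¬p}: assert the prejacent of p and deny the prejacent of ¬p.

    Only the cause is replaced; the modal inside `prejacent` is reused as is.
    """
    negated_cause = lambda w: not cause(w)
    return prejacent(cause) and not prejacent(negated_cause)

def because_simplified(actual, modal_base, p, q):
    """p ∧ O{p,¬p} □(p)(q), with a single modal shared by both conditions."""
    box = make_box(modal_base)                 # the modal is created exactly once
    prejacent = lambda cause: box(cause, q)
    return p(actual) and exhaustify(prejacent, p)

# Hypothetical light-switch model: the light is on just in case Alice flicks the switch.
flicked = {"flick": True, "light_on": True}
unflicked = {"flick": False, "light_on": False}
result = because_simplified(
    flicked, [flicked, unflicked],
    lambda w: w["flick"], lambda w: w["light_on"],
)
print(result)
```

Because `exhaustify` never sees the modal base, there is no place in this design where the positive and negative conditions could be given different parameters. With two independently built modals, the identity would have to be imposed separately.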

7 Economy

The previous sections provided evidence that the semantics of cause and because satisfies properties 1, 2 and 3. These three properties all point to the presence of an exhaustification operator in the lexical semantics of cause and because. One may wonder about the status of this operator. Is it always present, or is it subject to licensing conditions?

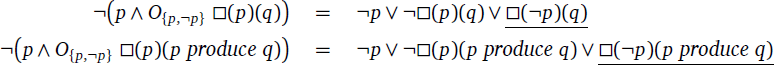

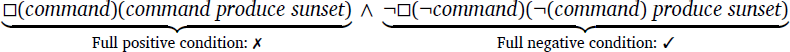

To answer this question, a key test case is how cause and because behave under negation. It is commonly assumed that exhaustification is subject to an economy condition that prevents it from appearing when it would lead to an overall weaker meaning (Chierchia 2013; Fox & Spector 2018). If the exhaustification operator in the semantics of cause and because is subject to this constraint, we would expect the following parses of cause and because under negation to be ruled out by Economy.

Without exhaustification the underlined disjunct disappears:

Under negation, then, exhaustification in the semantics of cause and because leads to a weaker meaning.
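To make the weakening concrete, the comparison can be worked out for the simplified entry in (34), suppressing the parameters f and g. This is a sketch under the assumption that negation takes scope over the whole entry, including the conjunct p.

$$
\begin{aligned}
\text{with } O:\quad \neg\bigl(p \wedge O\,\Box(p)(q)\bigr) &\Leftrightarrow \neg\bigl(p \wedge \Box(p)(q) \wedge \neg\Box(\neg p)(q)\bigr) \\
&\Leftrightarrow \neg p \vee \neg\Box(p)(q) \vee \underline{\Box(\neg p)(q)} \\
\text{without } O:\quad \neg\bigl(p \wedge \Box(p)(q)\bigr) &\Leftrightarrow \neg p \vee \neg\Box(p)(q)
\end{aligned}
$$

Every situation that verifies the disjunction without O also verifies the disjunction with O, but not conversely, so the parse with O is the weaker of the two.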

However, it turns out that the only parse of not … because and not … cause that correctly accounts for the data is one that violates Economy, as we see now.

7.1 Because and economy: data

In de Saint-Exupéry’s The Little Prince, the protagonist visits a king who claims to be able to command the sun to set. Suppose the king commands the sun to set, and sure enough, some time later it sets. Unfortunately for the king’s ego, the following sentences are false.

- (38)

- a.

- The sun set because the king commanded it.

- b.

- The king’s command caused the sun to set.

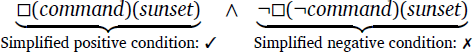

The simplified and full semantics account for the falsity of (38) in different ways. On the simplified semantics (38) are false since the sun would have set even if the king hadn’t commanded it; in symbols, □(¬command)(sunset). The simplified negative condition fails:

On the full semantics, by contrast, (38) are false because the king’s command did not produce the sun to set. This implies that the king’s command is not sufficient for it to produce the sun to set; in symbols, ¬□(command)(command produce sunset).25 The full positive condition fails:

Compare this with the train track scenario. According to the simplified semantics, (38) are false for the same reason that (10), repeated below, are false in the train track scenario: the train would have reached the station anyway.

- (10)

- a.

- The train reached the station because the engineer flipped the switch.

- b.

- The engineer flipping the switch caused the train to reach the station.

According to the full semantics, by contrast, (10) is false for a different reason than (38). Pulling the lever produced the train to reach the station (because there is a chain of events beginning with the engineer pulling the lever, through the train taking the side track, to the train reaching the station). But symmetrically, not pulling the lever would have also produced the train to reach the station, so the full semantics predicts (10) to be false.

Putting these sentences under negation, we observe that the following sentences are intuitively true (where not … because is read with not scoping above because).

- (39)

- a.

- The sun did not set because the king commanded it.

- b.

- The king’s command did not cause the sun to set.

- (40)

- a.

- The train did not reach the station because the engineer flipped the switch.

- b.

- The engineer flipping the switch did not cause the train to reach the station.

With these data at hand, let us see which parses using exhaustification account for them.

7.2 Because and economy: analysis

For the simplified semantics, the above data are compatible with two parses. The first, ¬(p ∧ O□(p)(q)), violates Economy.26 The second, which Fox & Spector (2018: ex. 70) discuss, features a higher exhaustification operator whose alternative is the prejacent without exhaustification: OALT¬O□(p)(q), where ALT = {¬O□(p)(q), ¬□(p)(q)}. In essence, the higher operator adds that the lower operator was required for the sentence to be true. This parse does not violate Economy.
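The effect of the higher operator can be checked step by step. The derivation below is a sketch that assumes the simplified entry in (34), so that O□(p)(q) abbreviates □(p)(q) ∧ ¬□(¬p)(q), and assumes that the higher operator negates the one alternative its prejacent does not entail, namely ¬□(p)(q).

$$
\begin{aligned}
O_{ALT}\,\neg O\,\Box(p)(q) &\Leftrightarrow \neg O\,\Box(p)(q) \wedge \neg\neg\Box(p)(q) \\
&\Leftrightarrow \bigl(\neg\Box(p)(q) \vee \Box(\neg p)(q)\bigr) \wedge \Box(p)(q) \\
&\Leftrightarrow \Box(p)(q) \wedge \Box(\neg p)(q)
\end{aligned}
$$

On this parse the negated sentence says that q was guaranteed both with p and without it, which is a stronger claim than the prejacent ¬O□(p)(q) alone.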

Table 5 gives four possible parses of not … because with exhaustification, what truth value each predicts for (39) and (40), and whether the parse satisfies Economy.

Table 5: Possible parses of not … (be)cause on the simplified semantics.

| Parse | Simplified meaning | (39) | (40) | Economy |
| --- | --- | --- | --- | --- |
| ¬□(p)(q) | ¬□(p)(q) | F ✗ | F ✗ | ✓ |
| O¬□(p)(q) | ¬□(p)(q) ∧ □(¬p)(q) | F ✗ | F ✗ | ✓ |
| ¬O□(p)(q) | ¬□(p)(q) ∨ □(¬p)(q) | T ✓ | T ✓ | ✗ |
| OALT¬O□(p)(q) | □(p)(q) ∧ □(¬p)(q) | T ✓ | T ✓ | ✓ |

The table shows that two parses of not … because on the simplified semantics correctly predict (39) and (40) to be true: ¬O□(p)(q) and OALT¬O□(p)(q). Of these, only the latter satisfies Economy.

This changes when we turn to the full semantics. Table 6 shows that only one parse of the full semantics correctly predicts the truth of (39) and (40). This is also the only parse that violates Economy. In the table we use p ⇝ q as shorthand for p produce q. As above, we consider the parse OALT¬O□(p)(p ⇝ q) where ALT = {¬O□(p)(p ⇝ q), ¬□(p)(p ⇝ q)}.

Table 6: Possible parses of not … (be)cause on the full semantics.

| Parse | Full meaning | (39) | (40) | Economy |
| --- | --- | --- | --- | --- |
| ¬□(p)(p ⇝ q) | ¬□(p)(p ⇝ q) | T ✓ | F ✗ | ✓ |
| O¬□(p)(p ⇝ q) | ¬□(p)(p ⇝ q) ∧ □(¬p)(¬p ⇝ q) | F ✗ | F ✗ | ✓ |
| ¬O□(p)(p ⇝ q) | ¬□(p)(p ⇝ q) ∨ □(¬p)(¬p ⇝ q) | T ✓ | T ✓ | ✗ |
| OALT¬O□(p)(p ⇝ q) | □(p)(p ⇝ q) ∧ □(¬p)(¬p ⇝ q) | F ✗ | T ✓ | ✓ |

We saw in section 4.1 that the full semantics is superior to the simplified semantics in overdetermination cases (i.e. cases without counterfactual dependence where the causal claim is nonetheless true). Assuming, then, that the full semantics is the correct semantics of cause and because, Table 6 shows that the exhaustification operator in the semantics of cause and because violates Economy. This is not so surprising if exhaustification is hard-coded into the lexical semantics of these words, making it obligatory even when it leads to an overall weaker meaning.
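The truth values for the full semantics can be recomputed mechanically. The following Python is a minimal sketch, not the paper's formal system, and every name in it (`exh`, `entails`, `negate`, the parse labels) is hypothetical. It treats the two modal claims as logically independent atoms. Following (35), it reads the negative condition as □(¬p)(¬p ⇝ q). It implements exhaustification as asserting the prejacent and negating every alternative the prejacent does not entail. The scenario values come from section 7.1, except the king's value for the negative condition, which is an assumption (not commanding would not have produced the sunset either).

```python
from itertools import product

# Two atomic claims, treated as logically independent (an assumption):
#   "pos": □(p)(p ⇝ q)      "neg": □(¬p)(¬p ⇝ q)
VALUATIONS = [dict(pos=a, neg=b) for a, b in product([True, False], repeat=2)]

pos = lambda v: v["pos"]
neg = lambda v: v["neg"]


def negate(phi):
    return lambda v: not phi(v)


def entails(phi, psi):
    """phi entails psi if psi holds at every valuation where phi holds."""
    return all(psi(v) for v in VALUATIONS if phi(v))


def exh(prejacent, alternatives):
    """Assert the prejacent and negate each alternative it does not entail."""
    excluded = [alt for alt in alternatives if not entails(prejacent, alt)]
    return lambda v: prejacent(v) and not any(alt(v) for alt in excluded)


plain = negate(pos)                          # ¬□(p)(p ⇝ q)
outer = exh(negate(pos), [negate(neg)])      # O¬□(p)(p ⇝ q)
inner = negate(exh(pos, [neg]))              # ¬O□(p)(p ⇝ q)
higher = exh(inner, [inner, negate(pos)])    # O_ALT ¬O□(p)(p ⇝ q)

# Scenario values (the king's "neg" value is an assumption, see lead-in).
king = dict(pos=False, neg=False)    # scenario for (39)
train = dict(pos=True, neg=True)     # scenario for (40)

parses = {"plain": plain, "outer": outer, "inner": inner, "higher": higher}
table = {name: (phi(king), phi(train)) for name, phi in parses.items()}

# Crude stand-in for Economy: does adding O under negation strictly weaken?
inner_is_weaker = entails(plain, inner) and not entails(inner, plain)

for name, row in table.items():
    print(name, row)
print("inner strictly weaker than plain:", inner_is_weaker)
```

Only `inner`, the parse with exhaustification directly under negation, comes out true in both scenarios, and it is also the parse that is strictly weaker than its counterpart without O.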

8 Conclusion

In this paper we saw evidence that the semantics of because and cause satisfies three properties.

Property 1. The comparative nature of cause and because.

The semantics of cause and because involves comparing what would happen in the presence of the cause with what would happen in its absence.

Property 2. The asymmetry in strength between the two conditions.

The positive condition has universal modal force while the negative condition has existential modal force.

Property 3. The positive and negative conditions have the same background.

The facts from the actual world that are held fixed when evaluating the positive and negative conditions are the same.

On the surface, these properties are all quite different. However, we saw that we can account for them all in a uniform way, by proposing that there is an exhaustification operator in the lexical semantics of cause and because. Now, we are not forced to write the semantics of these words in terms of exhaustification; as discussed in section 2.4, we can always rewrite their semantics without exhaustification if desired. But writing it with exhaustification allows us to account for three features of their semantics using a domain-general operation, one not unique to causality or modality. In a sense, then, what we have shown is that the meanings of cause and because are more ordinary than we may have imagined.

Notes

- Comparing the semantics of cause and because requires assuming some relationship between their relata (nouns and to-infinitives in the case of cause, clauses in the case of because). We will assume that nouns and to-infinitives express clauses; for example, in (1) the noun Alice flicking the switch expresses the clause Alice flicked the switch and the to-infinitive the light to turn on expresses the clause the light turned on. This allows us to say that cause and because have the same semantics, in the sense that c cause e and q because p have the same truth conditions when noun c expresses clause p and to-infinitive e expresses clause q. [^]

- Copley & Wolff (2014: 55) offer the following example.

- (i)

- a.

- Lance Armstrong won seven Tours de France because of drugs.

- b.

- Drugs caused Lance Armstrong to win seven Tours de France.

According to Copley and Wolff, (ia) is true but (ib) is false. I find these data a bit too subtle to draw robust conclusions. Some clearer differences between cause and because concern ‘noncausal’ or ‘explanatory’ readings of because. Compare:

- (ii)

- a.

- S satisfies the axiom of extensionality because it is a set.

- b.

- #The fact that S is a set causes it to satisfy the axiom of extensionality.

- (iii)

- Uttered in a situation where B is false.

- a.

- A ∨ B is true because A is true.

- b.

- #The fact that A is true causes A ∨ B to be true.

We will not attempt to account for these differences between cause and because here. For discussion of these readings of because see Kac (1972); Powell (1973); Kim (1974); Morreall (1979); Lange (2016). [^]

- To simplify notation, we will sometimes omit the parameters and simply write □(p)(q) for □f,g(p)(q). [^]

- For simplicity’s sake we use Krifka’s (1993) entry for only. Our results also follow from Fox’s (2007) exhaustivity operator, based on the notion of innocent exclusion. For an overview and comparison of exhaustivity operators see Spector (2016). [^]

- For brevity, we will write O{p, ¬p} φ where the alternatives are φ itself, and the result of substituting ¬p for p in φ. We will also sometimes write O{p, ¬p} simply as O. [^]

- The inference to the negative condition is reminiscent of conditional perfection (Geis & Zwicky 1971). For an approach to conditional perfection that uses exhaustification and assumes that if not-p, q is an alternative to if p, q, see Bassi & Bar-Lev (2018: §5). Note also that it does not matter whether we take the positive condition as the prejacent and derive the negative condition by exhaustification, or vice versa, take the negative condition as the prejacent and derive the positive condition by exhaustification: O{p,¬p} □f,g(p)(q) is equivalent to O{p,¬p} ¬□f,g(¬p)(q). [^]

- We place the condition that the cause occurred (p) outside the scope of exhaustification because otherwise exhaustification would be vacuous, as we see in the following chain of equivalences.

[^]

- Note that we do not need to add q as a conjunct to (3) since p ∧ □f,g(p)(q) entails q: if p is true and is sufficient for the truth of q, then q is also true.

Our entry for because in (3) assigns the same status to the condition that the cause occurred (p) as it does to the other conjunct O{p,¬p} □f,g(p)(q). Both are entailments. Alternatively, one might propose that p is encoded as a presupposition in the lexical semantics of because. Such a stipulation does not account for why some inferences rather than others are selected as presuppositions in the first place (see Abrusán 2011; 2016 for discussion). Moreover, the inference from cause and because that their arguments are true is a soft presupposition in the sense of Abusch (2002; 2010), as it is easily suspendable, as shown in (i).

- (i)

- a.

- The outcry which followed Morgan was not because the House of Lords had changed the law but because the public mistakenly thought it had done so. (Source: Temkin 2002)

- b.

- No, the coronavirus did not cause the death rate to drop in Chicago… Overall, deaths don’t appear to be declining. (Source: Politifact.com, 3 April 2020)

Romoli (2012; 2015) proposes that the projection properties of because are in fact due to a scalar implicature. An utterance of ¬(q because p) triggers the alternatives ¬p and ¬q. Since ¬(q because p) – whose meaning according to (3) is given in (iia) – entails neither alternative, we derive the implicatures in (iib).

- (ii)

- a.

- ¬(q because p)

- ⇔

- ¬p ∨ ¬□(p)(q) ∨ □(¬p)(q)

- b.

- OALT ¬(q because p)

- ⇔

- ¬(q because p) ∧ p ∧ q

- where ALT = {¬(q because p), ¬p, ¬q}.

Given Romoli’s account, we can capture the projection properties of because without needing to assign a special status to p in the lexical semantics of because. [^]

- For more on how polar alternatives are generated by interaction with focus, see e.g. Biezma & Rawlins (2012); Kamali & Krifka (2020). [^]

- For the sake of concreteness, we provide a working formalization of production in (i). The definition is directly inspired by Lewis (1973a) and Beckers (2016: Definition 13). The gist of the formalization is that a proposition p produced a proposition q just in case there is a chain of events beginning with a p-event and ending with a q-event through time such that each event on the chain actually occurred, and the fact that each event occurred at the time that it did counterfactually depends on the fact that a previous event on the chain occurred at the time that it did.

- (i)

- a.

- Where p is a proposition and t is a point in time, let us call pt a temporally-indexed proposition and define that pt is true at a world w just in case p is true at world w at time t.

- b.

- We define that p produce p′ is true at a world w just in case there is a set of temporally-indexed propositions A = {qt : t ∈ T} such that

- 1) T is a dense set of points in time: i.e. each t ∈ T is a point in time, and for each t, t′ ∈ T, if there is a point in time t″ such that t < t″ < t′, then t″ ∈ T.

- 2) p entails the earliest proposition in A, and p′ entails the latest proposition in A: i.e. there are qt, q′t′ ∈ A such that p entails q and p′ entails q′, and t ≤ t″ ≤ t′ for all t″ ∈ T.